Evaluation of PAFT: A Parallel Training Paradigm for Effective LLM Fine-Tuning

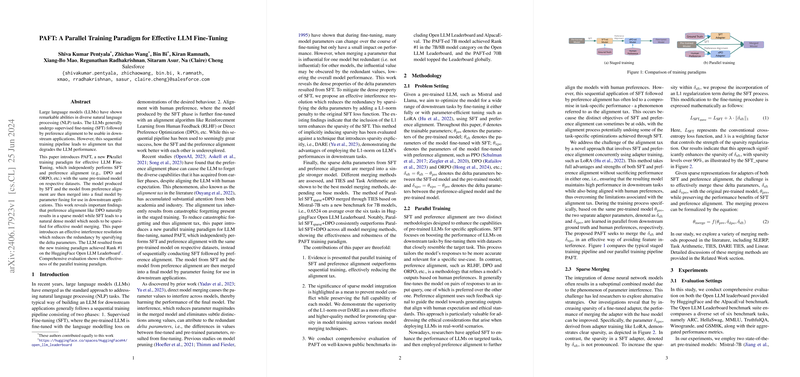

The paper "PAFT: A Parallel Training Paradigm for Effective LLM Fine-Tuning" addresses a fundamental challenge in applying large language models (LLMs) to NLP tasks: the "alignment tax" that arises from the sequential structure of existing fine-tuning frameworks. In the typical pipeline, supervised fine-tuning (SFT) is followed by a separate preference alignment stage. This second stage often degrades the capabilities gained during SFT through catastrophic forgetting. The paper proposes PAFT, a parallel training paradigm that performs SFT and preference alignment concurrently, substantially mitigating the degradation associated with sequential workflows.

Methodology and Results

PAFT departs from the traditional sequential paradigm. It applies SFT and a preference alignment algorithm, such as Direct Preference Optimization (DPO) or Odds Ratio Preference Optimization (ORPO), to the same pre-trained model in parallel, each on its own dataset, and then merges the two resulting models into one. The key insight is that the delta parameters produced by SFT (the difference between the fine-tuned and the pre-trained weights) are dense, and this density causes interference when they are merged with those of the preference-aligned model. To address this, PAFT adds an L1-norm penalty on the delta parameters during SFT. The penalty induces sparsity and thereby reduces interference when the models are merged.
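The training-and-merging scheme described above can be sketched as follows. This is a minimal, hypothetical illustration that assumes each model's weights are flattened into a plain list of floats. The helper names (`delta`, `l1_penalized_sft_loss`, `soft_threshold`, `proximal_l1_step`, `merge_task_arithmetic`) and the penalty weight `lam` are illustrative and do not come from the paper. The L1 penalty on delta parameters is taken from the description above. The soft-thresholding (proximal) update is one standard way to optimize an L1 term and is used here only because it shows how small deltas become exactly zero. Plain task-arithmetic merging, which adds both deltas back onto the pre-trained weights, is an assumed stand-in for whatever merging method the paper actually uses.

```python
from typing import List

Weights = List[float]  # a model's parameters, flattened (simplifying assumption)


def delta(finetuned: Weights, pretrained: Weights) -> Weights:
    """Delta parameters: fine-tuned weights minus the shared pre-trained weights."""
    return [f - p for f, p in zip(finetuned, pretrained)]


def l1_penalized_sft_loss(sft_loss: float, weights: Weights,
                          pretrained: Weights, lam: float) -> float:
    """SFT loss plus lam * ||weights - pretrained||_1 (lam is a hypothetical hyperparameter)."""
    return sft_loss + lam * sum(abs(d) for d in delta(weights, pretrained))


def soft_threshold(x: float, t: float) -> float:
    """Proximal operator of t * |x|: shrinks x toward 0 and zeroes it when |x| <= t."""
    if abs(x) <= t:
        return 0.0
    return x - t if x > 0 else x + t


def proximal_l1_step(weights: Weights, pretrained: Weights, grads: Weights,
                     lr: float, lam: float) -> Weights:
    """One proximal-gradient step: an ordinary SFT gradient step, then soft-threshold each delta.

    This optimizer choice is an assumption made for illustration; the paper may
    simply add the penalty to the loss and use a standard optimizer.
    """
    updated = []
    for w, p, g in zip(weights, pretrained, grads):
        d = (w - lr * g) - p                             # delta after the plain gradient step
        updated.append(p + soft_threshold(d, lr * lam))  # small deltas snap back to exactly 0
    return updated


def merge_task_arithmetic(pretrained: Weights, sft: Weights, pref: Weights,
                          scale: float = 1.0) -> Weights:
    """Assumed stand-in merge: add the SFT and preference deltas onto the pre-trained weights."""
    d_sft, d_pref = delta(sft, pretrained), delta(pref, pretrained)
    return [p + scale * (a + b) for p, a, b in zip(pretrained, d_sft, d_pref)]


# Toy usage with made-up numbers.
pretrained = [0.0, 0.0, 0.0]
sft = proximal_l1_step([0.0, 0.0, 0.0], pretrained, grads=[-5.0, 0.5, 0.0], lr=0.1, lam=1.0)
# sft == [0.4, 0.0, 0.0]: the small second update was zeroed by the L1 shrinkage
pref = [0.1, 0.2, 0.0]  # pretend output of a parallel DPO/ORPO run
merged = merge_task_arithmetic(pretrained, sft, pref)
# merged == [0.5, 0.2, 0.0]
```

Because both deltas are measured from the same pre-trained weights, merging here reduces to adding them back. The fewer coordinates the SFT delta touches, the fewer places it can collide with the preference delta.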

Comprehensive experiments support the efficacy of PAFT. Models fine-tuned with PAFT achieve high rankings on public leaderboards such as the HuggingFace Open LLM Leaderboard, and PAFT consistently outperforms both independently and sequentially fine-tuned models. The L1-norm penalty increases the sparsity of the SFT delta parameters and makes parameter merging more effective, as reflected in the reported benchmark results.
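One hypothetical way to make "sparsity" and "interference" concrete is to measure the fraction of zero entries in a delta vector, then count the coordinates that both deltas modify and how many of those disagree in sign. The helpers `sparsity` and `overlap_and_conflicts`, the tolerance `tol`, and the toy vectors below are illustrative assumptions, not the paper's metrics or data.

```python
from typing import Sequence, Tuple


def sparsity(delta: Sequence[float], tol: float = 1e-8) -> float:
    """Fraction of delta entries with magnitude <= tol, i.e. treated as exactly zero."""
    return sum(1 for d in delta if abs(d) <= tol) / len(delta)


def overlap_and_conflicts(d1: Sequence[float], d2: Sequence[float],
                          tol: float = 1e-8) -> Tuple[int, int]:
    """Count coordinates where both deltas are non-zero, and how many of those disagree in sign."""
    both = [(a, b) for a, b in zip(d1, d2) if abs(a) > tol and abs(b) > tol]
    conflicts = sum(1 for a, b in both if a * b < 0)
    return len(both), conflicts


# Toy deltas (made-up numbers for illustration, not results from the paper).
dense_sft = [0.1, -0.2, 0.05, 0.3]
sparse_sft = [0.0, -0.2, 0.0, 0.3]  # same update with its small entries zeroed out
pref = [-0.1, 0.1, 0.02, 0.2]

print(sparsity(dense_sft), overlap_and_conflicts(dense_sft, pref))    # 0.0 (4, 2)
print(sparsity(sparse_sft), overlap_and_conflicts(sparse_sft, pref))  # 0.5 (2, 1)
```

In this toy case, zeroing the small SFT entries halves the number of shared coordinates and removes one sign conflict with the preference delta. That is the kind of reduced interference the L1 penalty is meant to encourage.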

Implications

The paper has several significant implications for NLP applications and model training. First, by offering a robust way to avoid the alignment tax of sequential training, PAFT provides a path toward more capable and efficient LLM deployment across diverse tasks. This gain in efficiency may encourage further research into parallel training paradigms and sparsity techniques for LLMs.

Second, explicitly incorporating sparsity into fine-tuning holds substantial promise for improving model performance after merging. More broadly, the findings favor parallel training paradigms that can fully draw on large-scale data and diverse task criteria, potentially setting a new standard for LLM fine-tuning methodology.

Future Directions

The research suggests several directions for future work. Given PAFT's success with DPO and ORPO, a natural next step is to test it with other alignment algorithms, which could establish its robustness across a wider range of NLP tasks.

Further studies could also examine the theoretical basis for why sparsity reduces conflicts between parameters trained in parallel when those parameters are merged. A rigorous study of the trade-off between sparsity and model performance would clarify why the merged models improve, and could lead to more sophisticated fine-tuning methods.

In conclusion, the PAFT framework offers a promising strategy for overcoming the limitations of sequential fine-tuning by combining parallel training with sparsity. These advances may signal a shift toward more efficient and capable LLM fine-tuning strategies, opening avenues for continued innovation in NLP model training.