Overview of "Pariksha: A Large-Scale Investigation of Human-LLM Evaluator Agreement on Multilingual and Multi-Cultural Data"

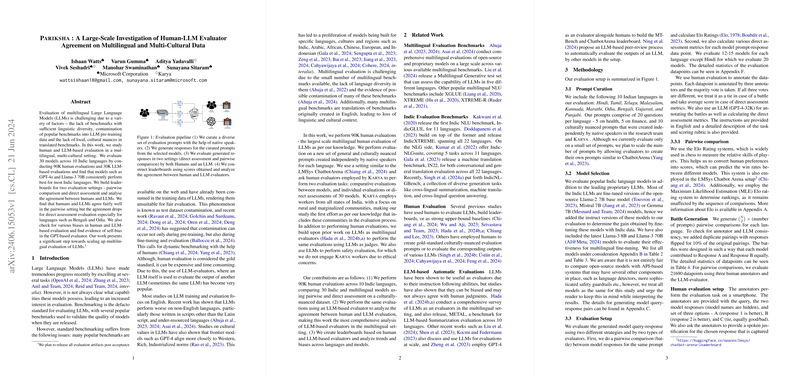

"Pariksha: A Large-Scale Investigation of Human-LLM Evaluator Agreement on Multilingual and Multi-Cultural Data" presents an extensive study of LLMs evaluated across diverse languages and cultural contexts. The evaluation covered 30 models in 10 Indic languages and drew on 90,000 human and 30,000 LLM evaluations. The study is methodologically rigorous and uses two main evaluation strategies. The first is pairwise comparison, with models ranked using the Elo rating system. The second is direct assessment, in which individual responses are rated for linguistic acceptability, task quality, and hallucination.

Methodology

Prompt Curation and Model Selection

The paper focused on 10 Indian languages: Hindi, Tamil, Telugu, Malayalam, Kannada, Marathi, Odia, Bengali, Gujarati, and Punjabi. Prompts were curated by native speakers and comprised general questions as well as culturally nuanced ones. The models evaluated included proprietary LLMs (e.g., GPT-4o, Gemini-Pro 1.0), open-source models (e.g., Llama-3 70B, Mistral 7B), and models fine-tuned for Indic languages (e.g., SamwaadLLM, AryaBhatta-GemmaOrca).

Evaluation Strategies

- Pairwise Comparison (Elo Rating):

  - Each model's responses were compared with other models' responses in a pairwise fashion.

  - The outcomes were aggregated into Elo ratings, a relative ranking in which models that win more match-ups rank higher.

  - Both human annotators and an LLM evaluator (GPT-4-32K) made these comparisons.

- Direct Assessment:

  - Annotators rated individual responses for linguistic acceptability (LA), task quality (TQ), and hallucinations (H).

  - The ratings were combined into a composite score used to rank models.

- Safety Evaluations:

  - Models were assessed for toxic or problematic content using the RTP-LX Hindi dataset, with GPT-4 as the evaluator.
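The following minimal Python sketch shows how pairwise verdicts can be turned into an Elo leaderboard using sequential Elo updates. The K-factor of 32, the starting rating of 1000, and the `(model_a, model_b, score_a)` match format are illustrative assumptions and are not settings taken from the paper. The model names are hypothetical placeholders. The paper may aggregate ratings differently, for example by averaging over shuffled match orders, and this sketch does not attempt that.

```python
from collections import defaultdict


def elo_ratings(matches, k=32.0, base=1000.0, scale=400.0):
    """Compute Elo ratings from a sequence of pairwise outcomes (illustrative sketch).

    Each match is (model_a, model_b, score_a): score_a is 1.0 if model_a's
    response was preferred, 0.0 if model_b's was, and 0.5 for a tie.
    k, base and scale are assumed defaults, not values from the paper.
    """
    ratings = defaultdict(lambda: base)  # unseen models start at the base rating
    for a, b, score_a in matches:
        # Expected score of A against B under the logistic Elo model.
        expected_a = 1.0 / (1.0 + 10 ** ((ratings[b] - ratings[a]) / scale))
        delta = k * (score_a - expected_a)
        ratings[a] += delta  # A gains what B loses, so the total is conserved
        ratings[b] -= delta
    return dict(ratings)


# Toy verdicts between hypothetical models (not data from the paper).
toy_matches = [
    ("model_x", "model_y", 1.0),
    ("model_y", "model_z", 0.5),
    ("model_x", "model_z", 1.0),
]
for name, rating in sorted(elo_ratings(toy_matches).items(), key=lambda kv: -kv[1]):
    print(f"{name}: {rating:.1f}")
```

Each update moves the same number of points from one model to the other, so the sum of all ratings stays constant. Sequential Elo is also sensitive to the order in which matches are processed. Averaging over several random orderings is a common way to reduce that sensitivity.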

Results and Analysis

Leaderboards and Performance

Pairwise Comparison (Elo Leaderboard):

- GPT-4o and Llama-3 70B consistently ranked highest across multiple languages.

- Fine-tuned Indic models like SamwaadLLM showed superior performance compared to their baselines.

Direct Assessment:

- Similar trends were observed, with GPT-4o leading most language-specific evaluations.

- The LLM evaluator typically assigned higher scores than human annotators did, making it more lenient (optimistic) in its judgments.
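This summary does not say how the LA, TQ, and H ratings are combined into the composite score. The sketch below therefore makes an illustrative assumption. LA and TQ are rated on a 0-2 scale and H is a 0/1 hallucination flag. Each rating is normalised to [0, 1], H is inverted so that higher is better, and the three values are averaged. The names `Rating`, `composite`, and `leaderboard` are hypothetical, and the toy ratings are invented rather than taken from the paper. Building a separate leaderboard for each evaluator type makes it easy to compare human and LLM scores for the same models.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Rating:
    model: str
    evaluator: str  # "human" or "llm"
    la: int         # linguistic acceptability, ASSUMED 0-2 scale
    tq: int         # task quality, ASSUMED 0-2 scale
    h: int          # hallucination flag, ASSUMED 0 (none) or 1 (present)


def composite(r: Rating) -> float:
    """Hypothetical composite in [0, 1]: mean of normalised LA, TQ and (1 - H)."""
    return (r.la / 2 + r.tq / 2 + (1 - r.h)) / 3


def leaderboard(ratings, evaluator):
    """Average composite score per model for one evaluator type, best first."""
    by_model = {}
    for r in ratings:
        if r.evaluator == evaluator:
            by_model.setdefault(r.model, []).append(composite(r))
    return sorted(((m, mean(s)) for m, s in by_model.items()),
                  key=lambda pair: pair[1], reverse=True)


# Invented toy ratings for two hypothetical models.
toy = [
    Rating("model_x", "human", 2, 1, 0),
    Rating("model_x", "llm", 2, 2, 0),
    Rating("model_y", "human", 1, 1, 1),
    Rating("model_y", "llm", 2, 1, 0),
]
print("human:", leaderboard(toy, "human"))
print("llm:  ", leaderboard(toy, "llm"))
```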

Agreement and Bias

Inter-annotator agreement was analyzed using Percentage Agreement (PA) and Fleiss' Kappa (κ). The correlation between human and LLM evaluators' rankings was also quantified using Kendall's Tau (τ). Key findings include:

- Pairwise Comparison: Agreement among human annotators was moderate (0.54), while human-LLM agreement was 0.49, indicating a fair level of alignment between the LLM evaluator and humans.

- Direct Assessment: Agreement dropped substantially for direct assessments, which suggests that this is a more challenging task for LLM evaluators.

- Language and Cultural Contexts: LLM evaluators showed lower agreement on culturally nuanced queries, highlighting the difficulty of capturing cultural context.
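The three agreement statistics named above can be computed with the Python standard library alone, as the following sketch shows. Here PA is taken to be the mean fraction of annotator pairs that agree on each item. This is one common definition and may differ from the paper's exact formulation. With this choice, PA coincides with the observed-agreement term of Fleiss' kappa. The `kendall_tau` function computes tau-a and assumes rankings without ties. All labels, model names, and ranks are toy values.

```python
from collections import Counter
from itertools import combinations


def percentage_agreement(items):
    """Mean share of agreeing annotator pairs per item (needs >= 2 labels per item)."""
    per_item = []
    for labels in items:
        pairs = list(combinations(labels, 2))
        per_item.append(sum(a == b for a, b in pairs) / len(pairs))
    return sum(per_item) / len(per_item)


def fleiss_kappa(items):
    """Fleiss' kappa for items that each received the same number of labels."""
    if not items:
        raise ValueError("need at least one item")
    n = len(items[0])  # raters per item
    if n < 2 or any(len(labels) != n for labels in items):
        raise ValueError("every item needs the same number (>= 2) of labels")
    counts = [Counter(labels) for labels in items]
    total = len(items) * n
    categories = set().union(*counts)
    p_bar = percentage_agreement(items)  # observed agreement
    # Chance agreement from the marginal proportion of each category.
    p_e = sum((sum(c[cat] for c in counts) / total) ** 2 for cat in categories)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when every label is identical")
    return (p_bar - p_e) / (1 - p_e)


def kendall_tau(rank_a, rank_b):
    """Kendall's tau-a between two rankings given as {model: rank}, no ties."""
    models = sorted(rank_a)
    if set(models) != set(rank_b):
        raise ValueError("rankings must cover the same models")
    concordant = discordant = 0
    for m1, m2 in combinations(models, 2):
        sign = (rank_a[m1] - rank_a[m2]) * (rank_b[m1] - rank_b[m2])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(models) * (len(models) - 1) // 2
    return (concordant - discordant) / n_pairs


# Toy pairwise verdicts ("A" wins, "B" wins, "tie") from three annotators per item.
verdicts = [["A", "A", "A"], ["A", "B", "A"], ["B", "B", "tie"], ["tie", "tie", "tie"]]
print("PA:", percentage_agreement(verdicts))
print("Fleiss' kappa:", fleiss_kappa(verdicts))
# Toy leaderboard ranks from human and LLM evaluators.
print("Kendall's tau:", kendall_tau({"m1": 1, "m2": 2, "m3": 3}, {"m1": 1, "m2": 3, "m3": 2}))
```

If tied ranks are possible, `scipy.stats.kendalltau` (which computes tau-b by default) is a more robust choice than this hand-rolled tau-a.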

Implications and Future Directions

This paper underscores the complexities involved in evaluating multilingual and multi-cultural data. The results show that fine-tuned Indic models perform competitively, while larger LLMs such as GPT-4o perform strongly across languages. The LLM evaluator agreed less with humans on cultural prompts and declared fewer ties than human annotators. For these reasons, the paper recommends incorporating human judgment in complex multilingual evaluation settings.

Future directions include expanding language coverage to other Indic and non-Indic languages, increasing prompt diversity, and improving LLM fine-tuning techniques. Moreover, exploring hybrid evaluation systems combining human and LLM assessments could enhance accuracy, reliability, and cultural sensitivity.

Limitations

The paper acknowledges certain limitations:

- Coverage was limited to 10 Indic languages.

- A relatively small set of prompts was evaluated.

- Some overlap of function and performance between open-source and API-based commercial systems was noted.

Ethical Considerations

The paper adhered to ethical guidelines, including approval from relevant institutional review boards. Human annotators were fairly compensated, and privacy concerns were meticulously addressed, especially in the context of the safety evaluations.

Conclusion

"Pariksha" offers a comprehensive and methodologically sound investigation into the performance of LLMs across multilingual and multi-cultural contexts. The paper’s insights into evaluator alignment, biases, and model performance trends are invaluable for the continued development of linguistically and culturally aware AI systems. This work sets the stage for future enhancements in LLM evaluations, emphasizing the necessity for nuanced, hybrid approaches to realize the full potential of AI in diverse linguistic landscapes.