Evaluating RAG-Fusion with RAGElo: an Automated Elo-based Framework

Abstract

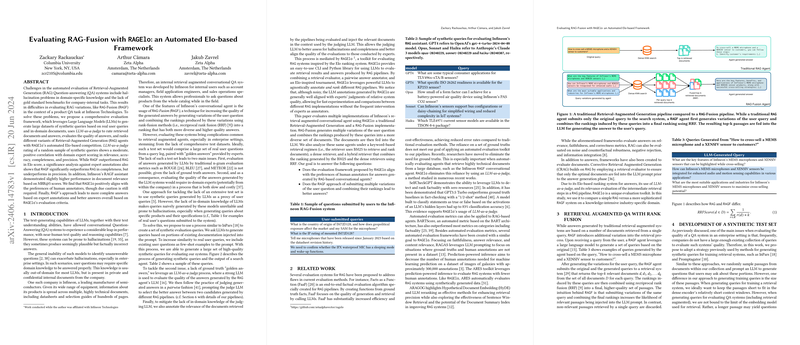

The paper addresses the evaluation of Retrieval-Augmented Generation (RAG) Question-Answering (QA) systems, focusing on domain-specific knowledge and on the difficulty of building gold-standard benchmarks for internal company tasks. The authors propose an evaluation framework that uses Large Language Models (LLMs) to generate synthetic queries and an LLM-as-a-judge to rate retrieved documents and generated answers. The framework then ranks RAG variants through RAGElo, an Elo-based competition. The evaluation highlights hallucination detection, the greater completeness of RAG-Fusion (RAGF) answers, and a positive correlation between LLM-as-a-judge ratings and human annotations.

Introduction

LLMs have driven a leap in conversational QA systems, but progress is constrained by hallucinations and by the inability to handle unanswerable questions reliably. These problems are exacerbated in enterprise settings, where domain-specific knowledge is pivotal. The paper details the challenges Infineon Technologies faces in managing its RAG-based conversational QA system and highlights RAGF, which combines multiple query variations to improve answer quality.

Related Work

Evaluation methods for RAG systems continue to evolve, with several efforts aimed at improving factuality evaluation and overall quality assessment. The paper cites FaaF, SelfCheckGPT, and CRAG as work that improves the fidelity of RAG evaluation. The LLM-as-a-judge paradigm has emerged as a robust alternative to traditional metrics that depend on ground-truth benchmarks.

Retrieval Augmented QA with Rank Fusion

RAGF differs from traditional RAG by generating multiple variations of the user query, retrieving documents for each variation, and merging the resulting rankings with Reciprocal Rank Fusion (RRF). This approach is posited to reduce the retrieval of irrelevant documents and improve the quality of the final answers. The paper sets out in detail the comparative advantages of RAGF over standard RAG configurations.
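RRF itself is short to state: each document scores the sum of 1 / (k + rank) over every ranked list it appears in. The Python sketch below fuses the rankings retrieved for several query variations. The constant k = 60 is the value commonly used for RRF; whether RAGF uses the same value is an assumption, and the document IDs are made up for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion.

    Each document receives sum(1 / (k + rank)) over every list it appears in,
    with ranks starting at 1. k=60 is an assumed, commonly used default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical rankings retrieved for three LLM-generated variations of one query.
rankings = [
    ["doc_a", "doc_b", "doc_c"],
    ["doc_b", "doc_a", "doc_d"],
    ["doc_b", "doc_c", "doc_a"],
]
fused = reciprocal_rank_fusion(rankings)
print([doc_id for doc_id, _ in fused])  # ['doc_b', 'doc_a', 'doc_c', 'doc_d']
```

Because RRF uses only ranks, not raw retrieval scores, it can merge lists whose scores are on incompatible scales, and documents that appear near the top of several lists rise above those that rank highly in only one.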

Development of a Synthetic Test Set

Because large real-world query logs are scarce, the authors use LLMs to generate synthetic queries from in-domain corporate documentation. The aim is a broader range of high-quality queries that reflect real user interactions more closely.
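A minimal sketch of this generation step, assuming the LLM is exposed as any function that maps a prompt string to a completion string. The prompt wording, the `generate_synthetic_queries` helper, and the `fake_llm` stub are all hypothetical; the authors' actual prompt is not reproduced here.

```python
import re
from typing import Callable, List

# Hypothetical prompt; not the authors' actual wording.
PROMPT_TEMPLATE = (
    "You are an employee reading the internal document below.\n"
    "Write {n} distinct questions a colleague might ask that this document "
    "can answer. Return one question per line.\n\n"
    "Document:\n{document}\n"
)


def generate_synthetic_queries(
    documents: List[str],
    complete: Callable[[str], str],
    n_per_doc: int = 3,
) -> List[str]:
    """Ask an LLM (any prompt -> text callable) for questions grounded in each document."""
    queries: List[str] = []
    seen = set()
    for document in documents:
        prompt = PROMPT_TEMPLATE.format(n=n_per_doc, document=document)
        for line in complete(prompt).splitlines():
            # Strip list markers such as "1." or "-" that LLMs often prepend.
            question = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
            # Skip empty lines and case-insensitive duplicates across documents.
            if question and question.lower() not in seen:
                seen.add(question.lower())
                queries.append(question)
    return queries


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call so the sketch runs offline.
    return "1. What is the maximum junction temperature?\n2. Which package options exist?\n"


queries = generate_synthetic_queries(["Example datasheet excerpt."], fake_llm, n_per_doc=2)
print(queries)  # ['What is the maximum junction temperature?', 'Which package options exist?']
```

Passing the LLM in as a plain callable keeps the sketch independent of any particular provider SDK.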

LLM-as-a-Judge for RAG Pipelines

RAGElo is a central contribution of the paper. It structures evaluation as a two-step process, first scoring the relevance of retrieved documents and then comparing answers pairwise, to mitigate the limitations of conventional evaluation methods. With this structured methodology, the LLM-as-a-judge aligns closely with human expert ratings.
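To show how pairwise verdicts can become an Elo ranking, here is a Python sketch using the standard Elo update: the expected score of A is 1 / (1 + 10^((R_B - R_A) / 400)), and each rating moves by K times (actual minus expected). The K-factor, starting rating, number of shuffled rounds, and the `elo_ratings` helper are illustrative assumptions, not RAGElo's documented defaults.

```python
import random
from typing import Dict, List, Tuple

# One judgment: (agent_a, agent_b, score_a), where score_a is 1 (A wins), 0.5 (tie), 0 (B wins).
Judgment = Tuple[str, str, float]


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_ratings(judgments: List[Judgment], k: float = 32.0, initial: float = 1000.0,
                rounds: int = 100, seed: int = 0) -> Dict[str, float]:
    """Average Elo ratings over several random orderings of the same judgments.

    Elo updates depend on the order of games, so shuffling and averaging
    reduces that dependence. k, initial, and rounds are assumed values.
    """
    rng = random.Random(seed)
    agents = {a for a, _, _ in judgments} | {b for _, b, _ in judgments}
    totals = {agent: 0.0 for agent in agents}
    for _ in range(rounds):
        ratings = {agent: initial for agent in agents}
        order = judgments[:]
        rng.shuffle(order)
        for a, b, score_a in order:
            exp_a = expected_score(ratings[a], ratings[b])
            ratings[a] += k * (score_a - exp_a)
            # B's update mirrors A's, so total rating is conserved.
            ratings[b] += k * ((1.0 - score_a) - (1.0 - exp_a))
        for agent in agents:
            totals[agent] += ratings[agent]
    return {agent: total / rounds for agent, total in totals.items()}


# Hypothetical verdicts: RAGF wins three games, ties one, and loses one against RAG.
judgments = [("RAGF", "RAG", 1.0)] * 3 + [("RAGF", "RAG", 0.5), ("RAGF", "RAG", 0.0)]
ratings = elo_ratings(judgments)
print({agent: round(r, 1) for agent, r in sorted(ratings.items())})
```

With more than two RAG variants, the same function ranks all of them from whatever pairs the judge compared.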

Experimental Results

Quality of Retrieved Documents

Mean Reciprocal Rank at cutoff 5 (MRR@5) shows that RAGF consistently outperforms RAG. BM25 emerged as the strongest baseline retrieval method, pointing to possible limitations of off-the-shelf embedding-based search.
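MRR@5 averages, over queries, the reciprocal rank of the first relevant document among the top five results, counting 0 when none appears. The Python sketch below assumes binary relevance labels, for example obtained by treating documents the LLM judge scored at or above some threshold as relevant; that binarization and the toy data are assumptions.

```python
from typing import Dict, List, Set


def mrr_at_k(rankings: Dict[str, List[str]], relevant: Dict[str, Set[str]], k: int = 5) -> float:
    """Mean Reciprocal Rank@k over all ranked queries (0 for a query with no relevant doc in its top k)."""
    if not rankings:
        return 0.0
    total = 0.0
    for query_id, ranking in rankings.items():
        rel = relevant.get(query_id, set())
        for rank, doc_id in enumerate(ranking[:k], start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(rankings)


# Hypothetical retrieval output and relevance labels.
rankings = {
    "q1": ["d3", "d1", "d2"],                    # first relevant at rank 2 -> 0.5
    "q2": ["d5", "d6", "d7", "d8", "d9", "d4"],  # relevant doc at rank 6, outside top 5 -> 0
    "q3": ["d7"],                                # first relevant at rank 1 -> 1.0
}
relevant = {"q1": {"d1"}, "q2": {"d4"}, "q3": {"d7"}}
print(mrr_at_k(rankings, relevant))  # 0.5
```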

Pairwise Evaluation of Answers

RAGElo's pairwise assessments show a preference for RAGF over traditional RAG, particularly in completeness. Statistical analyses confirm this preference but also show that RAG excels in precision, revealing a trade-off between the two dimensions.
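This summary does not say which statistical test the authors used. As one simple illustration, an exact two-sided sign test checks whether a win/loss split between two systems is unlikely under equal preference, with ties excluded. The `sign_test` and `summarize` helpers and the verdict counts below are hypothetical.

```python
from math import comb
from typing import Dict, List


def sign_test(wins_a: int, wins_b: int) -> float:
    """Exact two-sided sign test p-value for H0: A and B are equally likely to win (ties excluded)."""
    n = wins_a + wins_b
    if n == 0:
        return 1.0
    extreme = min(wins_a, wins_b)
    # Probability, under p = 0.5, of a split at least this lopsided on one side.
    tail = sum(comb(n, i) for i in range(extreme + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def summarize(verdicts: List[str]) -> Dict[str, float]:
    """verdicts holds 'A', 'B', or 'tie' per query, e.g. from a pairwise LLM judge."""
    wins_a, wins_b, ties = verdicts.count("A"), verdicts.count("B"), verdicts.count("tie")
    return {"wins_a": wins_a, "wins_b": wins_b, "ties": ties,
            "p_value": sign_test(wins_a, wins_b)}


# Hypothetical completeness verdicts for RAGF (A) vs. RAG (B) on 12 queries.
verdicts = ["A"] * 9 + ["B"] * 1 + ["tie"] * 2
print(summarize(verdicts))  # {'wins_a': 9, 'wins_b': 1, 'ties': 2, 'p_value': 0.021484375}
```

Running the same summary separately on completeness and precision verdicts is one way to expose the kind of trade-off described above.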

Discussion and Conclusion

The paper concludes that RAGElo's evaluation framework agrees well with human annotators and supports the superiority of RAGF in delivering complete, detailed answers. However, the authors recommend taking into account domain-specific nuances and the precision demands of certain applications. Future work will explore how RAGF applies across different contexts and refine its implementation to improve retrieval and response accuracy.

The authors demonstrate that RAGElo, with its Elo-based ranking and LLM-as-a-judge methodology, offers a robust, scalable way to evaluate complex RAG systems. This should enable more nuanced, efficient, and reliable performance assessments and drive further advances in retrieval-augmented generation.