Exploring Design Choices for Building Language-Specific LLMs

Overview

This paper explores how to build language-specific LLMs by adapting existing monolingual and multilingual LLMs. Although LLM performance has advanced substantially, it still lags in many languages. The paper systematically investigates how three design choices (base model selection, vocabulary extension, and continued fine-tuning) affect both the efficiency and the end-task performance of adapted LLMs.

Key Findings

- Non-Indicative Initial Performance: The performance of a base LLM before adaptation does not necessarily predict its performance post-adaptation. For instance, monolingual English-centric models, despite initially poor performance on low-resource languages, can be adapted effectively through appropriate training.

- Efficiency Gains: Simple vocabulary extension and continued fine-tuning can enhance the efficiency of most LLMs, where efficiency is measured by the number of tokens needed to encode equivalent information. For example, extending the vocabulary by around 10K tokens can bridge the efficiency gap between English and low-resource languages.

- Language-Specific vs. Multilingual Models: The optimal adaptation strategy is highly dependent on the target language. Contrary to expectations, adapting English-centric models can yield superior results compared to adapting certain multilingual models.

The paper contributes foundational insights into the efficient adaptation of existing LLMs to build effective language-specific models.

Methodology

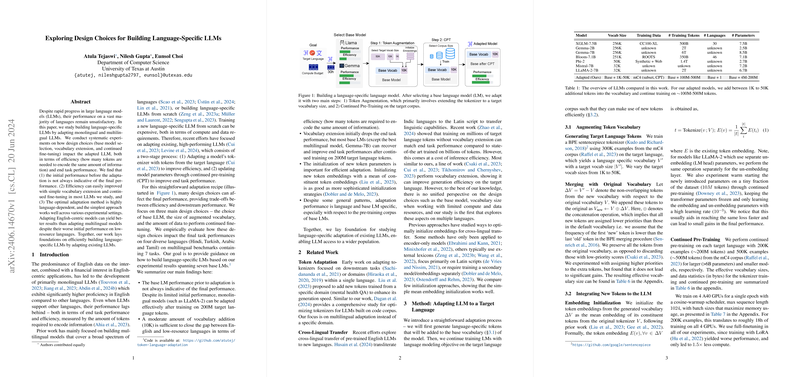

Token Vocabulary Augmentation

- Token Generation: The authors trained BPE SentencePiece tokenizers on 300K examples from the mC4 corpus in the target language, generating language-specific vocabularies of varying sizes (1K to 50K tokens).

- Vocabulary Merging: The new tokens from the target language were appended to the base vocabulary of the original model, ensuring that all tokens from the initial vocabulary were retained.
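The merging step above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `merge_vocab` and the toy token lists are hypothetical, and a real pipeline would operate on the tokenizer's model file rather than on plain Python lists.

```python
def merge_vocab(base_vocab, new_tokens):
    """Append unseen target-language tokens to the base vocabulary.

    Every base token keeps its original id, so pretrained embedding rows
    still line up; new tokens receive ids after the end of the base vocab.
    """
    token_to_id = {tok: i for i, tok in enumerate(base_vocab)}
    added = []
    for tok in new_tokens:
        if tok not in token_to_id:  # skip tokens the base model already has
            token_to_id[tok] = len(token_to_id)
            added.append(tok)
    return token_to_id, added


# Toy data (hypothetical): a tiny base vocabulary and a few tokens
# learned from target-language text.
base_vocab = ["<unk>", "the", "k", "e", "d", "i"]
new_tokens = ["kedi", "the", "ev", "ler"]

merged, added = merge_vocab(base_vocab, new_tokens)
print(len(merged), added)
```

Because ids are only ever appended, the embedding matrix of the base model can be extended with new rows instead of being rebuilt.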

Continued Pre-Training (CPT)

- Embedding Initialization: The new tokens' embeddings were initialized as the mean of their constituent token embeddings. This simple initialization performed competitively with more complex strategies.

- Training Details: The models underwent continued training on a large corpus of the target language, with parameters optimized to maximize multilingual efficacy.
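The mean initialization described above can be illustrated with plain Python lists. This is a sketch under stated assumptions: `mean_init`, the character-level `base_tokenize` stand-in, and the 2-dimensional toy embeddings are all hypothetical; an actual implementation would use the base model's tokenizer and embedding matrix.

```python
def mean_init(new_token, base_tokenize, base_embeddings):
    """Initialize a new token's embedding as the mean of the embeddings
    of the pieces the base tokenizer splits it into."""
    piece_ids = base_tokenize(new_token)
    if not piece_ids:
        raise ValueError(f"base tokenizer produced no pieces for {new_token!r}")
    dim = len(base_embeddings[0])
    return [
        sum(base_embeddings[i][d] for i in piece_ids) / len(piece_ids)
        for d in range(dim)
    ]


# Toy setup (hypothetical): three base tokens with 2-dimensional embeddings
# and a character-level stand-in for the base tokenizer.
base_ids = {"a": 0, "b": 1, "c": 2}
base_embeddings = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]


def base_tokenize(text):
    return [base_ids[ch] for ch in text]


new_row = mean_init("abc", base_tokenize, base_embeddings)
print(new_row)  # [1.0, 1.0]
```

The new row starts inside the region of embedding space the model already uses, which gives continued training a reasonable starting point for the added tokens.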

Experimental Evaluation

The authors conducted extensive experiments to evaluate the impact of adaptation strategies on four diverse languages—Hindi, Turkish, Arabic, and Tamil—using several benchmarks:

- Machine Translation: Assessed with the FLORES-200 benchmark via 5-shot prompting.

- Text Summarization: Evaluated on the XL-Sum dataset by re-framing the task.

- Understanding Tasks: Measured with benchmarks such as mLAMA for knowledge probing, XNLI for natural language inference, sentiment analysis, and commonsense reasoning tasks such as XCOPA and XStoryCloze.

Results

- Efficiency: Vocabulary augmentation significantly reduced the token disparity between English and other languages. For instance, extending LLaMA-2 with 10K tokens substantially lowered fertility (the average number of tokens per word), reducing the sequence length needed to encode the same amount of information.

- End Task Performance: Adapted LLaMA-2-7B matched the performance of larger multilingual models on several tasks. Moreover, smaller multilingual models, such as Gemma-2B, demonstrated competitive performance post-adaptation.

- Optimal Design Choices: The paper finds that the balance between the size of the vocabulary extension and the amount of fine-tuning data is crucial. For instance, smaller vocabulary extensions yielded better performance with less continued training data, while larger vocabulary sizes required more data to achieve substantial performance gains.
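To make the efficiency result concrete, the sketch below computes fertility as the average number of tokens per whitespace-separated word, before and after a vocabulary extension. It is only an illustration: the greedy longest-match `tokenize` function is a simplified stand-in for a BPE tokenizer, and the vocabularies and sample text are hypothetical toy data, not figures from the paper.

```python
def tokenize(word, vocab):
    """Greedy longest-match segmentation; characters not covered by the
    vocabulary fall back to single-character tokens."""
    tokens, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):
            if word[i:j] in vocab or j == i + 1:
                tokens.append(word[i:j])
                i = j
                break
    return tokens


def fertility(text, vocab):
    """Average number of tokens per whitespace-separated word."""
    words = text.split()
    if not words:
        raise ValueError("text contains no words")
    return sum(len(tokenize(w, vocab)) for w in words) / len(words)


# Toy vocabularies (hypothetical): an English-centric base vocabulary and
# the same vocabulary extended with a few target-language tokens.
base_vocab = {"the", "cat"}
extended_vocab = base_vocab | {"kedi", "ev", "de"}

text = "kedi evde"
print(fertility(text, base_vocab))      # 4.0
print(fertility(text, extended_vocab))  # 1.5
```

In this toy case the base vocabulary falls back to one token per character, while the extended vocabulary encodes the same text in fewer than half as many tokens. This is the effect the efficiency result describes at a larger scale.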

Implications and Future Directions

This research highlights the need for pragmatic adaptation strategies tailored to linguistic diversity. By demonstrating how simple adaptations can significantly enhance model efficiency and performance, the paper sets the stage for more inclusive, multilingual AI technologies. Future research could expand in several directions:

- Broader Language Coverage: Extending the study to more languages, especially under-resourced ones, would help generalize these findings.

- Refining Tokenization Approaches: Exploring advanced tokenization methods beyond BPE could yield further efficiency gains.

- Cross-Lingual Knowledge Transfer: Investigating the mechanisms of knowledge transfer in multilingual settings could enhance the effectiveness of CPT.

In conclusion, this paper provides pivotal insights into the adaptation of LLMs for specific languages, laying the groundwork for creating more efficient and performant language-specific LLMs.