Whiteboard-of-Thought: Thinking Step-by-Step Across Modalities

Abstract

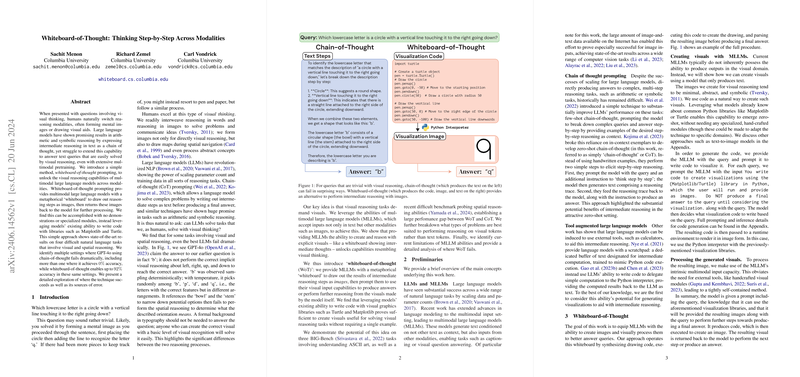

The paper Whiteboard-of-Thought: Thinking Step-by-Step Across Modalities introduces "whiteboard-of-thought" (WoT), a technique that gives multimodal LLMs (MLLMs) a way to reason visually. On tasks that involve visual and spatial reasoning, such as the ASCII-art tasks from BIG-Bench and spatial navigation benchmarks, WoT substantially outperforms the chain-of-thought (CoT) prompting typically used with LLMs.

Introduction

This paper builds on recent advances in multimodal LLMs to address a gap between visual and textual reasoning. Humans naturally reach for diagrams and other visual aids when solving complex problems, but LLMs, including GPT-4o, show clear limitations out of the box on tasks that require visual interpretation. WoT aims to emulate human-like visual thinking by leveraging abilities MLLMs already have: writing code that produces images, and interpreting the images that result.

Previous Work

The paper situates itself within recent work on the reasoning capabilities of LLMs. Chain-of-thought prompting has substantially improved model performance on arithmetic and symbolic reasoning tasks but falls short on visual reasoning. Prior tool-augmented LLMs, such as those that call external calculators for arithmetic, have not extended tool use to producing and interpreting visual data.

Whiteboard-of-Thought Methodology

The core of WoT is having the model create visual aids and then reason over them. The MLLM is prompted to write Python code that draws a visualization; the code is executed, and the resulting image is passed back to the model, which uses it to complete the reasoning task. The approach is zero-shot: it requires no additional training data or specialized modules, relying instead on the model's existing ability to write code for libraries such as Matplotlib and Turtle.
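
The loop described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: `write_code` and `read_image` are hypothetical callables standing in for calls to an MLLM, and the small `Canvas` class is a dependency-free stand-in for Matplotlib or Turtle, whose output would normally be saved as an image file and sent to the model.

```python
from typing import Callable, List

# Hypothetical stand-in for a plotting library such as Matplotlib or Turtle:
# a small monochrome bitmap where 1 means "ink" and 0 means "blank".
class Canvas:
    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.pixels: List[List[int]] = [[0] * width for _ in range(height)]

    def plot(self, x: int, y: int) -> None:
        # Silently ignore points that fall outside the canvas.
        if 0 <= x < self.width and 0 <= y < self.height:
            self.pixels[y][x] = 1


def whiteboard_of_thought(
    question: str,
    write_code: Callable[[str], str],
    read_image: Callable[[List[List[int]], str], str],
    width: int = 32,
    height: int = 32,
) -> str:
    """Run one text -> code -> image -> answer round.

    `write_code` and `read_image` are hypothetical wrappers around an MLLM;
    they are not part of any real library API.
    """
    # 1. Ask the model for drawing code that visualizes the question.
    source = write_code(question)
    # 2. Execute that code with a fresh canvas in scope.
    #    exec() is NOT a sandbox; untrusted model code needs real isolation.
    canvas = Canvas(width, height)
    exec(source, {"canvas": canvas})
    # 3. Return the rendered image to the model together with the question.
    return read_image(canvas.pixels, question)
```

For instance, a stub `write_code` that returns `for i in range(5): canvas.plot(i, i)` draws a short diagonal, which a stub `read_image` can then inspect. Keeping the model behind two plain callables makes the three stages (code, render, read) easy to test separately.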

Experimental Setup

The paper evaluates WoT in two primary domains: ASCII art interpretation tasks from BIG-Bench and spatial navigation tasks from recent benchmarks. Each domain poses its own visual and spatial reasoning challenges, which expose the limitations of text-only CoT and highlight the improvements WoT achieves.
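
To see why rendering can help on the ASCII tasks, note that a text-only model receives ASCII art as a one-dimensional token sequence, so the vertical alignment between lines is only implicit in the positions of newline characters. Rendering the same characters as pixels restores the 2D layout. The sketch below is an illustrative, dependency-free assumption rather than the paper's code: the hypothetical `ascii_to_pbm` helper writes the art as a plain PBM (P1) bitmap in which every non-space character becomes a black pixel.

```python
def ascii_to_pbm(art: str, scale: int = 1) -> str:
    """Render ASCII art as a plain PBM (P1) bitmap.

    Every non-space character becomes a black pixel (1) and every space a
    white pixel (0); each character is enlarged to a scale x scale block.
    Ragged lines are right-padded with spaces so all rows share one width.
    """
    if scale < 1:
        raise ValueError("scale must be a positive integer")
    lines = art.splitlines()
    width = max((len(line) for line in lines), default=0)
    rows = []
    for line in lines:
        bits = ["0" if ch == " " else "1" for ch in line.ljust(width)]
        # Repeat each bit horizontally, then repeat the whole row vertically.
        row = " ".join(bit for bit in bits for _ in range(scale))
        rows.extend([row] * scale)
    header = f"P1\n{width * scale} {len(lines) * scale}\n"
    return header + "".join(row + "\n" for row in rows)
```

Saving the returned string to a file with a `.pbm` extension produces an image that Netpbm-compatible viewers can open; `scale` enlarges each character so that small art stays legible.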

Results

The results show a stark contrast between text-only CoT and WoT. For example:

- In the ASCII understanding tasks, WoT achieved up to 66.4% accuracy in word recognition and 73.8% in kanji recognition, compared to the near-zero accuracy of CoT in these visually intensive tasks.

- In spatial navigation tasks, WoT exhibited significant gains in non-grid geometries, such as hexagonal structures, achieving 61% accuracy compared to 8% with CoT.
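
For the spatial navigation results, one difficulty with hexagonal layouts is that adjacency no longer follows the familiar up/down/left/right pattern of a square grid, which may be harder to track in text alone. The sketch below shows the kind of coordinate bookkeeping a model might write before plotting a hexagonal layout. It assumes, purely for illustration, that a task is posed as a sequence of moves between neighboring hexagons; the direction names and the axial-coordinate scheme are assumptions, not the benchmark's format.

```python
import math
from typing import Iterable, List, Tuple

# Axial (q, r) offsets to the six neighbors of a pointy-top hexagon.
# The direction names are illustrative assumptions, not the benchmark's wording.
HEX_DIRECTIONS = {
    "east": (1, 0),
    "west": (-1, 0),
    "northeast": (1, -1),
    "northwest": (0, -1),
    "southeast": (0, 1),
    "southwest": (-1, 1),
}


def walk(moves: Iterable[str]) -> List[Tuple[int, int]]:
    """Return the axial coordinates visited, starting from the origin."""
    q, r = 0, 0
    path = [(q, r)]
    for move in moves:
        dq, dr = HEX_DIRECTIONS[move]
        q, r = q + dq, r + dr
        path.append((q, r))
    return path


def to_cartesian(q: int, r: int, size: float = 1.0) -> Tuple[float, float]:
    """Map axial coordinates to hexagon centers for plotting.

    Uses the standard pointy-top layout; y grows downward, as in screen
    coordinates, so "north" moves have negative y.
    """
    x = size * math.sqrt(3) * (q + r / 2)
    y = size * 1.5 * r
    return x, y
```

Passing the `to_cartesian` points to a plotting call such as Matplotlib's `plot` would draw the path. Because the six direction vectors sum to zero, walking each direction once returns to the start, and every step covers the same distance, facts that are obvious in a picture but easy to lose in text.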

Error Analysis

A detailed error analysis on the ASCII MNIST task attributes most errors to visual perception rather than to code generation or visualization, highlighting limitations in the MLLM's visual recognition. This suggests that improvements in the visual processing of MLLMs could directly strengthen WoT.
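
The error attribution described above amounts to assigning each failed example to one stage of the pipeline. One plausible bookkeeping scheme, sketched below under stated assumptions, blames the earliest stage that went wrong: code that fails to run counts as a code-generation error, a rendered image that misrepresents the input counts as a visualization error, and a faithful image that still yields a wrong answer counts as a perception error. The `Failure` record and its fields are hypothetical, and the paper's actual annotation protocol may differ.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Failure:
    """Hypothetical annotation for one example WoT answered incorrectly."""
    code_ran: bool        # did the generated code execute without error?
    image_faithful: bool  # does the rendered image correctly depict the input?


def attribute(failure: Failure) -> str:
    """Blame the earliest pipeline stage that went wrong."""
    if not failure.code_ran:
        return "code generation"
    if not failure.image_faithful:
        return "visualization"
    # The code ran and the image was right, yet the answer was still wrong.
    return "visual perception"


def error_breakdown(failures: List[Failure]) -> Dict[str, float]:
    """Fraction of failures attributed to each stage."""
    if not failures:
        return {}
    counts = Counter(attribute(f) for f in failures)
    return {stage: n / len(failures) for stage, n in counts.items()}
```

Ordering the checks this way keeps the categories mutually exclusive, so the fractions always sum to one.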

Implications and Future Work

The research has several implications:

- On the practical side, stronger multimodal reasoning could broaden the range of applications for MLLMs in fields that require visual comprehension, such as medical imaging and autonomous navigation.

- On the theoretical side, WoT could serve as a foundation for further studies on integrating higher-dimensional reasoning capabilities into LLMs.

Speculatively, future work could integrate more sophisticated visualization tools or apply WoT in areas such as augmented reality and complex interactive virtual environments. As the computer vision capabilities of MLLMs improve, WoT is likely to become even more powerful and versatile.

Conclusions

The whiteboard-of-thought method offers a compelling way to improve the visual reasoning of multimodal LLMs. By bridging the gap between textual and visual reasoning, WoT is a significant step toward more holistic, human-like problem solving in AI systems. The findings point to cross-modal integration as a fruitful direction for future research and applications.

Limitations

WoT currently depends on the MLLM's existing visual perception, which is still evolving. Domains such as geometry, where precise visual understanding is crucial, remain challenging. Future advances in the computer vision capabilities of MLLMs could further improve WoT's performance and utility across diverse applications.

Acknowledgments

The research was supported in part by the NSF AI Institute for Artificial and Natural Intelligence, the DARPA ECOLE program, an NSF CAREER Award, and a fellowship from Amazon.