Comprehensive Evaluation Framework for Clinical Diagnostics: An Analysis of ClinicalLab

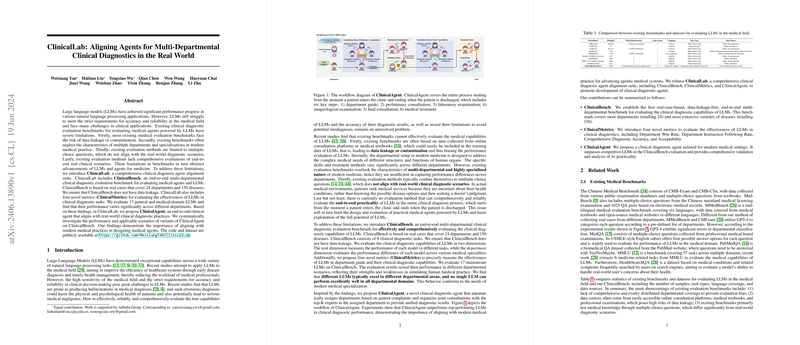

The paper "ClinicalLab: Aligning Agents for Multi-Departmental Clinical Diagnostics in the Real World" presents an extensive investigation into the application of large language models (LLMs) to multi-disciplinary medical diagnostics, addressing key shortcomings in current methodologies and evaluation data. It introduces ClinicalLab, a multifaceted framework comprising a new benchmark, new metrics, and an agent designed for real-world clinical diagnostics.

At the heart of ClinicalLab is ClinicalBench, a novel benchmark designed to cover end-to-end clinical diagnostic scenarios across 24 departments and 150 diseases. The benchmark is built from real-world data, which circumvents data leakage and provides a more robust basis for evaluating LLMs in medical diagnostics. With 1,500 detailed cases, ClinicalBench challenges models with tasks spanning department guidance, clinical diagnosis, and imaging diagnosis, a breadth that previous medical benchmarks lack because they typically restrict evaluation to multiple-choice questions with potential biases.

ClinicalMetrics, a suite of four novel metrics, complements ClinicalBench by offering fine-grained assessments of LLMs' capabilities, focusing in particular on department navigation accuracy, diagnostic thoroughness, and linguistic quality. The metrics highlight how LLM performance varies across departments, a reflection of the specialized nature of modern medicine.
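To make the idea of a per-department breakdown concrete, the following is a minimal sketch of how department navigation accuracy could be tallied by department. It assumes a simple exact-match criterion and a hypothetical record format of `(true_department, predicted_department)` pairs; the paper's actual metric definitions are not reproduced here, and the toy data is made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_department(
    records: Iterable[Tuple[str, str]],
) -> Dict[str, float]:
    """Return exact-match accuracy grouped by the true department.

    Each record is a (true_department, predicted_department) pair.
    This grouping and the exact-match rule are assumptions made for
    illustration, not the paper's definition of its metrics.
    """
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for true_dept, predicted_dept in records:
        totals[true_dept] += 1
        if predicted_dept == true_dept:
            hits[true_dept] += 1
    return {dept: hits[dept] / totals[dept] for dept in totals}


# Toy, made-up predictions (not data from ClinicalBench).
example_records = [
    ("cardiology", "cardiology"),
    ("cardiology", "neurology"),
    ("neurology", "neurology"),
]
per_department = accuracy_by_department(example_records)
```

Reporting one score per department, rather than a single aggregate, is what lets differences between specialties become visible.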

In evaluating 17 LLMs on ClinicalBench, the research finds significant variability in performance, with general LLMs like InternLM2 demonstrating better aggregate results than specialized medical models, such as medical variants of GPT-4. This finding points to a critical insight: the deep specialization required in medical diagnostics challenges existing AI models, even those with domain-specific training. The inability of any single LLM to excel across all departments indicates an opportunity for future advances in model specialization or hybrid collaboration.

The paper further introduces ClinicalAgent, a diagnostic agent that builds on the evaluations described above. ClinicalAgent uses a dynamic allocation strategy that selects the best-performing model for each diagnostic task within a department, mirroring contemporary multi-disciplinary clinical practice. Evaluations show that this approach yields better diagnostic outcomes than existing single-model approaches, reaching a total acceptability of 18.22% in configurations that allow widespread departmental collaboration.
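The allocation idea can be sketched as a lookup table built from per-department benchmark scores. This is an illustrative sketch under stated assumptions, not ClinicalAgent's actual implementation: the model names, the scores, and the helper functions `build_routing_table` and `select_model` are all hypothetical.

```python
from typing import Dict

# Hypothetical per-department scores for two placeholder models.
# The numbers are invented for illustration and do not come from the paper.
SCORES: Dict[str, Dict[str, float]] = {
    "cardiology": {"model_a": 0.41, "model_b": 0.37},
    "neurology": {"model_a": 0.29, "model_b": 0.35},
}


def build_routing_table(scores: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Map each department to the model with the highest score there."""
    return {
        dept: max(by_model, key=by_model.get)
        for dept, by_model in scores.items()
    }


def select_model(department: str, routing: Dict[str, str], default: str) -> str:
    """Pick the routed model, falling back to a default for unseen departments."""
    return routing.get(department, default)


ROUTING = build_routing_table(SCORES)
chosen = select_model("neurology", ROUTING, default="model_a")
```

In this sketch the routing table is computed once from prior evaluation results, so choosing a model for a new case is a constant-time lookup. A fallback model covers departments that were never benchmarked.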

The implications of this paper are profound for the advancement of AI in healthcare. Firstly, the dataset's breadth and detailed evaluation metrics offer a more reliable standard for LLMs in a sensitive domain like healthcare, addressing data contamination concerns. Secondly, the research underscores a need for complex system designs, integrating multiple models for varied tasks, and suggests a pathway for realigning AI systems with human-like expertise specialization. Finally, ClinicalBench and ClinicalAgent serve as cornerstone contributions for further research, potentially prompting innovations in both AI model training and the practical applications of AI in clinical environments.

However, the paper acknowledges limitations such as region-specific data and the lack of direct comparisons with other agents due to distinct design constraints. Future research could explore incorporating multilingual datasets and testing advanced collaboration of multiple AI models for more comprehensive real-world applications.

Through ClinicalLab, the paper paves the way for developing and validating next-generation medical agents, positioning itself as a crucial step towards realizing reliable and effective AI solutions within clinical settings.