A Hopfieldian View-based Interpretation for Chain-of-Thought Reasoning

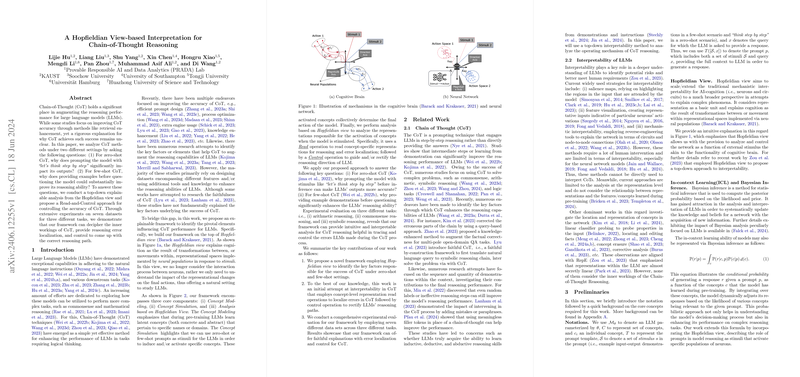

Chain-of-Thought (CoT) methods amplify the reasoning capabilities of large language models (LLMs), yet a rigorous theoretical explanation for their effectiveness remains elusive. The paper "A Hopfieldian View-based Interpretation for Chain-of-Thought Reasoning" by Hu et al. addresses this gap with a structured framework, grounded in the Hopfieldian view, that explains how CoT works in zero-shot and few-shot settings.

Core Objective and Motivation

The principal aim of the paper is to uncover the underlying factors that make CoT effective in enhancing logical reasoning in LLMs. This involves tackling two primary questions:

- Why does the prompt "let's think step by step" significantly improve zero-shot CoT outputs?

- Why do example demonstrations before querying enhance reasoning in few-shot CoT?

The authors propose an explainable framework derived from the Hopfieldian view, which posits cognition as the result of transformations within representational spaces created by neural populations in response to stimuli.

Proposed Framework

The framework suggested by the authors comprises three main components:

- Concept Modeling

- Concept Simulation

- Analysis based on Hopfieldian View

Concept Modeling

During pre-training, LLMs learn latent concepts, both concrete and abstract, related to specific domains. Concrete concepts might involve names or domains, while abstract ones include notions like "positive language" or "careful reasoning."

Concept Simulation

Zero-shot or few-shot CoT prompts serve as stimuli that trigger these concepts. In the CoT setting, a stimulus plays a role analogous to the one that, in the Hopfieldian view, activates specific neural populations in the brain.
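To make the two kinds of stimuli concrete, here is a minimal sketch of how a zero-shot and a few-shot CoT prompt might be assembled. The function names and the `Q:`/`A:` layout are illustrative assumptions, not the prompt templates used by Hu et al.

```python
# Hypothetical helpers for building CoT stimuli; the names and the
# "Q:/A:" layout are assumptions made for illustration only.

ZERO_SHOT_TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question: str) -> str:
    """Append the zero-shot trigger phrase to the question."""
    return f"Q: {question}\nA: {ZERO_SHOT_TRIGGER}"


def few_shot_cot_prompt(question: str, demonstrations: list[tuple[str, str]]) -> str:
    """Prepend worked (question, rationale) demonstrations to the query."""
    blocks = [f"Q: {q}\nA: {rationale}" for q, rationale in demonstrations]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demos = [("What is 1 + 1?", "1 plus 1 equals 2. The answer is 2.")]
    print(zero_shot_cot_prompt("What is 2 + 3?"))
    print()
    print(few_shot_cot_prompt("What is 2 + 3?", demos))
```

In both cases the model and the question are unchanged; only the surrounding text differs. That difference is what the framework treats as the stimulus.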

Analysis and Control

This phase involves two operations:

- Read Operation: It reads representations to localize errors in CoT reasoning.

- Control Operation: It adjusts the reasoning direction by guiding the activation of specific concepts.
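The two operations can be sketched on toy vectors. The example below is a simplified illustration, not the authors' implementation: it estimates a concept direction as a normalized difference of mean hidden states (a stand-in chosen for brevity), reads each reasoning step by projecting onto that direction, and controls a step by shifting its hidden state along the direction. All function names, the toy data, and the `strength` parameter are assumptions.

```python
import math

# Toy sketch of read/control on plain Python lists. The difference-of-means
# direction is a simplification chosen for this example; it is not claimed
# to be the procedure used in the paper.


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def concept_direction(with_stimulus, without_stimulus):
    """Unit vector pointing from 'no stimulus' states to 'stimulus' states."""
    diff = [p - q for p, q in zip(mean_vector(with_stimulus),
                                  mean_vector(without_stimulus))]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0:
        raise ValueError("the two groups of hidden states have identical means")
    return [d / norm for d in diff]


def read_scores(step_states, direction):
    """Read: project each reasoning step's hidden state onto the direction."""
    return [sum(h * d for h, d in zip(state, direction)) for state in step_states]


def locate_weakest_step(step_states, direction):
    """Index of the step with the lowest concept score (a candidate error)."""
    scores = read_scores(step_states, direction)
    return min(range(len(scores)), key=scores.__getitem__)


def control(hidden_state, direction, strength):
    """Control: shift a hidden state along the concept direction."""
    return [h + strength * d for h, d in zip(hidden_state, direction)]


if __name__ == "__main__":
    direction = concept_direction([[2.0, 0.0], [4.0, 0.0]],
                                  [[1.0, 0.0], [1.0, 0.0]])
    steps = [[0.9, 0.1], [-0.5, 0.3], [0.7, 0.0]]
    weakest = locate_weakest_step(steps, direction)
    print(weakest, control(steps[weakest], direction, strength=2.0))
```

In a real model the hidden states would come from a chosen transformer layer, and the shift would be applied during generation. Those details are omitted here.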

Experimental Validation

The authors conducted extensive experiments on seven datasets across three tasks: arithmetic reasoning (GSM8K, SVAMP, AQuA), commonsense reasoning (StrategyQA, CSQA), and symbolic reasoning (Coin Flip, Random Letter).

Key observations from the experiments include:

- The proposed framework notably improves accuracy in arithmetic and symbolic reasoning tasks under both zero-shot and few-shot configurations.

- For example, in the zero-shot setting on the SVAMP dataset, the proposed method improved accuracy by approximately 4% for Mistral-7B-instruct.

- In few-shot CoT settings, the framework helped correct reasoning paths; with LLaMA-2-7B-chat, for example, it achieved a 2.95% improvement on the CSQA dataset.

The paper also highlights the phenomenon of "stereotyping" in few-shot CoT, where LLMs may wrongly reinforce their reasoning paths due to influence from the few examples supplied. This indicates that, while few-shot learning can direct models towards a specific reasoning style, it may also mislead the reasoning process.

Practical and Theoretical Implications

The proposed Hopfieldian framework provides a novel approach for understanding and controlling the reasoning processes of LLMs. It improves the reliability and accuracy of CoT reasoning and also enables error localization and correction, both of which are critical for improving the transparency and interpretability of LLMs. The authors' methodology holds promise for future research on refining the reasoning capabilities of LLMs and on extending the framework to multi-modal scenarios.

Conclusion

Hu et al.'s work provides a well-founded theoretical framework for interpreting and enhancing CoT reasoning in LLMs. By leveraging the Hopfieldian view, it bridges the gap between cognitive neuroscience and artificial intelligence. This interpretative framework offers strong potential for both refining current reasoning techniques and guiding future developments in AI research, particularly in the domain of model interpretability and reasoning transparency.

Overall, the framework is a significant step towards demystifying the inner workings of LLMs, improving model performance, and laying a foundation for further research on how these models reason.