Iterative Length-Regularized Direct Preference Optimization: Enhancing 7B LLMs to Achieve GPT-4 Level Performance

The paper presents a refined technique for improving the alignment of LLMs with human preferences, utilizing a method known as Iterative Length-Regularized Direct Preference Optimization (iLR-DPO). The authors address a significant issue encountered in standard Direct Preference Optimization (DPO): the tendency towards increased verbosity in model outputs, which can be exacerbated in iterative training setups. This verbosity issue is particularly problematic when aiming to enhance response quality incrementally through online preference learning.

Methodology

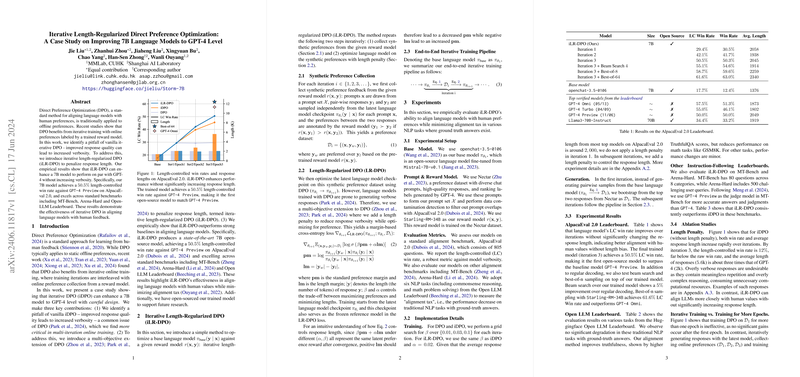

The approach employs a multi-objective optimization framework that incorporates a length penalty into the traditional DPO mechanism. By doing so, it seeks to mitigate the verbosity issue without compromising the alignment quality. The process involves iterative training cycles where synthetic preferences are harvested from a reward model. Each cycle consists of two primary steps: synthetic preference collection using a reward model and optimization of the LLM with a length penalty.

Critically, the adjustment introduces a margin-based cross-entropy loss function. This incorporates both a standard preference margin and a length margin, thereby providing a dual focus on maintaining response quality while managing response length.

Experimental Findings

The empirical results are notable. The model, identified as a 7B parameter LLM, reaches a 50.5% length-controlled win rate against the GPT-4 Preview in the AlpacaEval 2.0 evaluation, which includes an array of standard benchmarks like MT-Bench and Arena-Hard. This achievement marks it as the first open-source model to align closely with GPT-4 Preview under similar evaluation conditions, effectively matching its performance without falling into overly verbose outputs.

The paper highlights how iLR-DPO contributes to achieving a delicate balance between alignment to human feedback and maintaining reasonable computational resource demands—thereby minimizing what the authors term "alignment tax."

Implications and Future Directions

The implications of this work are multifaceted. Practically, the enhancement of a widely accessible 7B model to perform comparably to GPT-4-like standards represents a cost-effective way to democratize access to high-performing LLMs, broadening potential applications for users unable to afford proprietary solutions. Theoretically, the introduction of multi-objective alignment in LLMs opens avenues for further research in multi-dimensional preference optimization beyond verbosity concerns.

Speculating on future developments, this work suggests a burgeoning interest in devising more sophisticated reward models that incorporate nuanced human-like feedback facets beyond simple correctness or verbosity metrics. This could stimulate advancements in creating models that not only answer questions appropriately but also engage at higher levels of conversation dynamism and adherence to implicit social norms.

Conclusion

The paper contributes a meaningful step towards refining LLM alignment with human preferences by addressing verbosity and creating a balance in achieving performance standards comparable to state-of-the-art models such as GPT-4. The open-sourcing of the enhanced model is an enabling move that positions the research community to build upon these findings, exploring novel optimization spaces and deploying these in real-world contexts.