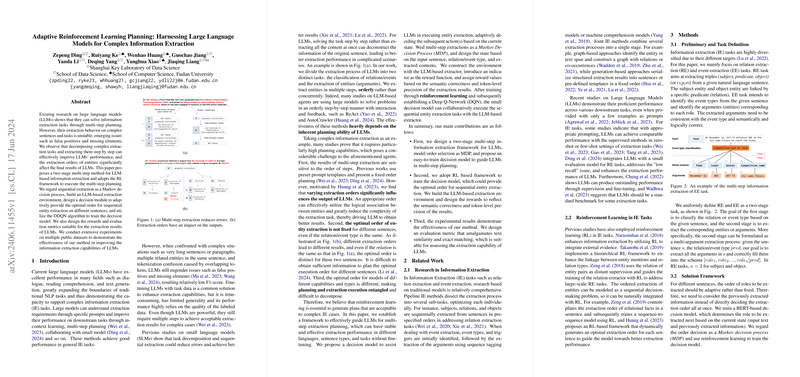

The paper introduces a novel two-stage, multi-step framework for information extraction (IE) that combines large language models (LLMs) with reinforcement learning (RL) to improve extraction performance on complex sentences and tasks. The approach targets the instability of LLMs in intricate extraction scenarios, where false positives and missing elements often arise.

The authors observe that decomposing complex extraction tasks into sequential steps and carefully ordering entity extraction can significantly improve LLM performance. The core idea is to treat sequential extraction as a Markov Decision Process (MDP), in which a decision model adaptively determines, for each sentence, the order in which entities are extracted. The decision model is trained with a Deep Q-Network (DQN)-style algorithm, specifically Double DQN (DDQN). The paper also introduces custom rewards and evaluation metrics for assessing LLM extraction results.

Here's a breakdown of the key components and contributions:

- Problem Statement: The paper addresses the challenge of unstable extraction behavior in LLMs when dealing with complex sentences and tasks in IE. This includes issues like false positives, missing elements, and sensitivity to the order of extraction.

- Proposed Solution: The authors propose a two-stage multi-step method for LLM-based IE, incorporating an RL framework to manage multi-step planning. This involves:

  - Decomposing the extraction task into relation/event classification and entity/argument extraction.

  - Modeling the sequential extraction process as an MDP.

  - Developing an LLM-based extraction environment.

  - Designing a decision module that adaptively determines the optimal order for sequential entity extraction.

  - Using the Double DQN (DDQN) algorithm to train the decision model.

  - Creating rewards and evaluation metrics suited to LLM extraction results.

- Methodology:

  - Framework Overview: The framework is a two-stage process. First, it classifies the relation or event type. Second, it extracts entities or arguments in multiple steps, guided by the decision model.

  - Markov Decision Process (MDP): Entity extraction order selection is modeled as an MDP. The state consists of the input sentence, the relation/event type, the content extracted so far, and the role schema. The action is the choice of the next role to extract, and the reward is based on the semantic correctness and token-level precision of the extraction results.

  - Decision Model: A BERT-based model is trained to estimate the value of (state, action) pairs. These estimates guide the environment (the LLM-based extractor) through sequential extraction. The model is trained with deep Q-learning, using the Double DQN (DDQN) variant of the Deep Q-Network.
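The following minimal Python sketch makes the MDP and the decision loop concrete under stated assumptions. It is not the authors' implementation. `State`, `select_role`, `step`, `run_episode`, and `double_dqn_target` are hypothetical names. Plain callables `q` and `extract` stand in for the BERT-based scorer and the LLM extractor. The role schema is represented simply by the tuple of roles not yet extracted, and the discount `gamma = 0.9` is an arbitrary placeholder. The sketch shows epsilon-greedy role selection by Q-value and the Double DQN target, in which the online network picks the next role and the target network scores it.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class State:
    """Hypothetical MDP state: sentence, type, extracted content, remaining roles."""
    sentence: str
    event_type: str
    extracted: Tuple[Tuple[str, str], ...]  # (role, argument) pairs extracted so far
    remaining_roles: Tuple[str, ...]        # schema roles not yet extracted (assumption)


QFn = Callable[[State, str], float]      # stand-in for the BERT-based Q(state, action)
ExtractFn = Callable[[State, str], str]  # stand-in for the LLM extractor (environment)


def select_role(state: State, q: QFn, epsilon: float = 0.0,
                rng: Optional[random.Random] = None) -> str:
    """Epsilon-greedy choice of the next role to extract."""
    r = rng if rng is not None else random.Random()
    if r.random() < epsilon:
        return r.choice(state.remaining_roles)  # explore
    return max(state.remaining_roles, key=lambda role: q(state, role))  # exploit


def step(state: State, role: str, extract: ExtractFn) -> State:
    """Environment transition: the extractor fills `role` and the state records it."""
    argument = extract(state, role)
    return State(
        sentence=state.sentence,
        event_type=state.event_type,
        extracted=state.extracted + ((role, argument),),
        remaining_roles=tuple(x for x in state.remaining_roles if x != role),
    )


def run_episode(initial: State, q: QFn, extract: ExtractFn, epsilon: float = 0.0,
                rng: Optional[random.Random] = None) -> Tuple[State, List[str]]:
    """Extract roles one at a time until none remain; return final state and order."""
    state, order = initial, []
    while state.remaining_roles:
        role = select_role(state, q, epsilon, rng)
        order.append(role)
        state = step(state, role, extract)
    return state, order


def double_dqn_target(reward: float, next_state: State, online_q: QFn,
                      target_q: QFn, gamma: float = 0.9) -> float:
    """Double DQN TD target: the online net selects the action, the target net scores it."""
    if not next_state.remaining_roles:  # every role extracted: terminal state
        return reward
    best = max(next_state.remaining_roles, key=lambda role: online_q(next_state, role))
    return reward + gamma * target_q(next_state, best)


if __name__ == "__main__":
    priority = {"attacker": 3.0, "victim": 2.0, "place": 1.0}  # toy Q-values
    start = State("A gunman attacked the market.", "Attack", (),
                  ("place", "victim", "attacker"))
    final, order = run_episode(start, lambda s, r: priority[r], lambda s, r: f"<{r}>")
    print(order)  # ['attacker', 'victim', 'place']
```

Separating action selection (online network) from action evaluation (target network) is what distinguishes Double DQN from vanilla DQN. The aim is to reduce the overestimation bias that comes from taking a max over noisy Q-estimates.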

Reward Function and Model: The reward function is an indicator function that assigns a reward of 1 to acceptable extraction results and 0 to incorrect ones. An LLM serves as the reward model and judges semantic correctness, with a token-level similarity score used as a threshold. Token-level similarity between the ground-truth and extracted arguments is measured with the TextF1 score:

Let $T_{\text{pred}}$ be the set of tokens in the predicted arguments, $T_{\text{gt}}$ the set of tokens in the ground-truth arguments, and $T_{\text{pred}} \cap T_{\text{gt}}$ the set of tokens that appear in both. Then:

$$
\text{TextP} = \frac{|T_{\text{pred}} \cap T_{\text{gt}}|}{|T_{\text{pred}}|}, \qquad
\text{TextR} = \frac{|T_{\text{pred}} \cap T_{\text{gt}}|}{|T_{\text{gt}}|}, \qquad
\text{TextF1} = \frac{2 \cdot \text{TextP} \cdot \text{TextR}}{\text{TextP} + \text{TextR}}
$$

Here TextP is the text precision, TextR is the text recall, and TextF1 is the text F1-score.
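The TextF1 metric and the 0/1 reward can be sketched in a few lines of Python. This is a hedged illustration. The whitespace tokenizer, the `threshold` of 0.5, and the `judge` callable that stands in for the LLM reward model are all assumptions. The way the two signals are combined is also an assumption: the sketch accepts a result when TextF1 reaches the threshold and otherwise defers to the judge, because the description above does not spell that out.

```python
from typing import Callable, Iterable, List, Optional, Tuple


def tokenize(text: str) -> List[str]:
    """Hypothetical tokenizer: lowercase whitespace split (Chinese data needs another scheme)."""
    return text.lower().split()


def text_prf(predicted: Iterable[str], ground_truth: Iterable[str]) -> Tuple[float, float, float]:
    """TextP, TextR and TextF1 computed on token sets."""
    pred, gold = set(predicted), set(ground_truth)
    common = pred & gold
    if not common:  # also covers an empty prediction or empty ground truth
        return 0.0, 0.0, 0.0
    p = len(common) / len(pred)
    r = len(common) / len(gold)
    return p, r, 2 * p * r / (p + r)


def indicator_reward(predicted: str, ground_truth: str, threshold: float = 0.5,
                     judge: Optional[Callable[[str, str], bool]] = None) -> int:
    """Return 1 for an acceptable extraction, else 0.

    Assumed combination: accept when TextF1 >= threshold; otherwise ask the
    optional LLM-based `judge` whether the answer is semantically correct.
    """
    _, _, f1 = text_prf(tokenize(predicted), tokenize(ground_truth))
    if f1 >= threshold:
        return 1
    if judge is not None and judge(predicted, ground_truth):
        return 1
    return 0


if __name__ == "__main__":
    print(text_prf(tokenize("the red car"), tokenize("red car")))  # P = 2/3, R = 1.0, F1 close to 0.8
    print(indicator_reward("blue truck", "red car"))                # 0
    print(indicator_reward("blue truck", "red car", judge=lambda p, g: True))  # 1
```

Because tokens are compared as sets, repeated tokens count once. A multiset (`collections.Counter`) intersection would be a reasonable alternative if duplicates matter.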

LLM Utilization: LLMs are used for relation/event classification and argument extraction without fine-tuning. For relation extraction (RE), roles are written as "subject/object: entity type" to give the LLM more context about each role.
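As a small illustration of the role design for relation extraction, the sketch below builds "subject/object: entity type" role strings from a relation schema and inserts one role into a single-step prompt. The `SCHEMA` entries, `relation_roles`, `build_prompt`, and the prompt wording are all hypothetical, and the paper's actual prompts are not reproduced here.

```python
from typing import Dict, List, Tuple

# Hypothetical relation schema: relation -> (subject entity type, object entity type).
SCHEMA: Dict[str, Tuple[str, str]] = {
    "founded_by": ("organization", "person"),
    "place_of_birth": ("person", "location"),
}


def relation_roles(relation: str, schema: Dict[str, Tuple[str, str]] = SCHEMA) -> List[str]:
    """Build 'subject/object: entity type' role strings for one relation."""
    subject_type, object_type = schema[relation]
    return [f"subject: {subject_type}", f"object: {object_type}"]


def build_prompt(sentence: str, relation: str, role: str) -> str:
    """Hypothetical single-step extraction prompt for one role."""
    return (f"Sentence: {sentence}\n"
            f"Relation: {relation}\n"
            f"Extract the argument for the role '{role}'. Answer with the text span only.")


if __name__ == "__main__":
    for role in relation_roles("founded_by"):
        print(build_prompt("Apple was founded by Steve Jobs.", "founded_by", role))
        print()
```

Adding the entity type to each role tells the extractor what kind of span to look for (for example, a person rather than any noun phrase). This is the extra context the role design is meant to provide.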

- Experiments and Results: The authors run experiments on public IE datasets: the relation extraction datasets NYT and Wiki80 and the event extraction datasets DuEE and ACE05. The proposed method outperforms existing prompt-based, fixed-order planning methods, with higher precision, recall, and F1 scores across various LLM extractors and datasets. The experiments also show that RL-based extraction order selection generally yields better results than fixed or random order selection.

- Key Contributions:

  - A two-stage, multi-step IE framework for LLMs that models order selection as an MDP.

  - An RL-based framework for training a decision model that provides the optimal order for sequential entity extraction.

  - A reward design that considers both semantic correctness and token-level matching.

  - Empirical evidence of the method's effectiveness on multiple datasets.

The paper concludes by highlighting the effectiveness and generalizability of the proposed RL-based multi-step planning method for enhancing the IE capabilities of LLMs. It also discusses limitations and future research directions, such as improving the reward model and exploring LLMs for order-decision tasks.