A Simple and Effective L2 Norm-Based Strategy for KV Cache Compression

The paper "A Simple and Effective L2 Norm-Based Strategy for KV Cache Compression" presents a novel approach to the large memory footprint of the Key-Value (KV) cache in large language models (LLMs), which grows as context lengths increase. The authors, Devoto et al., propose a heuristic that uses the L2 norm of key embeddings to compress the KV cache without sacrificing model accuracy.

Context and Motivation

In LLMs, particularly those built on decoder-only Transformer architectures, the KV cache stores the keys and values computed for past tokens so that they need not be recomputed at every generation step. This makes long contexts practical to handle, but the cache grows linearly with context length, which increases memory usage and decoding latency. The authors' primary motivation is to mitigate this problem without complex algorithms or significant modifications to the model architecture.
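The arithmetic below makes that growth concrete for a single sequence. The model dimensions (32 layers, 32 key/value heads, head dimension 128, fp16 storage) are assumed values matching a Llama-2-7B-style configuration, and `kv_cache_bytes` is a hypothetical helper written for illustration.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2, batch=1):
    """Bytes needed to cache keys and values for `seq_len` tokens.

    The leading factor of 2 counts one key vector and one value vector
    per token, per head, per layer.
    """
    return 2 * batch * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_elem


# Assumed Llama-2-7B-like configuration with fp16 (2-byte) storage.
CONFIG = dict(n_layers=32, n_kv_heads=32, head_dim=128, bytes_per_elem=2)

print(kv_cache_bytes(1, **CONFIG))  # 524288 bytes, i.e. 0.5 MiB per token
for seq_len in (4096, 32768):
    gib = kv_cache_bytes(seq_len, **CONFIG) / 2**30
    print(f"{seq_len} tokens -> {gib:.1f} GiB")  # 2.0 GiB, then 16.0 GiB
```

Because the size is linear in `seq_len`, retaining only half of the cached pairs in every layer and head halves these figures.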

Key Observations and Methodology

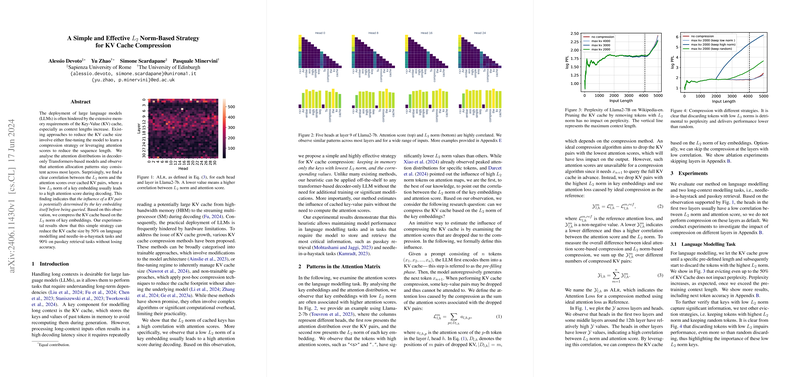

The core observation underpinning this work is a strong correlation between the L2 norm of key embeddings and the attention scores they receive during decoding. Analysis of attention distributions across multiple layers of popular LLMs such as Llama-2 shows that key embeddings with lower L2 norms generally receive higher attention scores. Based on this, the authors hypothesize that retaining only the keys with the lowest norms can compress the KV cache without substantial loss of critical information.
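One way to check this kind of relationship is to rank-correlate each cached key's L2 norm with the attention it receives from a query. The pure-Python sketch below does that with Spearman rank correlation; the helper names, the choice of Spearman, and the toy vectors are assumptions for illustration, not the paper's exact analysis procedure. A negative coefficient means lower-norm keys tend to receive more attention.

```python
import math


def l2_norm(vec):
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(x * x for x in vec))


def attention_scores(query, keys):
    """Softmax of scaled dot products q.k / sqrt(d) for a single query."""
    d = len(query)
    logits = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ranks(values):
    """Rank of each value (0 = smallest); assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order):
        result[i] = rank
    return result


def spearman(xs, ys):
    """Spearman rank correlation, using the closed form valid for tie-free data."""
    n = len(xs)
    rx, ry = ranks(xs), ranks(ys)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n * n - 1))


# Toy example constructed to mimic the reported pattern (not real model data):
# the smallest key points along the query, the larger ones point away from it.
query = [1.0, 0.0]
keys = [[0.5, 0.0], [0.0, 1.0], [-1.0, 1.0], [-2.0, 0.5]]
rho = spearman([l2_norm(k) for k in keys], attention_scores(query, keys))
print(rho)  # -1.0: here every lower-norm key receives more attention
```

On real activations the coefficient would be computed per layer and head over many decoding steps; the toy vectors only show what the measurement looks like.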

The proposed strategy compresses the KV cache by retaining the keys with the lowest L2 norms, together with their corresponding values, and discarding the rest, which reduces the cache size significantly. Because it requires neither retraining nor architectural changes, it can be applied off the shelf to any decoder-only Transformer LLM.
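A minimal sketch of this eviction rule for a single attention head, assuming the cache is held as plain Python lists of vectors and that a fixed fraction of pairs is kept. The function name `compress_kv_cache` and its `keep_ratio` parameter are hypothetical; when compression is triggered during decoding, and whether any layers are treated differently, are not modelled here.

```python
import math


def compress_kv_cache(keys, values, keep_ratio):
    """Retain the cached (key, value) pairs whose keys have the smallest L2 norms.

    keys, values: equal-length lists of vectors for one attention head.
    keep_ratio:   fraction of pairs to keep (0.5 drops half the cache);
                  the kept count is rounded to the nearest integer.
    Returns (kept_keys, kept_values) in their original token order.
    """
    if len(keys) != len(values):
        raise ValueError("keys and values must have the same length")
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in [0, 1]")
    n_keep = round(len(keys) * keep_ratio)
    norms = [math.sqrt(sum(x * x for x in k)) for k in keys]
    # Indices of the n_keep lowest-norm keys, re-sorted so survivors stay in token
    # order (attention over the cached pairs is unaffected by their storage order).
    lowest = sorted(range(len(keys)), key=lambda i: norms[i])[:n_keep]
    keep = sorted(lowest)
    # Each value is kept or dropped together with its key.
    return [keys[i] for i in keep], [values[i] for i in keep]


keys = [[3.0, 4.0], [0.0, 1.0], [2.0, 0.0], [0.0, 0.5]]  # norms 5, 1, 2, 0.5
values = [[10.0], [11.0], [12.0], [13.0]]
kept_keys, kept_values = compress_kv_cache(keys, values, keep_ratio=0.5)
print(kept_keys)    # [[0.0, 1.0], [0.0, 0.5]]
print(kept_values)  # [[11.0], [13.0]]
```

In a real model the same selection would run per layer and per head on tensors rather than lists; the rule itself needs only the keys, not the attention scores.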

Experimental Results

The authors evaluate their proposed method on several tasks:

- Language Modelling:

  - The experiments show that removing up to 50% of the KV cache based on the L2 norm does not degrade perplexity. Figure 3 shows that perplexity remains stable until a large compression ratio is applied, which confirms the practicality of the method.

  - Additional evaluations with alternative strategies (keeping high-norm keys and random compression) show that retaining low-norm keys performs significantly better.

- Long-Context Modelling Tasks:

  - Needle-in-a-Haystack and Passkey Retrieval Tasks:

    - The method maintains 99% accuracy on the needle-in-a-haystack task even with a 50% KV cache reduction (Figure 8).

    - For passkey retrieval, the method maintains 100% accuracy even when compressing 90% of the KV cache (Figure 9).

    - These results contrast sharply with those of the other compression strategies, further supporting the effectiveness of the L2-norm-based approach.

Theoretical Analysis and Implications

The paper introduces an attention loss (ALr) to measure how much information the compression discards. Defined as the sum of the attention scores of the dropped KV pairs, it lets the authors quantify the correlation between attention scores and L2 norms (Equation 3).
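Following the definition given here, the attention loss of one compression step for a single query is AL = Σ_{i∈D} a_i, where a_i is the attention score of cached pair i and D is the set of dropped pairs. The sketch below computes it for norm-based dropping and, for contrast, for an "oracle" that drops the lowest-attention pairs. The oracle, the function names and the toy numbers are illustrative assumptions; the paper's Equation 3 may index layers, heads and compression ratios differently.

```python
def attention_loss(attn, dropped):
    """Sum of the attention scores of the dropped KV pairs (0 means nothing lost)."""
    return sum(attn[i] for i in dropped)


def dropped_by_highest_norm(norms, n_drop):
    """Norm-based rule: evict the n_drop pairs whose keys have the largest L2 norm."""
    return sorted(range(len(norms)), key=lambda i: norms[i], reverse=True)[:n_drop]


def dropped_by_lowest_attention(attn, n_drop):
    """Illustrative oracle: evict the n_drop pairs with the smallest attention scores.

    It needs the attention scores themselves, which a norm-only rule avoids.
    """
    return sorted(range(len(attn)), key=lambda i: attn[i])[:n_drop]


attn = [0.40, 0.05, 0.30, 0.10, 0.15]  # toy attention scores for one query (sum to 1)
norms = [0.9, 2.5, 1.1, 1.4, 2.0]      # toy L2 norms of the matching keys
n_drop = 2

norm_loss = attention_loss(attn, dropped_by_highest_norm(norms, n_drop))
oracle_loss = attention_loss(attn, dropped_by_lowest_attention(attn, n_drop))
print(f"norm-based: {norm_loss:.2f}, oracle: {oracle_loss:.2f}")  # 0.20 vs 0.15
```

Since dropping the n_drop smallest scores minimizes the sum, the oracle value is a lower bound for any rule that evicts n_drop pairs for this query; the gap between the two numbers is one way to express how well key norms stand in for attention scores.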

These findings have substantial practical implications. The method offers a simple, computationally cheap way to control KV cache size and could make LLMs easier to deploy in hardware-constrained environments. It is most relevant to applications where long-context handling is critical, such as document summarization, translation, and large-scale question-answering systems.

Future Directions

Future research could extend this heuristic to other model architectures and context lengths. Further theoretical work on why the L2 norm correlates so strongly with attention scores could also yield deeper insights and potentially more sophisticated memory-management strategies. Evaluating larger models such as Llama-2-13B and Llama-2-70B, as well as newer architectures, would help verify that the findings generalize.

Conclusion

The paper by Devoto et al. contributes a straightforward yet effective strategy for KV cache compression in LLMs that exploits the L2 norm of key embeddings. Validated on language modelling and on needle-in-a-haystack and passkey retrieval tasks, the approach shows that substantial reductions in memory footprint are achievable without degrading model performance at the compression ratios reported. This work has clear practical implications and opens avenues for further research on efficient LLM deployment.