Quest: Query-Aware Sparsity for Efficient Long-Context LLM Inference

The paper under review introduces "Quest," an approach to efficient long-context LLM inference that exploits query-aware sparsity in self-attention. As LLMs such as GPT-4 support context windows of 128K to 1M tokens, processing such long inputs in real time becomes increasingly expensive. The paper identifies the main source of inefficiency as loading the large key-value (KV) cache during self-attention, which contributes significantly to inference latency.

Methodological Contributions

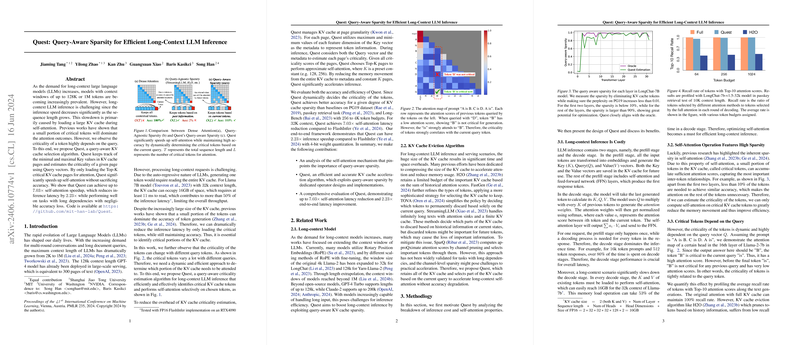

- Query-Aware KV Cache Selection: Quest dynamically selects the critical parts of the KV cache based on the current query. This differs from prior methods, which select KV cache entries using a static or history-based criterion. By estimating the criticality of each cache page from its interaction with the query vector, Quest reduces the number of key-value pairs used in attention with negligible loss in model accuracy.

- Efficient Page-Based Management: Quest manages the KV cache at the granularity of pages rather than individual key-value pairs. For each page it maintains the minimum and maximum value of the keys along every feature dimension. Combining these bounds with the query vector yields a per-page criticality score, which quickly identifies the pages that most influence the self-attention output.

- Performance Improvements: The paper reports up to a 7.03x speedup in self-attention and a 2.23x reduction in end-to-end latency, with negligible accuracy loss on tasks with long dependencies.
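The page-level selection described in the list above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: it handles a single attention head and a single query in pure Python, and the function names (`page_min_max`, `page_scores`, `select_pages`, `sparse_attention`) are hypothetical. The score for a page sums, over feature dimensions, the larger of `q_i * min_i` and `q_i * max_i`, which is an upper bound on the dot product between the query and any key in that page.

```python
import math

def page_min_max(keys, page_size):
    """Split keys into pages; return per-page, per-dimension min and max vectors."""
    pages = [keys[i:i + page_size] for i in range(0, len(keys), page_size)]
    mins = [[min(col) for col in zip(*page)] for page in pages]
    maxs = [[max(col) for col in zip(*page)] for page in pages]
    return mins, maxs

def page_scores(q, mins, maxs):
    """Upper bound on q . k over all keys k in each page.

    Per dimension, the largest possible product q_i * k_i is reached at
    either the page minimum or the page maximum, depending on the sign of q_i.
    """
    return [sum(max(qi * lo, qi * hi) for qi, lo, hi in zip(q, mn, mx))
            for mn, mx in zip(mins, maxs)]

def select_pages(q, mins, maxs, top_k):
    """Indices of the top_k pages with the highest estimated criticality."""
    scores = page_scores(q, mins, maxs)
    ranked = sorted(range(len(scores)), key=lambda p: scores[p], reverse=True)
    return sorted(ranked[:top_k])

def sparse_attention(q, keys, values, page_size, top_k):
    """Softmax attention restricted to the tokens of the selected pages."""
    mins, maxs = page_min_max(keys, page_size)  # in practice kept up to date, not rebuilt
    pages = select_pages(q, mins, maxs, top_k)
    idx = [i for p in pages
           for i in range(p * page_size, min((p + 1) * page_size, len(keys)))]
    scale = 1.0 / math.sqrt(len(q))
    logits = [scale * sum(a * b for a, b in zip(q, keys[i])) for i in idx]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - m) for x in logits]
    total = sum(weights)
    dim = len(values[0])
    out = [sum(w * values[i][j] for w, i in zip(weights, idx)) / total
           for j in range(dim)]
    return out, pages
```

When `top_k` covers every page, the result matches dense attention; smaller values trade accuracy for fewer key-value loads. A real system would presumably maintain the min/max metadata incrementally as tokens are appended and run both stages as GPU kernels, which this sketch does not attempt.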

Experimental Evaluation

The paper evaluates Quest on PG19, passkey retrieval, and the LongBench benchmarks. Quest outperforms existing KV cache eviction strategies such as H2O, TOVA, and StreamingLLM, achieving higher recall rates and maintaining high accuracy across diverse and demanding long-context scenarios.

In practical terms, Quest makes self-attention more efficient by loading and computing over only the most critical parts of the KV cache. This substantially reduces memory movement and improves overall throughput for inference on long sequences.
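A rough back-of-the-envelope model makes the memory-movement saving concrete. The sketch below counts only vectors loaded per decoding step: two metadata vectors (min and max) per page, plus the keys and values of the selected pages, compared with the full KV cache. The function name `kv_load_fraction` and the example sizes are illustrative assumptions, not figures taken from the paper, and the model ignores the cost of the top-k selection itself.

```python
def kv_load_fraction(seq_len, page_size, token_budget):
    """Fraction of the full KV cache traffic loaded under page-level selection.

    Assumes one min vector and one max vector of metadata per page, and that
    the token budget is spent on whole pages.
    """
    num_pages = -(-seq_len // page_size)             # ceiling division
    top_k_pages = min(num_pages, token_budget // page_size)
    metadata_vectors = 2 * num_pages                 # min + max per page
    selected_vectors = 2 * top_k_pages * page_size   # K and V for each selected token
    full_vectors = 2 * seq_len                       # K and V for every token
    return (metadata_vectors + selected_vectors) / full_vectors

# Hypothetical setting: 32K-token context, 16-token pages, 2048-token budget.
fraction = kv_load_fraction(seq_len=32768, page_size=16, token_budget=2048)
```

In this hypothetical setting the fraction is 0.125: the metadata costs 1/16 of the full cache traffic and the selected pages another 1/16, so roughly an eighth of the KV data is read per step. Smaller pages give finer selection but raise the metadata share, so page size is a trade-off.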

Implications and Future Directions

Quest suggests a shift in how long-context dependencies are handled in LLMs. Its dynamic, query-aware selection opens avenues for further optimization of sparsity-aware algorithms and could inform model design, particularly for commercial applications where latency and compute cost are critical.

Future work could reduce the computational overhead of criticality estimation, or scale the approach to the larger models and longer contexts expected in future LLMs. Combining Quest with other efficiency techniques such as quantization could further improve long-context deployments.

In conclusion, Quest demonstrates a sophisticated and effective method for improving the inference efficiency of LLMs with long-context capabilities, offering promising insights for both theoretical and practical advancements in artificial intelligence.