MMWorld: Comprehensive Evaluation of Multimodal LLMs in Video Understanding

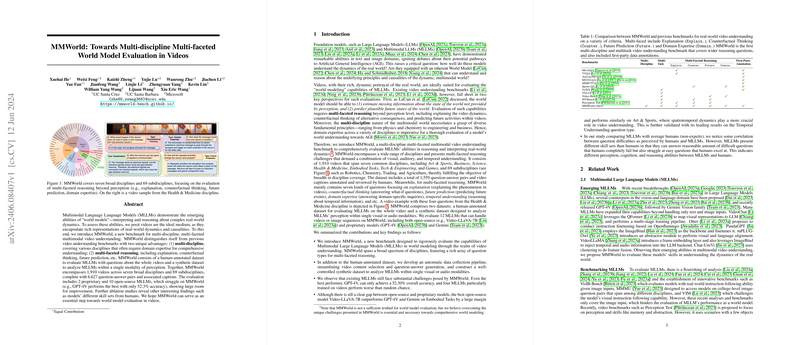

The paper "MMWorld: Towards Multi-discipline Multi-faceted World Model Evaluation in Videos" introduces a novel benchmark, MMWorld, aimed at assessing the multifaceted reasoning capabilities of Multimodal LLMs (MLLMs) through video understanding. MMWorld distinguishes itself with its unique dual focus on covering a broad spectrum of disciplines and presenting multi-faceted reasoning challenges. This new benchmark aspires to provide an extensive evaluation of MLLMs' abilities to understand and reason about real-world dynamics, making it a crucial resource for advancing research towards AGI.

Key Contributions

MMWorld presents several key contributions:

- Multi-discipline Coverage: MMWorld encompasses videos from seven broad disciplines and 69 subdisciplines, requiring domain-specific knowledge for comprehensive understanding.

- Multi-faceted Reasoning: The benchmark integrates various reasoning tasks, including explanation, counterfactual thinking, future prediction, domain expertise, and more, thereby extending the evaluation beyond mere perception.

- Dataset Composition: MMWorld consists of 1,910 videos accompanied by 6,627 question-answer pairs and captions. It comprises both a human-annotated dataset for whole-video evaluation and a synthetic dataset designed to test single-modality perception.

- Model Performance Evaluation: Twelve MLLMs, including both proprietary and open-source models, were evaluated, with GPT-4V achieving the highest accuracy of 52.3%, yet still demonstrating substantial room for improvement.

Evaluation Metrics and Results

The MMWorld benchmark evaluates MLLMs on how well they interpret and reason across various video-based tasks:

- Explanation: Models are tasked with explaining phenomena in the videos.

- Counterfactual Thinking: Models predict alternative outcomes to hypothetical scenarios.

- Future Prediction: Models predict future events based on current video context.

- Domain Expertise: Assesses models' abilities to answer domain-specific inquiries.

- Temporal Understanding: Evaluates reasoning about temporal information.

The performance of models varies significantly across different tasks and disciplines. Proprietary models like GPT-4V and Gemini Pro lead in most disciplines, achieving the highest overall accuracy. Open-source models like Video-LLaVA-7B show comparative performance on specific disciplines, particularly where spatiotemporal dynamics are crucial. Notably, four MLLMs performed worse than random chance, underlining the complexity and difficulty posed by MMWorld.

Implications for Future Research

The results from MMWorld illustrate both the current capabilities and limitations of MLLMs in understanding and reasoning about dynamic real-world scenarios. The clear performance gaps, even for top models like GPT-4V achieving only 52.3% accuracy, indicate substantial room for advancement. These findings prompt several future research directions:

- Improvement of MLLMs: Enhancing multimodal models to better understand and integrate visual, auditory, and temporal information.

- Domain-specific Training: Developing models with enhanced domain-specific knowledge to improve performance in specialized areas such as health and engineering.

- Temporal Reasoning: Focusing on improving models' capabilities in temporal understanding and prediction, which are crucial for many real-world tasks.

- Error Analysis and Mitigation: Investigating and mitigating common error types, such as hallucination, misunderstanding of visual or audio content, and reasoning flaws.

Furthermore, the comparative paper between MLLMs and human evaluators reveals that while models show promising results, there are distinct differences in reasoning and understanding capabilities. This insight encourages the development of hybrid systems leveraging both human expertise and model predictions.

Conclusion

MMWorld sets a new standard for evaluating the "world modeling" abilities of MLLMs in video understanding, covering a diverse range of disciplines and reasoning tasks. The benchmark highlights the current state and challenges in the field, serving as a critical tool for driving future innovations. As the quest for AGI continues, MMWorld provides a structured and comprehensive testing ground to explore and expand the horizons of multimodal AI, ultimately contributing to the creation of more robust, versatile, and intelligent systems.