Mixture-of-Agents Enhances LLM Capabilities

"Mixture-of-Agents Enhances LLM Capabilities" by Junlin Wang et al. presents a novel approach to leverage the collective strengths of multiple LLMs through a Mixture-of-Agents (MoA) methodology. This methodology addresses the inherent limitations of individual LLMs by introducing a layered architecture where each layer comprises multiple LLM agents that iteratively refine and improve responses.

Key Contributions

The paper's contributions are summarized below:

- Collaborativeness of LLMs: One of the pivotal insights from the paper is the phenomenon termed "collaborativeness," where LLMs tend to generate better responses when presented with outputs from other models, even if those other models are less capable. This effect was empirically validated on benchmarks such as AlpacaEval 2.0.

- Mixture-of-Agents Framework: The authors propose a MoA framework that iteratively enhances the generation quality by employing multiple LLMs at each layer to refine and synthesize responses. This iterative refinement continues for several cycles, ensuring a robust final output.

- State-of-the-Art Performance: The MoA framework achieves state-of-the-art performance on multiple benchmarks, including AlpacaEval 2.0, MT-Bench, and FLASK. The MoA using only open-source LLMs outperforms the GPT-4 Omni by a significant margin, achieving a score of 65.1% compared to 57.5% on AlpacaEval 2.0.

Methodology

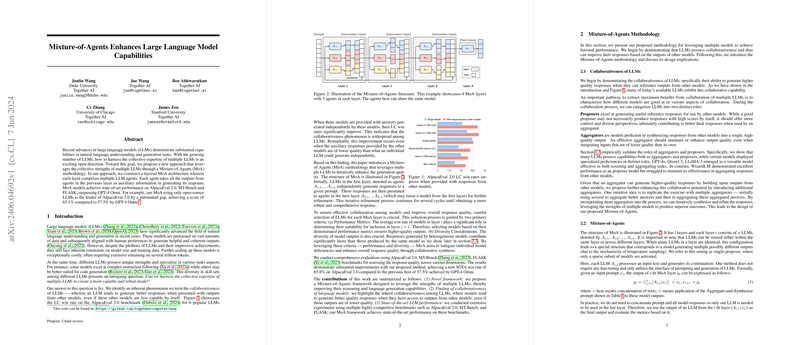

Mixture-of-Agents Architecture

The proposed MoA architecture consists of multiple layers, each containing several LLM agents. Each agent processes the outputs from the previous layer to generate refined responses. The iterative refinement process ensures that the final output is more comprehensive and robust.

The selection of LLMs for each MoA layer is based on two primary criteria:

- Performance Metrics: The average win rate of models in the current layer.

- Diversity Considerations: The diversity of model outputs, ensuring heterogeneous contributions to improve overall response quality.

Experimental Setup and Evaluations

The authors conducted extensive evaluations using AlpacaEval 2.0, MT-Bench, and FLASK benchmarks to assess the quality of responses generated by the MoA framework. Key results include:

- AlpacaEval 2.0: The MoA framework achieved a new state-of-the-art win rate of 65.1%, substantially outperforming GPT-4 Omni.

- MT-Bench: The MoA with GPT-4o achieved an average score of 9.40, demonstrating superior performance when compared to existing models.

- FLASK: The MoA framework outperformed GPT-4 Omni in various dimensions, including robustness, correctness, efficiency, factuality, and insightfulness.

Implications and Future Work

The introduction of the MoA framework has significant implications for the future of LLM research and deployment, both practically and theoretically.

Practical Implications

- Enhanced Model Performance: By leveraging multiple LLMs, the MoA framework provides a pathway to achieving higher performance without the need for extensive retraining of individual models.

- Cost Efficiency: The MoA-Lite variant demonstrates that comparable or superior performance can be achieved at a fraction of the cost, making it a cost-effective solution for deploying high-quality LLMs.

Theoretical Implications and Future Directions

- Understanding Collaboration: The collaborativeness phenomenon opens up new avenues for understanding how models can be better utilized collectively. Future research can explore the mechanics of model collaboration and optimization of MoA architectures.

- Scalability: The positive results suggest that further scaling the width (number of proposers) and depth (number of layers) of the MoA framework could yield even better performance, providing a promising direction for future investigation.

- Interpretable AI: The MoA approach, due to its iterative and layered refinement mechanism, enhances the interpretability of model outputs, potentially aiding in better alignment with human reasoning and preferences.

Conclusion

The paper "Mixture-of-Agents Enhances LLM Capabilities" introduces an innovative methodology for improving LLM performance by leveraging the collective expertise of multiple models. The MoA framework not only achieves state-of-the-art performance but also offers significant improvements in cost efficiency and interpretability. This work paves the way for more collaborative and efficient models, setting a new paradigm in the landscape of natural language processing and artificial intelligence.