Analyzing LLM Behavior in Dialogue Summarization: Unveiling Circumstantial Hallucination Trends

The paper "Analyzing LLM Behavior in Dialogue Summarization: Unveiling Circumstantial Hallucination Trends" by Sanjana Ramprasad, Elisa Ferracane, and Zachary C. Lipton explores the subtleties of summarization capabilities demonstrated by LLMs such as GPT-4 and Alpaca-13B. The authors critique the existing body of knowledge which predominantly focuses on BART-based models in evaluating news domain summaries, highlighting an opportunity to extend this evaluation framework to dialogue summarization.

In summarization, hallucinations present a persistent challenge, wherein models generate statements not directly supported by the source material. This research endeavors to bridge existing gaps by examining the faithfulness of LLM-generated dialogue summaries, identifying prevalent error categories, and proposing novel detection methodologies.

Research Contributions

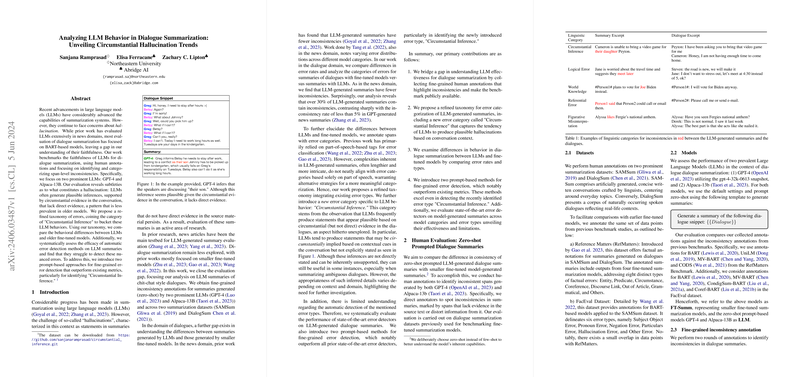

- Taxonomy of Errors: The authors introduce a refined taxonomy to classify LLM-generated summary inconsistencies, with a focus on dialogue summarization. They particularly underscore the emergence of "Circumstantial Inference" errors, where models like GPT-4 generate plausible conclusions based on circumstantial conversation cues rather than explicit information from the source dialogue. This is a nuanced form of hallucination, whereby commonsense implications surface in summaries but are devoid of direct textual evidence.

- Comparison Between Models: By comparing dialogue summarization performance between LLMs such as GPT-4 and Alpaca-13B, and older, fine-tuned models like BART, the authors reveal that LLM-generated summaries exhibit fewer inconsistencies, albeit not as few as those observed in news domain summaries. This evaluation was conducted using datasets such as SAMSum and DialogSum, where a notable finding was approximately 23% of GPT-4-generated summaries exhibiting inconsistencies, primarily due to circumstantial inference.

- Error Detection Evaluation: The paper assesses current state-of-the-art automatic error detection methods and asserts their inadequacy in identifying nuanced errors inherent in LLM-generated summaries. The authors introduce two novel prompt-based methods for fine-grained error detection. Their results demonstrate heightened efficacy, especially in identifying "Circumstantial Inference" errors — highlighting potential paths forward in summarization error detection methodologies.

- Annotation and Evaluation Techniques: Utilizing human annotations, the paper establishes a benchmark for LLM summarization inconsistencies. The analysis extends across multiple linguistic error categories, providing insights into how traditional error classification approaches fall short when applied to LLMs due to the inherent abstractions and inferences these models make.

Implications and Future Directions

The findings of this research underscore the need for re-evaluating and enhancing error detection frameworks due to the sophistication of LLM outputs. Specifically, the prevalence of circumstantial inferences in dialogue summarization urges the development of more exacting benchmarks and detection systems that align better with LLM capabilities. Furthermore, the incorporation of advanced metrics using prompt-based methods suggests promising directions for future research.

This investigation into dialogue summarization adds to the growing understanding of how LLMs behave in this less-explored context compared to the news domain. Decoding the intricacies of circumstantial inference bears significant theoretical implications by extending the boundaries of what constitutes a hallucination in the AI field. Practically, these insights could refine how automated systems summarize conversations in applications ranging from customer service to medical records, potentially enhancing the consistency and reliability of such technologies.

In conclusion, this paper adeptly identifies the subtleties in large-scale dialogue summarization and sets a precedent for advancing both theoretical frameworks and practical methodologies in the field of natural language processing and AI. Future research should explore expanding these benchmarks across a broader inventory of LLM architectures to further dissect the error landscapes these models encounter.