Analysis of the QJL: 1-Bit Quantized JL Transform for KV Cache Quantization

Overview

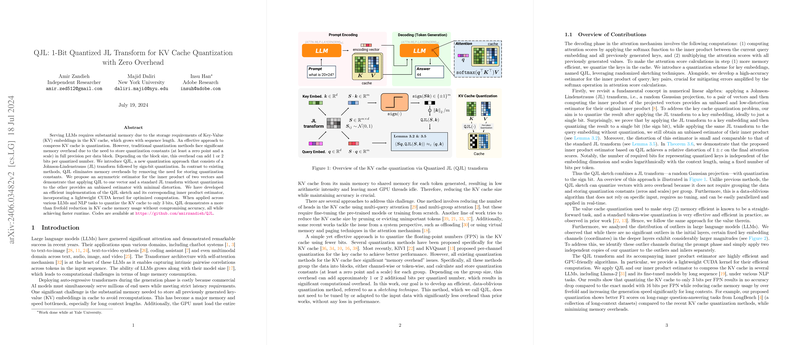

The paper "QJL: 1-Bit Quantized JL Transform for KV Cache Quantization with Zero Overhead" introduces a novel methodology for alleviating the substantial memory demands associated with LLMs, specifically addressing the key-value (KV) cache memory overhead during autoregressive generation. Traditional approaches to quantization in the KV cache suffer from overheads due to the storage of quantization constants. The proposed QJL method utilizes a Johnson-Lindenstrauss (JL) transform followed by a sign-bit quantization to effectively reduce this overhead.

Methodology

The QJL approach integrates randomized sketching techniques to quantize the KV cache with minimal distortion. Notably, this technique does not require the usual storage of quantization constants, achieving a zero overhead quantization. The model applies a JL transform on key embeddings followed by quantization to a single bit (the sign bit). Meanwhile, the query embeddings undergo the same JL transform, albeit unquantized. This asymmetric application allows the computation of unbiased inner product estimations necessary for the softmax operations during attention score calculations.

A key theoretical contribution lies in proving that the estimator retains low distortion and unbiasedness, even under quantization to a single bit. This claim is substantiated through several lemmas illustrating bounded distortion characteristics and high fidelity of the QJL transform when applied to real-time, parallelizable tasks. The proposed method is highly suited for GPU implementations, indicating significant practical applicability.

Empirical Results

The authors performed a comprehensive set of experiments demonstrating the efficacy of the QJL method across several LLMs, including Llama-2. It is reported that QJL can quantize the KV cache to 3 bits per floating-point number (FPN), achieving a more than fivefold reduction in memory usage without sacrificing model accuracy. In particular, the quantized models exhibited no accuracy drop compared to models utilizing a larger 16 bits per FPN, even improving F1 scores on long-range question-answering datasets such as LongBench.

Furthermore, the computational efficiency of QJL surpasses traditional quantization approaches, as evidenced by speed benchmarks comparing various methods. The paper highlights the advantage of QJL's data-oblivious algorithm, which is more efficient than contemporary methods like KVQuant due to eliminating the need for detailed preprocessing or adaptive mechanisms.

Implications and Future Directions

The QJL framework prominently contributes toward optimizing LLM deployment by significantly lowering memory requirements and computational burden while maintaining, or even improving, model accuracy. The findings have practical implications for the real-time deployment of LLMs in commercial settings with stringent latency and resource constraints.

Theoretically, the employment of a JL transform for quantization holds promise for further research on efficient model compression techniques. Future work may expand upon this by exploring alternative quantization schemas or refining the JL transform parameters to enhance precision or reduce overhead further.

Overall, the integration of JL transforms into the quantization process presents a compelling direction for both academia and industry, highlighting the paper's contributions to advancing LLM efficiency research. Future evaluations could extend to broader model architectures or explore the generalizability of this quantization strategy in other data-intensive domains beyond LLMing.