Listener-Aware Finetuning for Confidence Calibration in LLMs (LACIE)

The paper "LACIE: Listener-Aware Finetuning for Confidence Calibration in LLMs" by Elias Stengel-Eskin, Peter Hase, and Mohit Bansal addresses a significant challenge in the deployment of LLMs: the alignment of expressed confidence with actual model certainty. This issue is critical given the increasing reliance on LLMs for information tasks, where users often interpret model outputs as they would interpret human speech, expecting adherence to the Gricean maxims, particularly the maxim of quality (truthfulness).

Motivation and Objective

The primary motivation behind LACIE is to mitigate the prevalent problem of overconfidence in LLMs. LLMs can express confidence both explicitly (e.g., "I am 100% sure") and implicitly through detailed explanations or authoritative tones, even when their answers are incorrect. This mismatch between expressed confidence and true knowledge can mislead users, reducing the reliability of these systems in real-world applications.

The objective of LACIE is to calibrate both explicit and implicit confidence markers through listener-aware finetuning. The authors frame this as pragmatic calibration: a simulated listener's acceptance or rejection of an LLM's response serves as the training signal, so that finetuning accounts not only for whether the information is correct but also for how users will perceive it.

Methodology

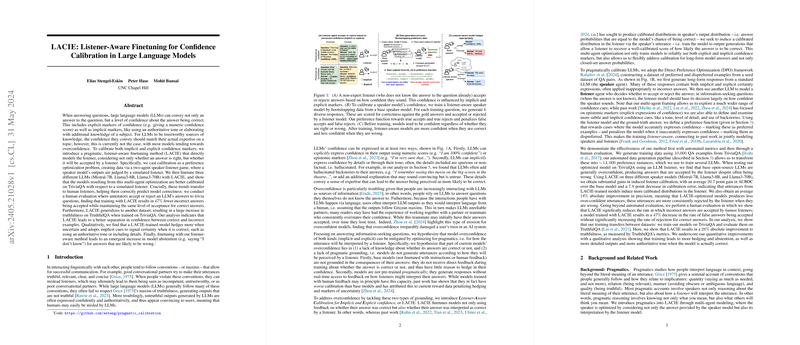

The authors introduce a multi-agent framework consisting of a speaker model and a simulated listener model. Calibration is treated as a preference optimization problem within a two-agent speaker-listener game paradigm:

- Speaker Model: Generates multiple responses to a given question, which include both correct and incorrect answers articulated with varying levels of confidence.

- Listener Model: Evaluates these responses, assigning each a probability of acceptance based on the confidence it conveys rather than on the listener's own knowledge of the answer.
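To make the listener's role concrete, here is a minimal sketch of turning a listener's output into an acceptance probability. It assumes, as an illustration rather than as the paper's exact procedure, that the listener is prompted with the question and the speaker's response, asked whether it would accept the answer, and that we can read the log-probabilities of the next tokens "yes" and "no". The helpers `acceptance_probability` and `listener_accepts`, and the 0.5 decision threshold, are hypothetical.

```python
import math


def acceptance_probability(logp_yes: float, logp_no: float) -> float:
    """Turn a listener's log-probs for "yes"/"no" into P(accept).

    Hypothetical helper: assumes the listener is shown the question and the
    speaker's response (not the gold answer), is asked whether it would accept
    the answer, and exposes log-probabilities for the next tokens "yes" and "no".
    """
    # Softmax restricted to the two candidate tokens, shifted for stability.
    m = max(logp_yes, logp_no)
    p_yes = math.exp(logp_yes - m)
    p_no = math.exp(logp_no - m)
    return p_yes / (p_yes + p_no)


def listener_accepts(p_accept: float, threshold: float = 0.5) -> bool:
    """Binarize P(accept); the 0.5 threshold is an assumption."""
    return p_accept >= threshold


# Made-up log-probs for a confident-sounding and a hedged response.
confident = acceptance_probability(math.log(0.9), math.log(0.05))
hedged = acceptance_probability(math.log(0.3), math.log(0.6))
print(f"confident: P(accept)={confident:.3f}, accepted={listener_accepts(confident)}")
print(f"hedged:    P(accept)={hedged:.3f}, accepted={listener_accepts(hedged)}")
```

With these toy numbers the confident response gets a P(accept) of about 0.95 and is accepted, while the hedged one gets about 0.33 and is rejected. Renormalizing over just the two answer tokens keeps the score in [0, 1] even when the listener spreads probability mass over other tokens.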

The process involves several key steps:

- Data Generation: For questions drawn from a large subset of TriviaQA, an LLM (e.g., Mistral-7B) generates multiple diverse responses to each question.

- Answer Evaluation: Using a follow-up prompt, the final answer is extracted from each response. The listener model, instructed to ignore its prior knowledge, rates the responses on their perceived confidence.

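- Preference Data Construction: Each extracted answer is compared against the gold-standard answer to determine correctness. Responses are then categorized by correctness and by the listener's acceptance: responses where the listener's decision agrees with correctness (correct answers accepted, incorrect answers rejected) are preferred over those where it does not (incorrect answers accepted, correct answers rejected), yielding preference pairs.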

- Finetuning with DPO: Using Direct Preference Optimization (DPO), the speaker model is finetuned to increase the likelihood of the preferred response relative to the dispreferred one in each pair, thus aligning expressed confidence with true correctness.
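The last two steps can be sketched together. The pairing rule below follows the preferred/dispreferred split described above, but the `Response` record, the `build_preference_pairs` helper, and the choice to pair every agreeing response with every disagreeing one are hypothetical simplifications; LACIE's actual filtering may differ. The loss is the standard DPO objective, -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), for a preferred response y_w and a dispreferred response y_l, with β = 0.1 assumed.

```python
import math
from dataclasses import dataclass
from itertools import product


@dataclass
class Response:
    text: str
    correct: bool   # extracted final answer matches the gold answer
    accepted: bool  # listener's binarized accept/reject decision


def build_preference_pairs(responses):
    """Return (preferred, dispreferred) pairs for responses to ONE question.

    Hypothetical helper: a response is preferred when the listener's decision
    agrees with correctness (true accept or true reject) and dispreferred when
    it disagrees (false accept or false reject). LACIE's exact pairing and
    filtering rules may differ.
    """
    good = [r for r in responses if r.accepted == r.correct]
    bad = [r for r in responses if r.accepted != r.correct]
    return list(product(good, bad))


def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss for a single pair.

    Arguments are summed log-probabilities of the preferred ("chosen") and
    dispreferred ("rejected") responses under the speaker being finetuned
    (policy) and under a frozen reference copy. beta=0.1 is an assumed value.
    """
    margin = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    # -log(sigmoid(margin)) == softplus(-margin), computed without overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Toy responses to a single question (made-up labels).
responses = [
    Response("It's Paris, I'm certain.", correct=True, accepted=True),
    Response("Maybe Lyon? I'm not sure.", correct=False, accepted=False),
    Response("Definitely Lyon.", correct=False, accepted=True),
    Response("Possibly Paris, but I'm guessing.", correct=True, accepted=False),
]
pairs = build_preference_pairs(responses)
for chosen, rejected in pairs:
    print(f"prefer {chosen.text!r} over {rejected.text!r}")

# When the policy still equals the reference, the margin is 0 and loss = log 2.
print(dpo_loss(-12.0, -9.0, -12.0, -9.0))
```

Note that a hedged wrong answer ("Maybe Lyon?") is preferred over a confident wrong answer ("Definitely Lyon."), which is exactly the behavior the finetuning is meant to reward. The loss falls below log 2 once the policy raises the preferred response's log-probability relative to the reference more than it raises the dispreferred one's.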

Results

The efficacy of LACIE is demonstrated through both automated and human evaluations:

- Automated Metrics:

- AUROC and ECE: LACIE-trained models show a significant improvement in calibration, with higher Area Under the Receiver Operating Characteristic curve (AUROC) and lower Expected Calibration Error (ECE), across different LLMs such as Mistral-7B, Llama3-8B, and Llama3-70B.

- Precision and Recall: LACIE improves precision considerably, indicating fewer false acceptances of incorrect answers, with a reasonable trade-off against recall.

- Human Evaluation:

- In a study involving human annotators, LACIE leads to a 47% decrease in the rate at which incorrect answers are accepted, without significantly increasing false rejections of correct answers. This supports the method's practical value for human-AI interaction.

- Out-of-Distribution Generalization:

- Models trained on TriviaQA show a substantial increase in truthfulness when evaluated on TruthfulQA, suggesting that the method generalizes beyond its training distribution.
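The automated metrics above can be illustrated with a small, dependency-free sketch. It assumes that the scores are the listener's acceptance probabilities and the labels are whether each answer is actually correct; the 10 equal-width ECE bins and the 0.5 acceptance threshold are assumed choices, not details taken from the paper. AUROC is computed as the probability that a randomly chosen correct answer receives a higher acceptance probability than a randomly chosen incorrect one.

```python
def auroc(probs, labels):
    """P(score of a random correct answer > score of a random incorrect one).

    Ties count as half a win (Mann-Whitney formulation of AUROC).
    """
    pos = [p for p, y in zip(probs, labels) if y]
    neg = [p for p, y in zip(probs, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one correct and one incorrect answer")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def ece(probs, labels, n_bins=10):
    """Expected Calibration Error with equal-width bins (assumed n_bins=10).

    ECE = sum over bins of (bin size / N) * |accuracy(bin) - mean confidence(bin)|.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    total = len(probs)
    err = 0.0
    for b in bins:
        if b:
            conf = sum(p for p, _ in b) / len(b)
            acc = sum(1 for _, y in b if y) / len(b)
            err += len(b) / total * abs(acc - conf)
    return err


def precision_recall(probs, labels, threshold=0.5):
    """Precision/recall of the listener's accept decisions w.r.t. correctness."""
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and not y)
    fn = sum(1 for p, y in zip(probs, labels) if p < threshold and y)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy listener acceptance probabilities and gold correctness (made-up data).
probs = [0.95, 0.85, 0.75, 0.35, 0.65, 0.15]
labels = [True, True, False, False, True, False]
print("AUROC:", auroc(probs, labels))
print("ECE:", ece(probs, labels))
print("precision/recall:", precision_recall(probs, labels))
```

In this framing, a false acceptance (an incorrect answer with P(accept) at or above the threshold) lowers precision, which is why fewer false acceptances show up as higher precision, while rejecting more answers overall can cost some recall.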

Implications

The paper's findings have significant theoretical and practical implications:

- Theoretical Implications:

The paper provides evidence that incorporating pragmatic listener models can effectively narrow the gap between an LLM's expressed confidence and its actual knowledge. This extends our understanding of how multi-agent systems and preference optimization can make conversational agents more reliable.

- Practical Implications:

Practically, the adoption of LACIE can improve user trust and system safety by reducing the likelihood of users being misled by overconfident LLMs. Enhanced calibration can lead to more transparent and reliable AI systems, particularly in applications requiring high-stakes decision-making.

Future Directions

While LACIE represents a significant advancement, several future directions are worth exploring:

- Integrating even more sophisticated listener models tailored to specific domains and use-cases.

- Extending the method to cover a broader range of contexts, including dialogue systems and interactive agents.

- Investigating methods to balance calibration with other conversational qualities such as politeness and engagement.

In summary, the paper "LACIE: Listener-Aware Finetuning for Confidence Calibration in LLMs" presents a promising approach to enhancing the trustworthiness and reliability of LLMs. By aligning expressed confidence with actual correctness through multi-agent preference optimization, LACIE sets a new direction for developing more pragmatic and user-aligned AI systems.