Examination of In-Context Learning for Instruction Following in LLMs

Overview

The paper "Is In-Context Learning Sufficient for Instruction Following in LLMs?" by Hao Zhao, Maksym Andriushchenko, Francesco Croce, and Nicolas Flammarion (EPFL, Switzerland) presents a rigorous evaluation of in-context learning (ICL) as a way to align LLMs for instruction following. Building on prior work including Brown et al. (2020) and Lin et al. (2024), it asks whether ICL can align LLMs as well as instruction fine-tuning does on established benchmarks such as MT-Bench and AlpacaEval 2.0.

The researchers compare the performance of several models and probe the limitations of ICL alignment through various strategies. Their work highlights how instruction following differs from more traditional ICL tasks such as classification, and it discusses open challenges and future directions for AI alignment techniques.

Main Findings

Performance Comparisons

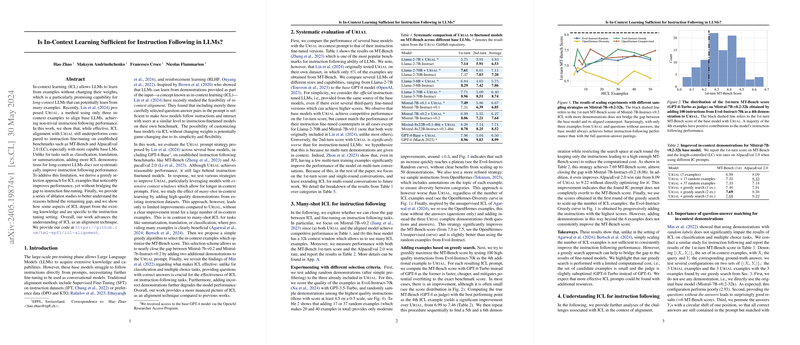

The research underscores a notable performance gap between ICL and instruction fine-tuning. Although URIAL (Lin et al., 2024), a previously proposed ICL alignment method that uses three in-context examples, is effective, base models aligned this way consistently lag behind their instruction-tuned counterparts.
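To make the ICL setup concrete, here is a minimal Python sketch of how a URIAL-style prompt might be assembled for a base model: a short preamble, k (instruction, response) demonstrations, and an open slot for the new query. The preamble text, the `# Query:` / `# Answer:` markers, and the names `Demo` and `build_icl_prompt` are illustrative assumptions, not URIAL's exact template.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Demo:
    """One in-context demonstration: an instruction and a reference response."""
    instruction: str
    response: str


# Hypothetical preamble; URIAL's real system prompt is longer and worded differently.
SYSTEM_PREAMBLE = (
    "Below are a series of dialogues between a user and a helpful, "
    "honest and harmless AI assistant."
)


def build_icl_prompt(demos: Sequence[Demo], query: str,
                     preamble: str = SYSTEM_PREAMBLE) -> str:
    """Concatenate a preamble, k demonstrations, and the new query.

    The base model is expected to continue the text after the final
    '# Answer:' marker. The marker strings are illustrative assumptions.
    """
    parts = [preamble]
    for demo in demos:
        parts.append(f"# Query:\n{demo.instruction}\n\n# Answer:\n{demo.response}")
    # Open slot that the base model completes at generation time.
    parts.append(f"# Query:\n{query}\n\n# Answer:\n")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demos = [
        Demo("What is 2 + 2?", "2 + 2 equals 4."),
        Demo("Name a primary color.", "Red is a primary color."),
        Demo("Translate 'bonjour' to English.", "'Bonjour' means 'hello'."),
    ]
    print(build_icl_prompt(demos, "Give one synonym for 'fast'."))
```

Passing three demonstrations mirrors the three-example setting described above; the same function accepts many more, limited only by the model's context window.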

- Performance metrics:
  - Llama-2-70B with ICL scored an average of 7.11 on first-turn MT-Bench questions, compared with 7.20 for its instruction-tuned counterpart.
  - Mistral-7B-v0.2 with ICL scored 6.27, compared with 7.64 for its instruction-tuned version.

The results indicate that adding more high-quality ICL examples yields only limited improvements: even with up to 50 in-context examples, the gap to instruction-tuned models does not close significantly.
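The size of the gap differs sharply between the two models in the comparison above. A few lines of Python make the arithmetic explicit, using only the scores listed there (`icl_gap` is a hypothetical helper name):

```python
# MT-Bench scores as listed above: (ICL on the base model, instruction-tuned model).
scores = {
    "Llama-2-70B": (7.11, 7.20),
    "Mistral-7B-v0.2": (6.27, 7.64),
}


def icl_gap(icl: float, tuned: float) -> float:
    """Points by which ICL trails the instruction-tuned model (positive = ICL is behind)."""
    return round(tuned - icl, 2)


for model, (icl, tuned) in scores.items():
    print(f"{model}: gap = {icl_gap(icl, tuned):.2f}")
# Llama-2-70B: gap = 0.09
# Mistral-7B-v0.2: gap = 1.37
```

The gap is under a tenth of a point for Llama-2-70B but well over a full point for Mistral-7B-v0.2.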

Greedy Selection Approach

To mitigate this underperformance relative to instruction fine-tuning, the authors explore a greedy strategy for selecting ICL examples, focusing on the base model Mistral-7B-v0.2 because of its long context window.

- Adding examples through greedy search yielded:
  - an improvement in MT-Bench score from 6.99 to 7.46 with one additional example;
  - only marginal further gains from additional examples, signaling diminishing returns.
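The greedy strategy can be sketched as forward selection: starting from an initial set of demonstrations, repeatedly append the candidate that most improves a score on held-out instructions, and stop when no candidate helps enough. This is a minimal sketch under assumptions: `score` stands in for an expensive evaluator such as an LLM-judged benchmark run, and `greedy_select`, `initial`, `max_examples`, and `min_gain` are hypothetical names; the authors' exact search procedure may differ.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def greedy_select(
    candidates: Sequence[T],
    score: Callable[[List[T]], float],
    max_examples: int,
    initial: Sequence[T] = (),
    min_gain: float = 0.0,
) -> List[T]:
    """Greedy forward selection of in-context examples (illustrative sketch).

    `score` is a hypothetical evaluator that maps an ordered list of
    demonstrations to a benchmark-style score. `max_examples` caps the
    total size of the selection, including `initial`.
    """
    selected: List[T] = list(initial)
    remaining: List[T] = [c for c in candidates if c not in selected]
    best_score = score(selected)
    while remaining and len(selected) < max_examples:
        # Try appending each remaining candidate to the current selection.
        trial_scores = [score(selected + [c]) for c in remaining]
        best_idx = max(range(len(remaining)), key=lambda i: trial_scores[i])
        # Stop when even the best addition does not improve the score enough.
        if trial_scores[best_idx] - best_score <= min_gain:
            break
        best_score = trial_scores[best_idx]
        selected.append(remaining.pop(best_idx))
    return selected
```

Each step calls `score` once per remaining candidate, so a full search costs on the order of `max_examples × len(candidates)` evaluations; with an LLM judge behind `score`, that cost is what makes resource-efficient variants attractive.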

The paper also finds that correct answers in the ICL examples are crucial: incorrect demonstrations substantially degrade performance, especially in longer ICL prompts. Second-turn MT-Bench scores, in particular, expose ICL's limitations on multi-turn instructions, reflecting the lack of multi-turn demonstrations.
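One simple way to build incorrect demonstrations for such an ablation is to keep every instruction but pair it with a response written for a different instruction. The rotation scheme and the names `Demo` and `mismatch_responses` below are assumptions for illustration, not necessarily the corruption used in the paper.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence


@dataclass(frozen=True)
class Demo:
    """One in-context demonstration: an instruction and a reference response."""
    instruction: str
    response: str


def mismatch_responses(demos: Sequence[Demo]) -> List[Demo]:
    """Return demonstrations whose responses are rotated by one position.

    Each instruction is paired with the response written for the next
    instruction (the last wraps around to the first), so the prompt keeps
    its format and style while the answers no longer fit the questions.
    """
    if len(demos) < 2:
        raise ValueError("need at least two demonstrations to mismatch responses")
    responses = [d.response for d in demos]
    rotated = responses[1:] + responses[:1]
    # dataclasses.replace builds a new frozen instance with one field changed.
    return [replace(d, response=r) for d, r in zip(demos, rotated)]
```

Rotation keeps every original response in the prompt, so the corrupted and clean prompts differ only in how answers are matched to questions, not in length or style.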

Scaling Experiments and Transferability

The research also examines how scaling the number of ICL examples affects instruction following and generally finds no consistent improvement. Notably, a few carefully chosen, high-quality examples can be more beneficial than a larger set of randomly selected ones.

- Experiments with many-shot ICL:
  - demonstrated only limited improvements;
  - showed performance plateauing after a certain number of examples.
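A plateau in a many-shot sweep can be located mechanically: find the smallest number of shots after which no larger setting improves the score by more than a chosen tolerance. The function name `plateau_point` and the `tolerance` parameter are hypothetical; the sketch takes whatever (number of examples, score) pairs a sweep produces and does not reproduce the paper's measurements.

```python
from typing import Optional, Sequence, Tuple


def plateau_point(results: Sequence[Tuple[int, float]], tolerance: float) -> Optional[int]:
    """Smallest shot count whose score is never beaten by more than
    `tolerance` at any larger shot count in the sweep.

    `results` holds (num_examples, score) pairs. Returns None when no
    setting before the last one qualifies, i.e. the curve is still
    climbing at the end of the sweep.
    """
    ordered = sorted(results)  # sort by number of in-context examples
    for i, (num_examples, current) in enumerate(ordered[:-1]):
        later_scores = [s for _, s in ordered[i + 1:]]
        # Plateau: no later setting improves on this one by more than `tolerance`.
        if all(s <= current + tolerance for s in later_scores):
            return num_examples
    return None
```

The tolerance encodes what counts as a meaningful gain; on a judge-scored benchmark it is natural to set it near the run-to-run noise of the evaluator.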

Transferring the optimized ICL examples to other LLMs yielded mixed results, suggesting that effective ICL strategies may need to be tailored to each model, possibly because of differences in their pre-training data and architectures.

Implications and Future Directions

This paper has several theoretical and practical implications for AI alignment:

- Theoretical understanding:
  - Aligning LLMs through ICL behaves differently from ICL on tasks such as classification or summarization; for instance, the correctness of the demonstrated answers matters for instruction following.
  - Comprehensive evaluations show that ICL, while promising, does not reliably match the performance of instruction fine-tuning, particularly on complex, open-ended instruction-following tasks.

- Practical methodologies:
  - Fine-tuning remains a critical requirement for the best instruction-following performance in LLMs.
  - ICL can be improved with high-quality, carefully selected examples, although this does not fully recover the benefits of instruction fine-tuning.

- Future development:
  - Improving ICL for multi-turn conversations and developing scalable, resource-efficient greedy selection strategies are promising directions for future research.
  - The uneven transfer of ICL examples across models points to the need for alignment techniques customized to each LLM, which could improve model robustness and versatility, particularly in long-context settings.

Conclusion

The paper provides a substantive analysis of ICL as an alignment technique for instruction following in LLMs. It discusses at length the challenges and shortcomings of ICL compared with instruction fine-tuning, particularly for complex, open-ended tasks. Ultimately, while ICL shows potential as a simple, cost-efficient alignment strategy, instruction fine-tuning remains necessary to harness the full capabilities of LLMs in instruction-following applications. The paper's insights motivate further exploration of how ICL scales and how best to optimize it across diverse LLM architectures and tasks.