Self-Training on Image Comprehension for Large Vision Language Models

The paper introduces Self-Training on Image Comprehension (STIC), an approach for improving the image comprehension of large vision language models (LVLMs). Its goal is to sidestep the high cost and labor of procuring the high-quality vision-language data these models are normally trained on. Instead of relying on extensive supervised data, STIC trains the model on data it generates itself.

Overview of the Approach

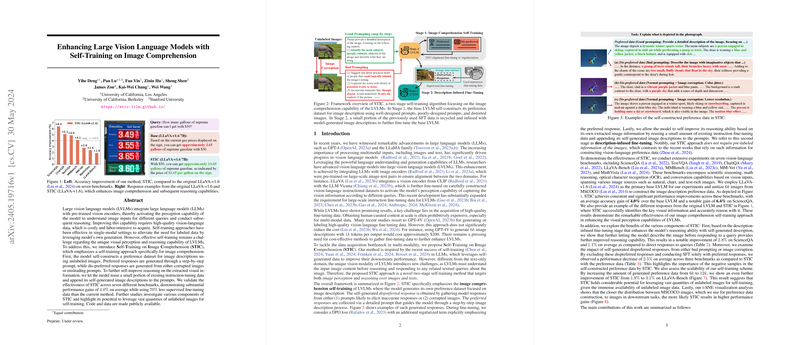

The paper details a two-stage self-training algorithm specifically designed for LVLMs.

- Stage 1: Image Comprehension Self-Training:

- The model autonomously constructs a preference dataset for image descriptions from unlabeled images.

- Each entry is a pair: a preferred response generated with a well-constructed, explicit reasoning prompt, and a dispreferred response generated from either a corrupted image or a misleading ("bad") prompt.

- The LVLM is then fine-tuned on this self-constructed preference dataset using a modified Direct Preference Optimization (DPO) objective, with an added regularization term that emphasizes the preferred responses.

- Stage 2: Description-Infused Fine-Tuning:

- The second stage focuses on reinforcing the model's reasoning abilities by integrating its self-generated image descriptions into existing instruction-tuning data.

- This stage reuses a small portion of the fine-tuning data from the supervised fine-tuning (SFT) stage, infusing the prompts with model-generated image descriptions and further fine-tuning the LVLM.
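
The Stage 1 fine-tuning objective described above can be illustrated with a minimal sketch. It assumes the standard DPO logistic loss on sequence log-probabilities and takes the added regularizer to be a negative log-likelihood term on the preferred response. The function name, the `beta` and `alpha` parameters, and their default values are illustrative assumptions, not settings from the paper.

```python
import math


def regularized_dpo_loss(
    policy_logp_preferred: float,
    policy_logp_dispreferred: float,
    ref_logp_preferred: float,
    ref_logp_dispreferred: float,
    beta: float = 0.1,   # assumed DPO temperature, not taken from the paper
    alpha: float = 1.0,  # assumed regularizer weight, not taken from the paper
) -> float:
    """Per-example DPO loss plus a term that rewards the preferred response.

    Inputs are summed token log-probabilities of each response under the
    policy being trained and under the frozen reference model.
    """
    # Implicit reward margin between the preferred and dispreferred responses.
    margin = beta * (
        (policy_logp_preferred - ref_logp_preferred)
        - (policy_logp_dispreferred - ref_logp_dispreferred)
    )
    # Standard DPO term: -log(sigmoid(margin)) = log(1 + exp(-margin)),
    # written in a numerically stable form.
    dpo_term = max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
    # Assumed regularizer: negative log-likelihood of the preferred response.
    regularizer = -alpha * policy_logp_preferred
    return dpo_term + regularizer
```

With `alpha=0.0` the function reduces to the plain DPO loss, so the regularizer's effect can be checked in isolation. In practice the log-probabilities would come from the LVLM and the loss would be averaged over a batch in an autodiff framework.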

Experimental Validation

The experimental results validate the efficacy of the proposed STIC approach. Multiple benchmarks were used to demonstrate performance improvements, including:

- ScienceQA

- TextVQA

- ChartQA

- LLaVA-Bench

- MMBench

- MM-Vet

- MathVista

STIC achieves an average improvement of 4.0% over the baseline while using 70% less supervised fine-tuning data. Notably, the gain on ScienceQA is 6.4%. These results underscore the effectiveness of leveraging unlabeled images for self-training and highlight STIC's potential to reduce dependence on costly annotated datasets.

Discussion and Implications

Comparison to Existing Methods

The paper compares STIC with existing vision-language fine-tuning methods such as POVID. While POVID relies on manually injected hallucinations using labeled object information, STIC uses only unlabeled images and self-generated descriptions. This automatic generation process not only simplifies data preparation but also yields larger performance gains.
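
The automatic pair construction can be sketched as follows. The `describe` callable stands in for a call to the LVLM, and `corrupt`, the three prompt strings, and the even split between the two dispreferred strategies are hypothetical placeholders rather than the paper's exact settings.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical prompts; the paper's actual wording is not reproduced here.
GOOD_PROMPT = "Describe the image step by step, covering objects, text, and layout."
PLAIN_PROMPT = "Describe the image."
BAD_PROMPT = "Describe the image, including things that are likely but not visible."


@dataclass
class PreferencePair:
    image: str
    preferred: str
    dispreferred: str


def build_preference_pairs(
    images: List[str],
    describe: Callable[[str, str], str],  # (image, prompt) -> text; stands in for the LVLM
    corrupt: Callable[[str], str],        # image -> corrupted image; hypothetical helper
    rng: random.Random,
) -> List[PreferencePair]:
    """Build one (preferred, dispreferred) description pair per unlabeled image."""
    pairs = []
    for image in images:
        # Preferred: clean image with a well-constructed reasoning prompt.
        preferred = describe(image, GOOD_PROMPT)
        if rng.random() < 0.5:  # assumed 50/50 split between the two strategies
            # Dispreferred option 1: corrupted image with a plain prompt.
            dispreferred = describe(corrupt(image), PLAIN_PROMPT)
        else:
            # Dispreferred option 2: clean image with a misleading prompt.
            dispreferred = describe(image, BAD_PROMPT)
        pairs.append(PreferencePair(image, preferred, dispreferred))
    return pairs
```

No labels or object annotations appear anywhere in this loop, which is the contrast with POVID drawn above: both sides of each pair come from the model itself.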

Contribution of Key Components

Through ablation studies, the paper examines the contribution of individual components within STIC. One such study removes the dispreferred responses and observes a performance degradation, establishing the crucial role of negative samples in preference fine-tuning. Additionally, the “describe-and-respond” (DaR) prompting method further improves STIC's performance, reaffirming the benefit of integrating self-generated descriptions.
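
A minimal sketch of describe-and-respond prompting, assuming a two-call flow in which the model first describes the image and then answers with that description added to the prompt. The `generate` callable stands in for the LVLM, and the prompt wording and template are hypothetical.

```python
from typing import Callable


def describe_and_respond(
    image: str,
    question: str,
    generate: Callable[[str, str], str],  # (image, prompt) -> text; stands in for the LVLM
    describe_prompt: str = "Describe the image in detail.",  # hypothetical wording
) -> str:
    """Answer a question about an image using the model's own description."""
    # Step 1: ask the model for its own description of the image.
    description = generate(image, describe_prompt)
    # Step 2: infuse the description into the question prompt and ask again.
    infused_prompt = f"Image description: {description}\n\nQuestion: {question}"
    return generate(image, infused_prompt)
```

The same infusion step mirrors what Stage 2 does at training time, where instruction-tuning prompts are augmented with model-generated descriptions before fine-tuning.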

Scalability and Generalization

Another notable point is STIC's scaling behavior. Increasing the amount of generated preference data from 6k to 12k yielded further performance improvements, suggesting that STIC can effectively use larger datasets. Furthermore, a t-SNE visualization supports a correlation between image distribution overlap and performance gains: STIC helps most on tasks whose images are distributed similarly to those in the self-generated dataset.

Future Directions

Given the promising results, future work could explore more diverse and larger-scale datasets to broaden the generalizability of STIC. The paper's implications extend to cost-effective training paradigms for LVLMs, potentially reshaping how vision-language data is acquired and used. Further refinement of the self-training steps and more sophisticated negative sampling techniques could yield additional gains in LVLM capabilities.

Conclusion

In summary, the proposed STIC framework represents a significant advance in self-training methods for LVLMs. By leveraging self-generated data and reducing reliance on extensive supervised fine-tuning datasets, STIC achieves substantial performance improvements across various benchmarks. The work is a step toward cost-effective and scalable training paradigms for vision language models, with promising avenues for future exploration and enhancement.