Overview of "Nearest Neighbor Speculative Decoding"

This paper introduces Nearest Neighbor Speculative Decoding (Nest), an approach to semi-parametric language modeling that combines parametric language models (LMs) with retrieval-augmented methods to generate more accurate and reliably attributed content. Nest incorporates a non-parametric datastore to refine and ground the LM's predictions, addressing the well-documented tendency of LMs to hallucinate.

Key Contributions

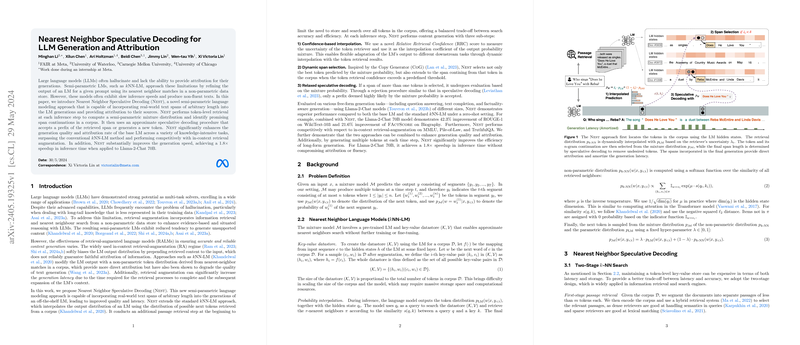

- Novel Architecture: Nest extends the kNN-LM framework with a series of enhancements, including a two-stage retrieval process for more efficient and accurate token prediction. The architecture also incorporates confidence-based interpolation, dynamic span selection, and a relaxed form of speculative decoding.

- Two-Stage Retrieval: Nest first retrieves passages to narrow the search space, then performs k-nearest neighbor (kNN) token retrieval within those passages. This balances accuracy and efficiency, reducing generation latency and requiring less storage and computation than maintaining a token-level key-value store over the entire corpus.

- Confidence-Based Interpolation: Nest introduces Relative Retrieval Confidence (RRC) to dynamically weight the LM's own next-token distribution against the retrieval-based distribution. This adaptivity helps Nest perform well across diverse downstream tasks.

- Dynamic Span Selection: Inspired by the Copy Generator (CoG) approach, Nest can select not just the next token but a multi-token span from the corpus when retrieval confidence is sufficiently high. This improves coherence and attribution in the generated text while also speeding up generation.

- Relaxed Speculative Decoding: Building on speculative decoding, Nest has the LM evaluate each proposed span and accept or reject its tokens against a confidence threshold, preserving fluency and factuality in the output.
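The two-stage retrieval idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the paper's implementation: passages are scored by dot product against a passage-level query vector, the token keys stored with each passage stand in for the LM hidden states Nest would compute for retrieved passages, and the kNN distribution is a softmax over negative squared distances aggregated per next token, as in kNN-LM. The passage layout (`emb`, `tokens`) and all parameter names are hypothetical.

```python
import math
from collections import defaultdict


def dot(u, v):
    """Dot product, used here as the passage-retrieval score."""
    return sum(a * b for a, b in zip(u, v))


def sq_dist(u, v):
    """Squared Euclidean distance between a query and a token key."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def two_stage_knn(passage_query, token_query, passages,
                  top_passages=2, k=4, temperature=1.0):
    """Next-token distribution from kNN over tokens of retrieved passages only.

    passages: list of dicts holding a passage embedding under "emb" and a list
    of (key_vector, next_token) pairs under "tokens" (hypothetical layout).
    """
    # Stage 1: keep only the highest-scoring passages; the token search below
    # never touches the rest of the corpus.
    ranked = sorted(passages, key=lambda p: dot(passage_query, p["emb"]),
                    reverse=True)
    candidates = [kv for p in ranked[:top_passages] for kv in p["tokens"]]
    if not candidates:
        return {}

    # Stage 2: the k token keys nearest to the current LM query vector.
    neighbors = sorted(candidates,
                       key=lambda kv: sq_dist(token_query, kv[0]))[:k]

    # Softmax over negative distances, summing the weight of each next token.
    logits = [-sq_dist(token_query, key) / temperature for key, _ in neighbors]
    top = max(logits)
    weights = [math.exp(x - top) for x in logits]
    total = sum(weights)
    p_knn = defaultdict(float)
    for (_, token), w in zip(neighbors, weights):
        p_knn[token] += w / total
    return dict(p_knn)


passages = [
    {"emb": [1.0, 0.0], "tokens": [([1.0, 0.0], "Paris"),
                                   ([0.9, 0.1], "Paris"),
                                   ([0.0, 1.0], "London")]},
    {"emb": [0.0, 1.0], "tokens": [([0.0, 1.0], "Rome")]},
]
print(two_stage_knn([1.0, 0.0], [1.0, 0.0], passages, top_passages=1, k=2))
```

Only the top passage is searched in this example, so "Rome" can never receive probability mass however close its key might be. Restricting the token search this way is what avoids storing and searching token keys for the whole corpus.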
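Confidence-based interpolation keeps the kNN-LM mixture p = λ·p_kNN + (1 - λ)·p_LM but lets λ change at every decoding step. The sketch below assumes a scalar retrieval-confidence score in [0, 1] as a stand-in for RRC and maps it to λ with a sigmoid gate. The paper's exact definition of RRC and of this mapping may differ; `rrc_gate`, `alpha`, and `tau` are hypothetical names.

```python
import math


def rrc_gate(retrieval_conf, alpha=0.5, tau=0.1):
    """Map a retrieval-confidence score to an interpolation weight in (0, 1).

    retrieval_conf stands in for Nest's RRC; alpha and tau are hypothetical
    offset and temperature parameters of this sigmoid gate.
    """
    return 1.0 / (1.0 + math.exp(-(retrieval_conf - alpha) / tau))


def interpolate(p_lm, p_knn, retrieval_conf, alpha=0.5, tau=0.1):
    """Return lambda * p_kNN + (1 - lambda) * p_LM, with lambda set per step."""
    lam = rrc_gate(retrieval_conf, alpha, tau)
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1.0 - lam) * p_lm.get(t, 0.0)
            for t in vocab}


p_lm = {"Paris": 0.3, "Lyon": 0.7}
p_knn = {"Paris": 1.0}
print(interpolate(p_lm, p_knn, retrieval_conf=0.9))  # mostly trusts retrieval
print(interpolate(p_lm, p_knn, retrieval_conf=0.1))  # mostly trusts the LM
```

A fixed λ, as in the original kNN-LM, would apply the same mix whether or not the retrieved neighbors are relevant; gating λ on a confidence signal lets the model fall back on its own distribution when retrieval looks unreliable.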
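Dynamic span selection and relaxed speculative decoding fit together as draft-then-verify. In the minimal sketch below, a span is drafted from the corpus only when the top token of the interpolated distribution clears a threshold `delta`, and the LM then checks the draft position by position. The acceptance rule (keep a token if its LM probability is at least `gamma` times that of the LM's top token, and substitute the LM's choice at the first rejection) is an assumed relaxed criterion for illustration; `continuations`, `delta`, `gamma`, and `max_len` are hypothetical.

```python
def propose_span(p_mixed, continuations, delta=0.5, max_len=4):
    """Dynamic span selection (simplified).

    If the most likely next token is confident enough and was retrieved from
    the corpus, draft it together with the tokens that follow it there.
    continuations: hypothetical map from a retrieved token to its corpus
    continuation.
    """
    token, prob = max(p_mixed.items(), key=lambda kv: kv[1])
    if prob >= delta and token in continuations:
        return [token] + continuations[token][: max_len - 1]
    return [token]


def relaxed_accept(span, lm_dists, gamma=0.3):
    """Keep the longest prefix of span that the LM finds plausible.

    lm_dists[i] is the LM's next-token distribution at span position i; in
    speculative decoding these come from one forward pass over the draft.
    Relaxed criterion (an assumption): accept a token if its probability is
    at least gamma times that of the LM's top token. At the first rejection,
    substitute the LM's top token and discard the rest of the draft.
    """
    accepted = []
    for token, dist in zip(span, lm_dists):
        best = max(dist, key=dist.get)
        if dist.get(token, 0.0) >= gamma * dist[best]:
            accepted.append(token)
        else:
            accepted.append(best)
            break
    return accepted


p_mixed = {"Eiffel": 0.7, "the": 0.2, "a": 0.1}
continuations = {"Eiffel": ["Tower", "was", "built", "in"]}
span = propose_span(p_mixed, continuations)
lm_dists = [{"Eiffel": 0.6, "the": 0.4}, {"Tower": 0.9, "Arch": 0.1},
            {"was": 0.05, "is": 0.95}, {"built": 0.8, "located": 0.2}]
print(span)                            # ['Eiffel', 'Tower', 'was', 'built']
print(relaxed_accept(span, lm_dists))  # ['Eiffel', 'Tower', 'is']
```

Several tokens can be committed per verification pass over the draft, which is where the speedup of speculative decoding comes from. A smaller `gamma` accepts more corpus text verbatim, trading some agreement with the LM for speed and attribution.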

Experimental Validation

The authors evaluated Nest on various free-form generation tasks, such as question answering and text completion, using Llama-2-Chat models of different sizes in a zero-shot setting. The paper highlights several key results:

- Performance Gains: Combined with Llama-2-Chat 70B, Nest improved ROUGE-1 on WikiText-103 by 42.3% and FActScore on the Biography dataset by 21.6% relative to the base model.

- Efficiency: The speculative decoding technique accelerates the generation process, achieving a 1.8x speedup in inference time for long-form generation without compromising textual quality or factual attribution.

- Attribution: Because Nest copies spans directly from the retrieval corpus, a large proportion of the generated text can be attributed back to specific sources, making the output more credible and easier to verify.
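Because copied spans carry their origin with them, attribution can be tracked alongside generation. A minimal sketch, assuming each output segment records the ID of the passage it was copied from (the `Segment` type and the passage IDs are hypothetical), computes the share of generated tokens that trace back to the corpus:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    tokens: List[str]
    source_id: Optional[str]  # hypothetical passage ID; None if LM-generated


def attribution_ratio(segments):
    """Fraction of generated tokens that were copied from a corpus span."""
    total = sum(len(s.tokens) for s in segments)
    copied = sum(len(s.tokens) for s in segments if s.source_id is not None)
    return copied / total if total else 0.0


output = [
    Segment(["Marie", "Curie", "won", "two"], "passage-17"),
    Segment(["Nobel", "Prizes"], "passage-17"),
    Segment(["."], None),
    Segment(["She"], None),
]
print(attribution_ratio(output))  # 6 of 8 tokens -> 0.75
```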

Implications and Future Directions

The implications of Nest are multifaceted, impacting both practical applications and theoretical advancements in AI:

- Practical Implications: Nest shows significant potential for applications that require high factual accuracy and reliable attribution, such as automated journalism, summarization of legal and medical content, and educational tools. Drawing content directly from a non-parametric datastore helps mitigate hallucination and lets users trace where information came from.

- Theoretical Implications: The introduction of a two-stage retrieval mechanism and confidence-based interpolation opens new avenues for blending parametric and non-parametric methods efficiently. Future research might explore more sophisticated interpolation techniques and the scalability of such hybrid models to even larger datasets and more complex retrieval tasks.

- Efficiency Implications: The paper contributes to ongoing work on making generation models more efficient. Because the LM verifies a multi-token span in parallel rather than generating it token by token, and because token retrieval is restricted to a small set of passages, Nest reduces latency, which is crucial for real-time applications.

Conclusion

Nest represents a significant stride in semi-parametric language modeling, addressing key limitations of existing retrieval-augmented methods. Its demonstrated improvements in both generation quality and efficiency suggest broad applicability and motivate further work on enhancing LM capabilities. This work lays the groundwork for more robust and accountable text generation systems that produce reliable, factual output in a variety of practical contexts.