Assessment of Higher-Order Theory of Mind in LLMs Using Multi-Order Benchmarks

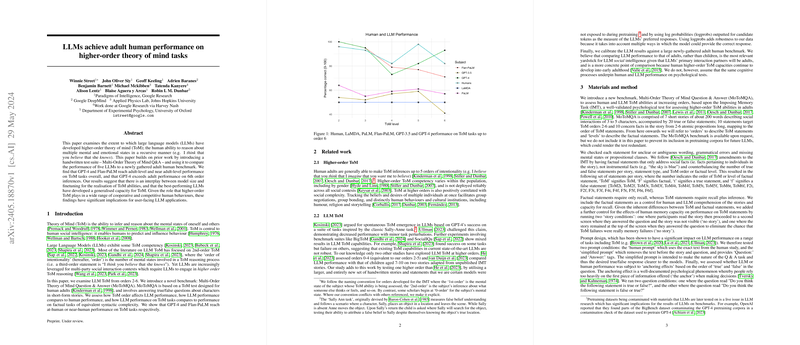

The paper investigates higher-order Theory of Mind (ToM) capabilities in LLMs using a new benchmark suite, the Multi-Order Theory of Mind Question Answer (MoToMQA). It compares five LLMs (LaMDA, PaLM, Flan-PaLM, GPT-3.5, and GPT-4) against human adults. GPT-4 and Flan-PaLM perform at or near adult human levels, and GPT-4 surpasses human performance on sixth-order ToM inferences.

Introduction to Theory of Mind and Literature Context

ToM is a critical aspect of human social intelligence, enabling individuals to reason about others' mental states and predict their behavior. Previous research has reported ToM competencies in LLMs, but most studies focus on second-order ToM. This paper extends the assessment to sixth-order ToM through a novel benchmark, MoToMQA, which consists of true/false questions derived from short-form stories.

Methodology and Benchmark Design

MoToMQA is based on a validated ToM test for adults and comprises seven stories with 20 true/false statements each. Statements are categorized by order (mental-state inferences) or level (factual recall), which separates ToM reasoning from memory for story facts. LLMs were tested under two prompt conditions (human and simplified). For human participants, the study controlled for memory by testing both with and without the story visible while answering.
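The benchmark layout described above can be pictured as a small data structure. The following is a minimal sketch, not the authors' code: the class name `Statement`, its fields, and the helper `is_correct` are hypothetical, and the statement text is a placeholder rather than an item from the benchmark.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class Statement:
    """One true/false item attached to a story (hypothetical schema)."""
    story_id: int                     # which of the seven stories the item belongs to
    kind: Literal["tom", "factual"]   # mental-state inference vs. factual recall
    depth: int                        # "order" for ToM items, "level" for factual items
    text: str                         # the statement shown to the participant or model
    answer: bool                      # ground-truth label


def is_correct(statement: Statement, response: bool) -> bool:
    """Score a single true/false response against the ground truth."""
    return statement.answer == response


# Placeholder item for illustration only; not taken from MoToMQA.
example = Statement(
    story_id=1,
    kind="tom",
    depth=3,
    text="(hypothetical statement text)",
    answer=True,
)
scored = is_correct(example, response=True)
```

Keeping `kind` and `depth` as separate fields makes it straightforward to compare ToM and factual items of matching depth.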

Results and Analysis

ToM Task Performance

A comparison of LLM and human performances revealed significant variations:

- Best Performing Models: GPT-4 and Flan-PaLM performed best, with no significant difference between them. Both significantly outperformed GPT-3.5, PaLM, and LaMDA.

- Human vs. Models: Humans outperformed Flan-PaLM but showed no significant difference from GPT-4, underscoring GPT-4's capability in higher-order ToM.

- Order-Specific Analysis:

  - GPT-4 and Flan-PaLM showed high accuracy across all orders, with performance declining as the order increased.

  - Human performance was significantly higher at fifth order than at fourth order, suggesting that an additional cognitive process may support reasoning at higher orders.
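The order-specific comparison rests on accuracy computed separately for each ToM order. A minimal sketch of that aggregation step follows, assuming responses are available as `(order, correct)` pairs. The function name `accuracy_by_order` and the toy records are invented for illustration and are not results from the paper; the significance tests behind the reported comparisons are not specified in this summary and are not reproduced here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_order(records: Iterable[Tuple[int, bool]]) -> Dict[int, float]:
    """Return the fraction of correct responses for each ToM order."""
    totals: Dict[int, int] = defaultdict(int)
    hits: Dict[int, int] = defaultdict(int)
    for order, correct in records:
        totals[order] += 1
        hits[order] += int(correct)
    return {order: hits[order] / totals[order] for order in sorted(totals)}


# Toy records, made up for illustration (not data from the study).
toy_records = [
    (2, True), (2, True),
    (3, True), (3, False),
    (4, False), (4, True), (4, True), (4, True),
]
per_order = accuracy_by_order(toy_records)
```

The same function can be applied to human and model responses separately, giving per-order accuracies that can then be compared with an appropriate paired test.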

Factual Task Performance

Both GPT-4 and Flan-PaLM excelled at factual recall, significantly outperforming the other models while not differing significantly from human performance. This indicates that the models' learning capacity extends beyond syntactic complexity to comprehension of factual content.

Comparative Analysis

Both humans and LLMs performed better on factual tasks than on ToM tasks, consistent with prior studies. This suggests that ToM reasoning is the harder challenge for models, likely because it requires generalizing social knowledge from pretraining data.

Implications and Future Research

The results suggest that larger, fine-tuned models such as GPT-4 and Flan-PaLM can achieve advanced ToM capabilities. This aligns with the notion of scaling laws, in which model size and fine-tuning play pivotal roles in realizing ToM competencies. Such capabilities also raise ethical concerns, including the potential for manipulation and the need for technical guardrails.

Continued investigations should:

- Expand benchmarks to include multi-lingual and culturally diverse scenarios.

- Extend the testing beyond sixth-order ToM to explore the limits of both human and model capacities.

- Incorporate multimodal signals to better align LLM ToM assessments with real-world human interactions.

In conclusion, the paper presents compelling evidence of the advanced ToM capabilities in state-of-the-art LLMs, particularly GPT-4, and elucidates directions for future research to further understand and ethically leverage these capabilities.