A Survey of Multimodal LLMs from a Data-centric Perspective

The paper "A Survey of Multimodal LLMs from a Data-centric Perspective" presents a comprehensive exploration of Multimodal LLMs (MLLMs) with a focus on the pivotal role of data. The authors systematically review existing literature to shed light on the methodologies for preparing, processing, and evaluating multimodal data, while also outlining potential future research directions. This essay provides an expert overview of the key contributions, findings, and implications of this paper.

Overview of MLLMs and Data-centric AI

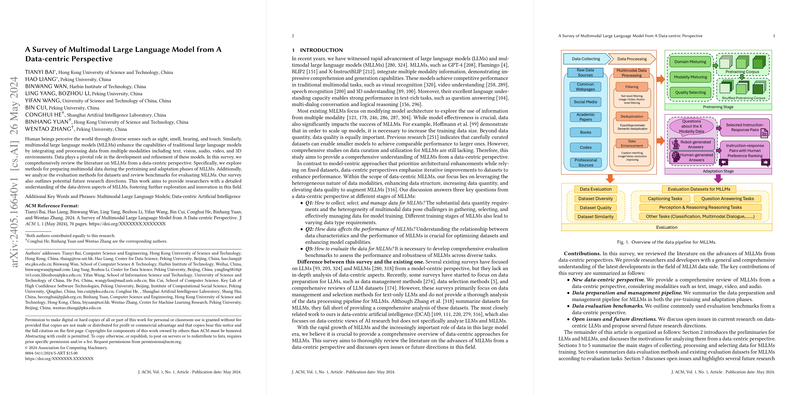

The integration of multimodal data, such as text, images, audio, video, and 3D environments, significantly enhances the capabilities of traditional LLMs. MLLMs like GPT-4, Flamingo, and BLIP2 demonstrate compelling performance across traditional multimodal tasks and exhibit robust language understanding capabilities. In contrast to model-centric approaches that primarily focus on architectural enhancements, a data-centric perspective emphasizes iterative improvements to datasets to enhance performance. This paradigm shift towards data-centric AI is motivated by the recognition that the quality, diversity, and representativeness of training data are instrumental in the success of AI models.

Data Collection and Processing

The paper details various sources for data collection, drawing from common webpages, social media, academic papers, books, and professional domains. A meticulous data curation process is necessary to optimize the datasets:

- Common Webpages: Leveraging large-scale web crawls like CommonCrawl for diverse data ingestion.

- Social Media: Extracting rich multimodal data from platforms like Stack Exchange, Reddit, and YouTube.

- Academic Papers: Utilizing vast corpora like arXiv and S2ORC for high-quality scholarly content.

- Books: Mining extensive libraries like Project Gutenberg and BookCorpus for textual richness.

- Professional Sources: Domain-specific datasets derived from legal, medical, and financial resources.

Data Processing is equally critical. The authors discuss filtering methodologies to eliminate low-quality or irrelevant data and deduplication techniques to minimize redundancy. For instance, textual filtering tools like FastText and LangDetect ensure language consistency, while semantic deduplication leverages embedding models like Sentence-BERT to identify and remove semantically similar content. The enhancement of multimodal datasets involves augmenting textual descriptions and improving image or video quality, as demonstrated by methods like those used in LLaVA-1.5 and BLIVA.

Data-centric Pre-training

The pre-training phase is bifurcated into training the LLM backbone and modality encoders independently, followed by integrating these components using input projectors. The MLLMs are further trained using a domain and modality mixture. For example, the optimal ratio of image-caption pairs and interleaved text documents can enhance both zero-shot and few-shot learning.

The notion of Data Selection improves training efficiency and model performance. Techniques such as active learning-based selection and pre-training selection are crucial. These methods, using metrics like CLIP scores or advanced filters, prioritize high-quality data.

Data-centric Adaptation

Adaptation involves fine-tuning models using multimodal instruction-response datasets. Notable methodologies include:

- Supervised Fine-tuning (SFT): Constructing instruction-response pairs across various downstream tasks—captioning, question answering, reasoning, and classification.

- Data Selection for SFT: Employing coreset-based, gradient-based, and LLMs-based methods to refine datasets, thereby focusing on high-quality instructional data.

- Human Preference Alignment: Reinforcement Learning from Human Feedback (RLHF) models like InstructGPT align responses with human values, ensuring higher accuracy and ethical alignment.

Evaluation of MLLMs

The paper emphasizes data evaluation metrics to assess dataset quality, such as:

- Diversity: Evaluating the range of concepts represented in the dataset.

- Quality: Ensuring the factual accuracy and relevance of data points.

- Similarity: Measuring how well datasets align with the target distribution.

The authors also review benchmark datasets used for evaluating MLLMs. These datasets, tailored for tasks such as captioning, question answering, and reasoning, serve as critical tools for measuring model performance across different dimensions.

Implications and Future Directions

The research highlights significant implications for both theoretical advancements and practical applications of AI. The findings underscore the value of high-quality, well-curated datasets in improving model robustness and generalizability. The paper calls for further studies on optimal data selection strategies, the development of MLLM-specific data processing systems, and an in-depth exploration of scaling laws for data quantity and quality.

Conclusion

In conclusion, the paper provides a thorough survey of MLLMs from a data-centric perspective, offering valuable insights into data collection, processing, pre-training, and adaptation. It underscores the importance of data in shaping the capabilities of MLLMs and sets the stage for future research aimed at optimizing data-centric methodologies in AI. This comprehensive review is an essential reference for researchers aiming to advance the field of multimodal AI.