Parallels Between Induction Heads in Transformer Models and Human Episodic Memory

The paper explores an underexamined connection between attention heads in Transformer models and human episodic memory. It focuses on "induction heads", attention heads that are critical for in-context learning (ICL) in Transformer-based large language models (LLMs), and draws a parallel between them and the Context Maintenance and Retrieval (CMR) model of human episodic memory. The work adds a useful piece to the broader effort to connect artificial and biological intelligence.

Key Findings and Methodology

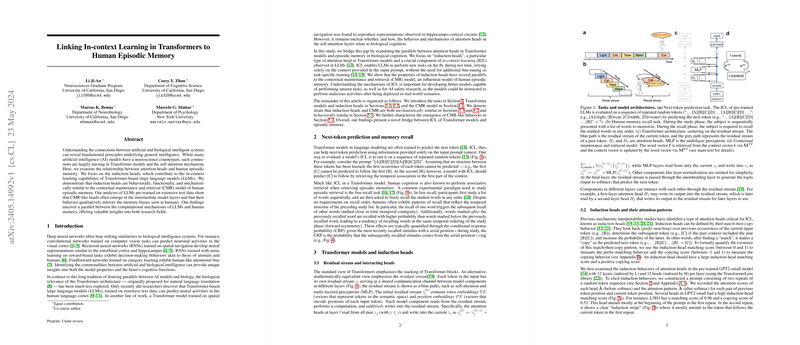

The paper's central goal is to show that induction heads in Transformer models exhibit behavioral and mechanistic similarities to the CMR model. The argument proceeds in three steps:

- Behavioral Parallels: The paper shows that induction heads in LLMs exhibit behaviors similar to those seen in human episodic memory. Specifically, the attention patterns that induction heads use to predict the next token mirror episodic retrieval, showing temporal contiguity (items encountered close together in time tend to be retrieved together) and forward asymmetry (transitions to later items are more likely than transitions to earlier ones). Both effects are well documented in human free-recall studies and are captured by the CMR framework.

- Mechanistic Similarities: Reinterpreting induction heads through the lens of the CMR model, the paper argues that the computation these heads perform resembles CMR's associative retrieval. In particular, K-composition and Q-composition, the mechanisms by which an induction head's keys or queries read the outputs of earlier heads, align with the context and word retrieval operations in CMR.

- Empirical Validation: Analyzing pre-trained models such as GPT-2 and Pythia, the authors show that heads with high induction-head matching scores exhibit attention biases consistent with CMR's account of human memory. A CMR distance metric provides a quantitative measure of how closely each head's behavior resembles CMR.
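To make the behavioral signatures above concrete, here is a minimal Python sketch of a simplified CMR/TCM-style model; it is an illustration, not the paper's fitted model. It assumes one-hot item features and context inputs, context-to-item associations formed with the context that preceded each item, and a softmax retrieval rule. The function name `cmr_lag_crp` and the parameter values (`beta` for context drift, `gamma` for how much of an item's encoding context is reinstated when it is retrieved, `tau` as an inverse temperature) are hypothetical choices. It reports a lag-conditional response probability (lag-CRP): the probability that the next retrieved item lies `lag` positions away from the item just retrieved.

```python
import numpy as np


def cmr_lag_crp(n_items=12, beta=0.6, gamma=0.3, tau=5.0, max_lag=3):
    """Lag-CRP of a simplified CMR/TCM-style model (illustrative sketch).

    Hypothetical assumptions: one-hot item features, one-hot context inputs,
    context-to-item associations formed with the context that preceded each
    item, and softmax retrieval with inverse temperature `tau`.
    """
    dim = n_items + 1                    # extra dimension holds the start-of-list context
    t = np.zeros(dim)
    t[n_items] = 1.0                     # unit-norm initial context
    keys = np.zeros((n_items, dim))      # context preceding each item (rows of M^CF)
    enc_ctx = np.zeros((n_items, dim))   # context right after each item was presented
    for i in range(n_items):
        t_in = np.zeros(dim)
        t_in[i] = 1.0
        dot = t @ t_in
        # rho keeps ||t|| = 1 in the drift rule t_i = rho * t_{i-1} + beta * t_in
        rho = np.sqrt(1.0 + beta**2 * (dot**2 - 1.0)) - beta * dot
        keys[i] = t
        t = rho * t + beta * t_in
        enc_ctx[i] = t

    probs_by_lag = {lag: [] for lag in range(-max_lag, max_lag + 1) if lag != 0}
    for j in range(n_items):
        # Retrieving item j reinstates a mix of its own input and its encoding context
        cue = (1.0 - gamma) * np.eye(dim)[j] + gamma * enc_ctx[j]
        cue /= np.linalg.norm(cue)
        act = keys @ cue                 # cue-to-key similarity for every item
        mask = np.arange(n_items) != j   # exclude repeating the item just retrieved
        logits = tau * act[mask]
        p = np.exp(logits - logits.max())
        p /= p.sum()
        for k, pk in zip(np.arange(n_items)[mask], p):
            lag = int(k - j)
            if lag in probs_by_lag:
                probs_by_lag[lag].append(pk)
    return {lag: float(np.mean(v)) for lag, v in probs_by_lag.items()}


if __name__ == "__main__":
    for lag, p in sorted(cmr_lag_crp().items()):
        print(f"lag {lag:+d}: {p:.3f}")
```

In this setup, forward transitions come from the retrieved item's own input, which persists in the context of every later item, while backward transitions come only from the reinstated encoding context. With `gamma=0.0`, every backward lag receives the same activation, so the backward side of the curve loses its contiguity gradient.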

Implications and Future Directions

The research has implications for both AI and neuroscience. For AI, the parallels invite a reinterpretation of ICL mechanisms in LLMs, suggesting that these models may rely on processes analogous to human episodic memory when predicting the next token. Such insights could inform the design of models with stronger in-context learning and safer behavior.

For neuroscience, the findings may help clarify how the hippocampal and cortico-hippocampal systems support similar computations. That episodic memory biases re-emerge in artificial networks points to normative principles underlying those biases, which can guide the modeling of human cognitive function.

Practical Insight: Induction heads appear predominantly in the intermediate layers of Transformer models, a pattern observed in both GPT-2 and Pythia. This localization could inform architecture design for language tasks and potentially for other cognitive functions.
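Locating induction heads layer by layer relies on a per-head score computed on repeated random tokens. The sketch below is a hedged NumPy illustration, not the paper's code. It assumes attention weights have already been extracted into an array of shape `(n_layers, n_heads, seq, seq)` (for instance from a TransformerLens activation cache), that the prompt is one random block repeated twice with no beginning-of-sequence token, and that a head's score is its mean attention from each second-repeat token to the token that followed that token's first occurrence. The helper names and the 0.4 threshold are hypothetical, and synthetic attention patterns stand in for a real model's.

```python
import numpy as np


def repeated_random_tokens(seq_half, vocab_size, seed=0):
    """One block of random token ids, repeated twice (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    block = rng.integers(0, vocab_size, size=seq_half)
    return np.concatenate([block, block])


def induction_scores(patterns, seq_half):
    """Mean attention from each second-repeat position to the position right
    after that token's first occurrence; returns shape (n_layers, n_heads)."""
    queries = np.arange(seq_half, 2 * seq_half)  # positions in the second repeat
    targets = queries - seq_half + 1             # token that followed the earlier copy
    return patterns[:, :, queries, targets].mean(axis=-1)


def layers_with_induction_heads(scores, threshold=0.4):
    """Layer indices containing at least one head scoring above `threshold`."""
    return [int(i) for i in np.flatnonzero((scores > threshold).any(axis=1))]


# Synthetic demo: uniform causal attention everywhere except one planted head.
n_layers, n_heads, half = 6, 4, 20
seq = 2 * half
# These tokens would be fed to a model to obtain real attention patterns.
tokens = repeated_random_tokens(half, vocab_size=50257)  # GPT-2 vocabulary size
causal = np.tril(np.ones((seq, seq)))
causal /= causal.sum(axis=1, keepdims=True)              # each row sums to 1
patterns = np.broadcast_to(causal, (n_layers, n_heads, seq, seq)).copy()

ideal = causal.copy()
q = np.arange(half, seq)
ideal[q] = 0.0
ideal[q, q - half + 1] = 1.0  # idealized induction head placed at layer 3, head 1
patterns[3, 1] = ideal

scores = induction_scores(patterns, half)
print(layers_with_induction_heads(scores))  # [3]
```

With a real model, a beginning-of-sequence token would shift every query and target index by one, and the threshold would need to be chosen from the observed score distribution rather than fixed in advance.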

Theoretical Insight: The persistence of CMR-like behavior as training progresses suggests that attention mechanisms in LLMs may evolve toward memory recall strategies resembling the optimal strategies proposed for human cognition.

Speculative Future Developments: Future research may test whether these findings generalize to other Transformer-based models and to natural language settings. Additionally, studying the "lost in the middle" phenomenon, in which LLMs make less use of information placed in the middle of a long context than at its beginning or end, could reveal how deeper Transformers handle long-range dependencies; the phenomenon echoes the primacy and recency effects known from human memory.

Limitations

The paper tests induction behavior on sequences of repeated random tokens, which may miss important properties of natural language. Furthermore, it remains uncertain whether CMR can serve as a genuinely mechanistic model of head behavior in very large Transformer models. Further research should examine other Transformer variants to test the robustness of these findings.

Conclusion

By establishing a bridge between the CMR model and induction heads in LLMs, the paper enriches our understanding of both artificial and biological systems. The alignment of Transformer attention mechanisms with human episodic memory models opens new pathways for cross-disciplinary research in AI and neuroscience, ultimately pushing towards more advanced and cognitively plausible models of intelligence.