Comprehensive Overview of "Getting More from Less: LLMs are Good Spontaneous Multilingual Learners"

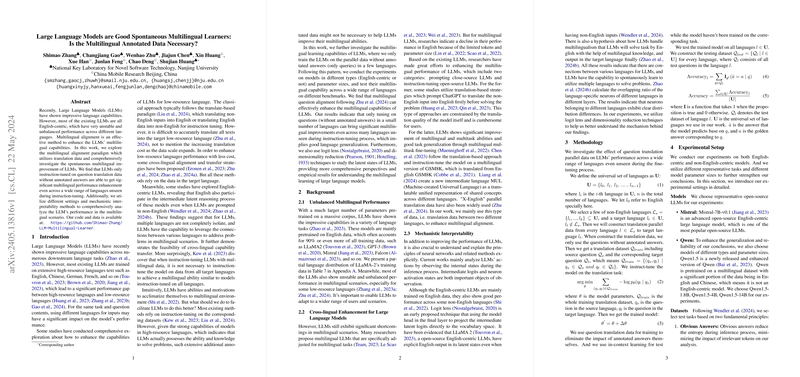

"Getting More from Less: LLMs are Good Spontaneous Multilingual Learners" by Zhang et al. investigates the spontaneous multilingual learning ability of large language models (LLMs). The central finding is that instruction-tuning an LLM on question translation data, i.e. parallel questions with no annotated answers, substantially improves multilingual alignment, including for languages that never appear in the tuning data. This points to an inexpensive way to draw on LLMs' latent multilingual ability in both high-resource and low-resource language settings.
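To make the training signal concrete, here is a minimal Python sketch of how parallel questions might be turned into instruction-tuning pairs. The prompt template, the translation direction (German to English here), and the names `TEMPLATE`, `Example`, and `build_question_alignment_data` are hypothetical; the paper's exact data format is not reproduced. The property it illustrates is that neither the prompt nor the target contains a task answer.

```python
from dataclasses import dataclass

# Hypothetical instruction template (an assumption, not the paper's wording).
TEMPLATE = "Translate the following question from {src} to {tgt}.\n{question}"


@dataclass
class Example:
    prompt: str
    target: str


def build_question_alignment_data(parallel, src_lang, tgt_lang):
    """Turn parallel questions into instruction-tuning pairs.

    `parallel` is a list of dicts mapping a language name to a question.
    Only questions are used: no task answers appear in prompt or target.
    """
    examples = []
    for row in parallel:
        if src_lang not in row or tgt_lang not in row:
            continue  # skip rows that lack either side of the pair
        examples.append(
            Example(
                prompt=TEMPLATE.format(src=src_lang, tgt=tgt_lang, question=row[src_lang]),
                target=row[tgt_lang],
            )
        )
    return examples


# Toy parallel data; the second row has no German side and is skipped.
parallel = [
    {"English": "Is the review positive?", "German": "Ist die Bewertung positiv?"},
    {"English": "Do these sentences mean the same thing?"},
]
data = build_question_alignment_data(parallel, "German", "English")
```

The resulting pairs could then be fed to any standard supervised fine-tuning loop; the design choice that matters is that the supervision is purely a translation of the question.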

Core Contributions

- Investigating Spontaneous Multilingual Alignment:

- The authors ask whether LLMs can improve cross-lingual performance through instruction-tuning on parallel question translation data. Their experiments show that such training substantially improves the alignment between English and many other languages, including languages absent from the training data.

- Evaluation across Multiple Benchmarks and Models:

- The experiments cover both English-centric models (e.g., Mistral-7B) and non-English-centric models (e.g., Qwen1.5). Improvements are consistent across benchmarks including Amazon Reviews Polarity, SNLI, and PAWS, which supports the generality of the findings across model types and tasks.

- Mechanistic Interpretability Analysis:

- Using the logit lens and principal component analysis (PCA), the paper analyzes how LLMs' internal representations change before and after instruction tuning. The logit lens decodes intermediate-layer hidden states through the model's output head to show which tokens, and hence which language, each layer favors; PCA visualizes how representations of different languages cluster. Together, these analyses characterize the improved cross-lingual alignment and generalization.
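Both analysis techniques can be illustrated on toy arrays. The NumPy sketch below is a generic illustration, not the paper's analysis code: hand-picked or random matrices stand in for a real model's per-layer hidden states, final RMSNorm weights, and unembedding matrix, and all helper names are hypothetical. `logit_lens` decodes each layer's hidden state through the final norm and output head; `pca_2d` projects representations onto their top two principal components, where the distance between language centroids gives one rough measure of how far apart two languages sit.

```python
import numpy as np


def rms_norm(x, weight, eps=1e-6):
    # RMSNorm over the last axis, the normalization used in Mistral/Qwen-style decoders.
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps) * weight


def logit_lens(hidden_states, norm_weight, unembed, vocab):
    """Decode each layer's hidden state with the final norm and unembedding.

    hidden_states: (num_layers, d_model), e.g. the last token position per layer
    unembed:       (d_model, vocab_size)
    Returns the top-1 token predicted from each layer.
    """
    logits = rms_norm(hidden_states, norm_weight) @ unembed
    return [vocab[i] for i in logits.argmax(axis=-1)]


def pca_2d(reps):
    """Project representations (n, d) onto their top-2 principal components."""
    centered = reps - reps.mean(axis=0, keepdims=True)
    # Rows of vt are the principal axes of the centered data.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


def centroid_distance(points, labels, a, b):
    # Euclidean distance between the mean projected points of languages a and b.
    labels = np.asarray(labels)
    return float(np.linalg.norm(points[labels == a].mean(axis=0) - points[labels == b].mean(axis=0)))


# Toy logit lens: three "layers", a 3-token vocabulary, identity unembedding.
vocab = ["en", "zh", "de"]
layers = np.array([[0.1, 2.0, 0.0], [1.0, 1.5, 0.0], [3.0, 0.5, 0.0]])
top_tokens = logit_lens(layers, np.ones(3), np.eye(3), vocab)  # one token per layer

# Toy PCA: two synthetic "language" clusters in an 8-dimensional space.
rng = np.random.default_rng(0)
en = rng.normal(0.0, 1.0, size=(20, 8))
sw = rng.normal(4.0, 1.0, size=(20, 8))
points = pca_2d(np.vstack([en, sw]))
labels = ["en"] * 20 + ["sw"] * 20
gap = centroid_distance(points, labels, "en", "sw")
```

On a real model, the hidden states could be collected with Hugging Face Transformers' `output_hidden_states=True` and the projections compared before and after tuning; closer language clusters after tuning would be consistent with better alignment, though the paper's exact protocol may differ.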

Experimental Framework

Models and Datasets

- The authors evaluate several LLMs, including the English-centric Mistral-7B and the multilingual Qwen1.5.

- The experiments use established datasets: Amazon Reviews Polarity for sentiment (emotion) classification, SNLI for natural language inference, and PAWS for paraphrase identification. Covering three distinct task types makes it less likely that the results are specific to a single task.
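Because all three are classification tasks, a model's free-form answers must be mapped back to labels. The sketch below shows one common way to do this; the templates, the `TEMPLATES` table, and the label index order are assumptions for illustration (check the order against the dataset version you use), not the prompts used in the paper.

```python
import re

# Hypothetical zero-shot templates and label lists (assumptions, not the paper's prompts).
TEMPLATES = {
    "amazon_polarity": (
        "Review: {text}\nIs the sentiment of this review positive or negative?",
        ["negative", "positive"],
    ),
    "snli": (
        "Premise: {premise}\nHypothesis: {hypothesis}\n"
        "Answer entailment, neutral, or contradiction.",
        ["entailment", "neutral", "contradiction"],
    ),
    "paws": (
        "Sentence 1: {sentence1}\nSentence 2: {sentence2}\n"
        "Are these two sentences paraphrases? Answer yes or no.",
        ["no", "yes"],
    ),
}


def build_prompt(task, example):
    """Fill the task template with the fields of one example."""
    template, _ = TEMPLATES[task]
    return template.format(**example)


def parse_label(task, generation):
    """Map a generation to a label index, or -1 if no label word appears.

    Matches whole words only and picks the label mentioned earliest.
    """
    _, labels = TEMPLATES[task]
    text = generation.lower()
    hits = []
    for idx, label in enumerate(labels):
        match = re.search(rf"\b{re.escape(label)}\b", text)
        if match:
            hits.append((match.start(), idx))
    return min(hits)[1] if hits else -1
```

Whole-word matching avoids counting "no" inside words like "not" or "know"; returning -1 lets unparseable answers be scored as wrong rather than silently assigned a label.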

Language Selection

- The study evaluates performance on 20 languages, spanning high-resource languages (e.g., English, Chinese, German) and low-resource languages (e.g., Swahili, Hindi, Bengali). This range of resource levels lets the evaluation probe multilingual ability beyond the languages best covered in pretraining.
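Results over many languages are often summarized by resource level. A minimal sketch, assuming a hypothetical split of ISO 639-1 codes into high- and low-resource groups (only the example languages named above; the paper's full 20-language grouping is not reproduced) and made-up placeholder scores that are not results from the paper:

```python
from statistics import mean

# Hypothetical grouping using only the example languages named in the text.
HIGH_RESOURCE = {"en", "zh", "de"}
LOW_RESOURCE = {"sw", "hi", "bn"}


def accuracy(preds, golds):
    """Fraction of predictions that match the gold labels."""
    if len(preds) != len(golds) or not golds:
        raise ValueError("need equal-length, non-empty prediction and gold lists")
    return sum(p == g for p, g in zip(preds, golds)) / len(golds)


def group_averages(per_lang_acc):
    """Average per-language accuracy within the high- and low-resource groups."""
    out = {}
    for name, group in (("high", HIGH_RESOURCE), ("low", LOW_RESOURCE)):
        scores = [acc for lang, acc in per_lang_acc.items() if lang in group]
        out[name] = mean(scores) if scores else float("nan")
    return out


# Made-up placeholder scores for illustration only (not results from the paper).
toy_scores = {"en": 0.75, "zh": 0.5, "sw": 0.25, "hi": 0.5}
summary = group_averages(toy_scores)
```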

Key Findings

- Effectiveness of Question Alignment:

- Instruction-tuning LLMs on multilingual question translation data (without answers) substantially improves their performance across many languages, including those unseen during tuning, which indicates strong cross-lingual generalization and alignment.

- Role of High-Resource Languages:

- Training on high-resource languages improves not only their own performance but also yields stable gains across many other languages. This suggests that high-resource languages can play a leading role in multilingual transfer.

- Generalization across Different Model Scales:

- The findings remain consistent across models of different sizes, from the 1.8B-parameter Qwen1.5 to the 7B-parameter Mistral, suggesting that the approach is not tied to a particular model scale.
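One way to check the "unseen languages" finding on your own runs is to compare per-language accuracy before and after tuning and split the gains by whether each language appeared in the tuning data. The function names and toy scores below are hypothetical placeholders, not figures from the paper.

```python
import math


def per_language_gains(base, tuned):
    """Accuracy change (tuned minus base) for every language scored in both runs."""
    return {lang: tuned[lang] - base[lang] for lang in base if lang in tuned}


def _mean(values):
    values = list(values)
    return sum(values) / len(values) if values else math.nan


def summarize_gains(gains, trained_langs):
    """Compare gains on languages seen during tuning with gains on unseen ones."""
    seen = [g for lang, g in gains.items() if lang in trained_langs]
    unseen = [g for lang, g in gains.items() if lang not in trained_langs]
    return {
        "seen_mean": _mean(seen),
        "unseen_mean": _mean(unseen),
        "unseen_improved": sum(g > 0 for g in unseen),  # count of unseen languages that improved
        "unseen_total": len(unseen),
    }


# Toy placeholder accuracies (not results from the paper).
base = {"en": 0.75, "zh": 0.5, "sw": 0.25, "bn": 0.5}
tuned = {"en": 0.875, "zh": 0.75, "sw": 0.5, "bn": 0.375}
gains = per_language_gains(base, tuned)
report = summarize_gains(gains, trained_langs={"en", "zh"})
```

Reporting the count of improved unseen languages alongside the mean guards against a single large gain masking regressions elsewhere.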

Implications and Future Directions

Practical Implications

- Enhanced Multilingual Applications:

- The results suggest that a small amount of data consisting only of translated questions can substantially boost LLMs' cross-lingual performance. This could directly benefit applications that need multilingual support, such as global customer service and multilingual virtual assistants.

- Efficient Model Training:

- Tuning on a small set of high-resource languages to improve performance across many languages could reduce the data and compute needed to train capable multilingual models.

Theoretical Implications

- Superficial Alignment Hypothesis:

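- The improvements are consistent with the "Superficial Alignment Hypothesis," which holds that an LLM's knowledge and capabilities are learned almost entirely during pretraining, while alignment mainly teaches the model which subdistribution of formats to use. Under this view, question-translation tuning does not teach new multilingual knowledge; it activates knowledge already latent in the model, so learning a shared format in a few languages can carry over to many others.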

- Language Generalization:

- The paper provides evidence of strong inherent multilingual generalization in LLMs, which invites further theoretical work on the mechanisms behind this spontaneous learning ability.

Conclusion

The paper by Zhang et al. makes a significant contribution to understanding and improving the multilingual capabilities of LLMs. By showing that instruction-tuning on question translation data, without any annotated answers, effectively improves multilingual alignment, the authors point toward more data-efficient and scalable multilingual models. Their mechanistic analyses also deepen our understanding of how LLMs handle multiple languages, providing a basis for future research and practical applications in this area.