Do LLMs Learn Like Humans in Decision-Making Tasks?

Introduction

LLMs like GPT-3.5 and GPT-4 have shown an impressive range of abilities, from language translation to problem-solving. Among these abilities is in-context learning, where a model learns to perform a new task just by observing examples in its prompt, with no change to its weights. This paper dives into how LLMs handle decision-making tasks, particularly ones that can be framed as reinforcement learning (RL) problems. The focus is on whether these models exhibit human-like biases when encoding rewards and using them to make decisions.

Experiment Setup: The Bandit Tasks

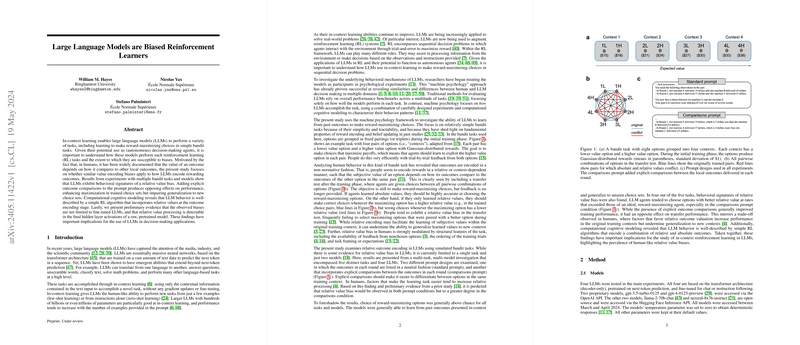

To probe the decision-making abilities of LLMs, the researchers employed so-called bandit tasks. These tasks involve making choices from a set of options where each choice results in a reward. The goal is to maximize the cumulative reward over time. Think of it like choosing a slot machine to play out of a set of slot machines to get the maximum payout.
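To make the slot machine analogy concrete, here is a minimal sketch of a bandit task in Python. It assumes a simple Bernoulli bandit (each option pays 1 point with a fixed probability, otherwise 0); the paper's five tasks used their own reward structures, so the function names, payout probabilities, and policies here are illustrative only.

```python
import random

def run_bandit(reward_probs, n_trials, choose, seed=0):
    """Play a Bernoulli bandit: arm i pays 1 with probability reward_probs[i], else 0."""
    rng = random.Random(seed)
    history = []  # (arm, reward) pairs observed so far
    total = 0
    for _ in range(n_trials):
        arm = choose(history, len(reward_probs), rng)
        reward = 1 if rng.random() < reward_probs[arm] else 0
        history.append((arm, reward))
        total += reward
    return total, history

def random_policy(history, n_arms, rng):
    """Baseline: ignore feedback and pick an arm uniformly at random."""
    return rng.randrange(n_arms)

def greedy_policy(history, n_arms, rng):
    """Try every arm once, then pick the arm with the best average payout so far."""
    totals = [0] * n_arms
    counts = [0] * n_arms
    for arm, reward in history:
        totals[arm] += reward
        counts[arm] += 1
    for arm in range(n_arms):
        if counts[arm] == 0:
            return arm
    return max(range(n_arms), key=lambda a: totals[a] / counts[a])

if __name__ == "__main__":
    probs = [0.3, 0.7]  # hypothetical payout probabilities
    for policy in (random_policy, greedy_policy):
        total, _ = run_bandit(probs, 100, policy)
        print(policy.__name__, total)
```

In the experiments the decision-maker is the LLM itself, so the `choose` function would be replaced by a call that shows the model the history and reads back its choice.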

Here's how the experiment was set up:

- Models Tested: The researchers tested four popular LLMs: two proprietary models (GPT-3.5-turbo-0125 and GPT-4-0125-preview) and two open-source models (Llama-2-70b-chat and Mixtral-8x7b-instruct).

- Tasks: Five different bandit tasks were used. Each task had different structural features, like how rewards were distributed and the grouping of options.

- Prompt Designs: Two types of prompts were tested: one listing outcomes in a neutral manner (standard prompt) and another adding explicit comparisons between outcomes (comparisons prompt).

Figure 1 in the paper illustrates an example of these bandit tasks with different contexts and prompt designs.
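The difference between the two prompt designs can be sketched in a few lines of Python. The wording below is hypothetical and is not the paper's actual prompt text; it also assumes that each trial reports the outcomes of two options, so that there is something to compare.

```python
def format_trial(trial, outcomes, with_comparison=False):
    """Describe one trial; `outcomes` maps two option names to the points each paid."""
    (a, pa), (b, pb) = outcomes.items()
    line = f"Trial {trial}: option {a} gave {pa} points; option {b} gave {pb} points."
    if with_comparison:
        # The comparisons prompt adds an explicit statement of which option did better.
        if pa == pb:
            line += f" Options {a} and {b} gave the same number of points."
        else:
            better, worse = (a, b) if pa > pb else (b, a)
            line += f" Option {better} gave {abs(pa - pb)} more points than option {worse}."
    return line

def build_prompt(history, with_comparison=False):
    """Assemble the full prompt from a list of per-trial outcome dicts."""
    lines = ["You are choosing between options to earn as many points as possible."]
    lines += [format_trial(i, o, with_comparison) for i, o in enumerate(history, start=1)]
    lines.append("Which option do you choose next?")
    return "\n".join(lines)

if __name__ == "__main__":
    history = [{"A": 10, "B": 4}, {"A": 3, "B": 7}]  # made-up outcomes
    print(build_prompt(history))                        # standard prompt
    print(build_prompt(history, with_comparison=True))  # comparisons prompt
```

Both prompts carry the same information; the comparisons version only makes the difference between outcomes explicit.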

Main Findings

Choice Accuracy

The researchers measured how well the LLMs performed in both the training phase (where feedback was provided) and the transfer test phase (where no feedback was given). Key observations include:

- Training Phase: The comparisons prompt generally led to higher accuracy, suggesting that explicit comparisons helped models learn better within the training context.

- Transfer Test: Interestingly, the comparisons prompt actually reduced accuracy, indicating a trade-off between learning well in the initial context and generalizing to new contexts.
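As a rough illustration of the accuracy measure, the sketch below scores a choice as correct when it picks the offered option with the higher expected value. That definition of "correct" is an assumption made for this example, and the option names and values are invented.

```python
def choice_accuracy(choices, expected_values):
    """Fraction of trials where the chosen option had the highest expected value.

    choices: list of (chosen_option, offered_options) pairs.
    expected_values: dict mapping option name to its expected payout.
    """
    correct = 0
    for chosen, offered in choices:
        best = max(offered, key=lambda name: expected_values[name])
        correct += chosen == best
    return correct / len(choices)

if __name__ == "__main__":
    expected_values = {"A": 2, "B": 1, "C": 10, "D": 8}  # hypothetical
    choices = [
        ("A", ("A", "B")),  # correct: A beats B
        ("A", ("A", "D")),  # incorrect: D beats A
        ("C", ("C", "D")),  # correct: C beats D
        ("B", ("A", "B")),  # incorrect: A beats B
    ]
    print(choice_accuracy(choices, expected_values))
```

The same function applies to both phases: training trials pair options from the same context, while transfer trials can pair options that were never seen together.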

Relative Value Bias

The paper's central finding is that LLMs display what’s known as a relative value bias. Like humans, the models tended to favor options that had paid out well relative to the alternatives in their training context, even when those options were not the best in an absolute sense.

- LLMs’ Preference: The models were biased towards relative value, and more strongly so with the comparisons prompt. For example, options that gave better local outcomes were favored even when they weren't the optimal choice.

- Human-Like Bias: This reflects a human-like tendency where subjective rewards depend more on local context than absolute values, possibly leading to sub-optimal decisions in new contexts.
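A small worked example shows how relative encoding can mislead in a new context. The values are made up, and range normalization within each context is only one possible way to express "relative value"; it is used here as an assumption, not as the paper's exact formulation.

```python
def relative_values(contexts):
    """Rescale each option's value to [0, 1] within its own training context."""
    rel = {}
    for options in contexts.values():
        lo, hi = min(options.values()), max(options.values())
        for name, value in options.items():
            rel[name] = (value - lo) / (hi - lo) if hi > lo else 0.5
    return rel

def prefer(values, first, second):
    """Return whichever of two options has the higher stored value."""
    return first if values[first] >= values[second] else second

if __name__ == "__main__":
    # Hypothetical training contexts: a low-stakes pair and a high-stakes pair.
    contexts = {"low": {"A": 2.0, "B": 1.0}, "high": {"C": 10.0, "D": 8.0}}
    absolute = {name: v for opts in contexts.values() for name, v in opts.items()}
    relative = relative_values(contexts)

    # Transfer test: A (best of the low context) vs D (worst of the high context).
    print("absolute values prefer:", prefer(absolute, "A", "D"))
    print("relative values prefer:", prefer(relative, "A", "D"))
```

Option D pays four times as much as option A, yet a learner that only remembers "A was the better one in its pair, D was the worse one in its pair" picks A. This is the kind of sub-optimal transfer choice the relative value bias produces.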

Computational Modeling

To further understand the underlying behavior, the researchers used computational cognitive models. They created models that combined both relative and absolute value signals. The winning models generally included:

- Relative Encoding: Incorporating both the absolute value of rewards and their relative standing compared to other options.

- Confirmation Bias: The models updated their expectations differently based on whether the outcome confirmed or disconfirmed prior beliefs, mirroring a kind of confirmation bias seen in humans.
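The two ingredients above can be sketched as a small value-learning rule. This is an illustrative simplification under stated assumptions, not the authors' fitted model: the mixing weight `w`, the two learning rates, the range normalization, and the assumption that rewards are already scaled to [0, 1] are all choices made for this example.

```python
def subjective_reward(reward, context_rewards, w):
    """Blend absolute and relative reward; w=0 is purely absolute, w=1 purely relative.

    Assumes rewards are already scaled to [0, 1] so the two terms are comparable.
    """
    lo, hi = min(context_rewards), max(context_rewards)
    relative = (reward - lo) / (hi - lo) if hi > lo else 0.5
    return w * relative + (1 - w) * reward

def update(q, reward, chosen, alpha_conf, alpha_disc):
    """Delta-rule update with a different learning rate for confirming evidence.

    Evidence confirms the choice when the chosen option does better than expected
    or the unchosen option does worse than expected.
    """
    delta = reward - q
    confirming = delta > 0 if chosen else delta < 0
    alpha = alpha_conf if confirming else alpha_disc
    return q + alpha * delta

if __name__ == "__main__":
    # One trial in a context whose two options paid 0.1 and 0.2 (hypothetical).
    r = subjective_reward(0.2, [0.1, 0.2], w=0.5)
    q_chosen = update(0.5, r, chosen=True, alpha_conf=0.4, alpha_disc=0.1)
    print(r, q_chosen)
```

With `alpha_conf` larger than `alpha_disc`, good news about the chosen option moves its value more than bad news does, which is the asymmetry the confirmation bias bullet describes.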

Hidden States Analysis

Further evidence came from examining a pretrained, non-fine-tuned model (Gemma-7b). This model also showed relative value bias, indicating that the bias is not necessarily introduced during fine-tuning and may instead originate in the way these models are pretrained on massive datasets.

Implications and Future Directions

The paper offers several important takeaways and sets the stage for future research:

- Decision-Making Applications: The finding that LLMs exhibit human-like biases needs to be taken into account when deploying these models in real-world decision-making scenarios.

- Fine-Tuning vs. Pretraining: Because biases can appear even in pretrained models, mitigation strategies that go beyond fine-tuning need attention.

- Mitigation Strategies: Future research should explore methods to counteract these biases, potentially through different prompting strategies or architectural changes.

Exploring the intricate behavior of LLMs not only aids in improving their performance in various tasks but also deepens our understanding of how these models compare to human cognition.

Final Thoughts

The paper sheds light on the nuanced behavior of LLMs in reinforcement learning tasks, emphasizing the importance of understanding and potentially mitigating human-like biases for better autonomous decision-making. As LLMs continue to evolve, keeping an eye on such findings will be crucial to responsibly harness their capabilities.