Scaling Multimodal Models with Mixture of Experts: An Exploration of Uni-MoE

Background and Motivation

When it comes to Multimodal LLMs (MLLMs), one key question looms large: how can these models be scaled efficiently without ramping up computational costs? Recent advances in this space have shown that larger models and more data significantly boost performance, but they come with a challenge: heavy computational overhead during both training and inference.

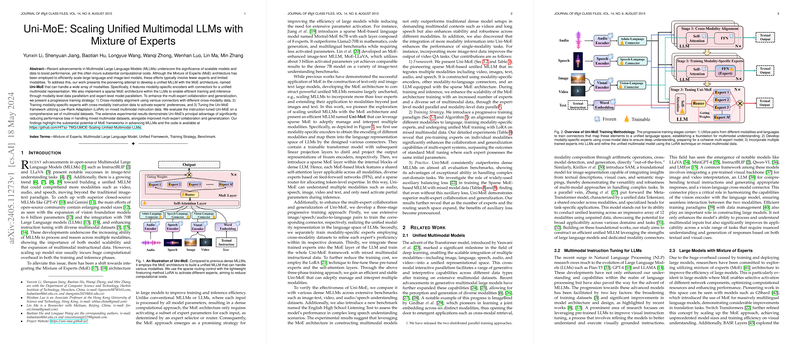

The Mixture of Experts (MoE) architecture has already been used to scale LLMs and image-text models. However, its application to a unified multimodal setting, one that goes beyond image and text to include audio, speech, video, and more, had remained relatively unexplored. That's where Uni-MoE steps in, as a pioneering attempt to address this gap.

The Architecture: How Uni-MoE Works

Uni-MoE pairs modality-specific encoders with connectors that map their outputs into a unified language representation space. Sparse MoE layers are built into the LLM backbone itself, enabling efficient training and inference. Here's a simplified breakdown of its components:

- Modality-Specific Encoders: These specialized modules turn raw inputs such as images, audio clips, speech, and videos into feature representations.

- Connectors: These trainable modules map each modality's encodings into a common representation space that an LLM can consume.

- Sparse MoE Layers: Within the core LLM, a sparse router activates only a handful of experts for each token, so only a fraction of the model's parameters does work on any given input.
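To make the data flow concrete, here is a minimal, dependency-free sketch of the two ideas above: a connector that projects encoder features into the LLM's embedding space, followed by a top-k sparse MoE layer that routes each token to only k experts. This is an illustration of the general technique, not Uni-MoE's implementation: the linear connector, the single-matrix "experts", the toy sizes, and all names (`connector`, `SparseMoE`, `TOP_K`, ...) are hypothetical, and the paper's actual router, expert structure, and k may differ.

```python
import math
import random

# Toy sizes (hypothetical); real models use hidden sizes in the thousands.
D_MODEL = 4      # hidden size of the LLM backbone
NUM_EXPERTS = 4  # expert networks in one MoE layer
TOP_K = 2        # experts activated per token


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def connector(features, w_proj):
    """Hypothetical connector: a linear projection that maps each encoded
    token from an encoder's feature space into the LLM's embedding space."""
    return [matvec(w_proj, f) for f in features]


class SparseMoE:
    """Top-k MoE layer: a linear router scores all experts for each token,
    only the k highest-scoring experts run, and their outputs are combined
    with softmax weights computed over the selected experts' scores."""

    def __init__(self, d, num_experts, k, rng):
        self.k = k
        self.router = rand_matrix(num_experts, d, rng)
        # Each expert is a single linear map here; real experts are FFNs.
        self.experts = [rand_matrix(d, d, rng) for _ in range(num_experts)]

    def forward_token(self, x):
        logits = matvec(self.router, x)
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.k]
        gates = softmax([logits[i] for i in top])
        out = [0.0] * len(x)
        for gate, i in zip(gates, top):  # unselected experts are never evaluated
            y = matvec(self.experts[i], x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, top

    def forward(self, tokens):
        return [self.forward_token(t) for t in tokens]


rng = random.Random(0)
# Stand-in for an image encoder's output: 3 tokens with 6 features each.
image_feats = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(3)]
tokens = connector(image_feats, rand_matrix(D_MODEL, 6, rng))
moe = SparseMoE(D_MODEL, NUM_EXPERTS, TOP_K, rng)
for out, chosen in moe.forward(tokens):
    print("experts used:", chosen, "output:", [round(v, 3) for v in out])
```

The key design point is in `forward_token`: the router is evaluated for every expert, but only the `TOP_K` selected experts are actually run, which is where the compute savings of sparse MoE come from.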

Training Strategy

Uni-MoE is trained with a progressive, three-stage strategy:

- Cross-Modality Alignment: This stage focuses on training the connectors to map different modalities into a unified language space.

- Specialized Expert Training: Each modality-specific expert is trained on relevant cross-modal datasets to refine its capabilities.

- Unified MoE Training: Finally, the MoE layers and sparse router are fine-tuned with Low-Rank Adaptation (LoRA) on mixed multimodal instruction datasets.
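The three stages above can be summarized as a schedule of which parts of the model are trainable, together with the LoRA update used in the last stage. The sketch below is hypothetical: the module-group names and the rule that everything not listed is frozen are assumptions for illustration, and the paper's exact recipe may differ. `LoRALinear` implements standard LoRA: a frozen weight W plus a trainable low-rank product B·A scaled by alpha / r, with B initialized to zero so fine-tuning starts from the original layer's behavior.

```python
import random


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


# Hypothetical module groups; the names are illustrative, not Uni-MoE's own.
ALL_GROUPS = {"encoders", "connectors", "llm_backbone", "experts", "router", "expert_lora"}
STAGE_TRAINABLE = {
    1: {"connectors"},              # cross-modality alignment
    2: {"experts"},                 # modality-specific expert training
    3: {"router", "expert_lora"},   # unified MoE training with LoRA
}


def frozen_groups(stage):
    """Assumption: every group not listed as trainable in a stage stays frozen."""
    return ALL_GROUPS - STAGE_TRAINABLE[stage]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update:
    y = W x + (alpha / r) * B (A x), with A of shape r x d_in and B of shape
    d_out x r. B starts at zero, so the layer initially behaves exactly like W."""

    def __init__(self, w, r, alpha, rng):
        self.w = w  # frozen base weight, never updated
        d_out, d_in = len(w), len(w[0])
        self.scale = alpha / r
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]

    def __call__(self, x):
        base = matvec(self.w, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_count(self):
        """Only A and B are trained: r * (d_in + d_out) values."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


layer = LoRALinear([[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]], r=1, alpha=2.0, rng=random.Random(0))
print(layer([1.0, 2.0, 3.0]))   # [7.0, -1.0]: identical to W x while B is zero
print(sorted(frozen_groups(3)))
```

Even in this tiny example, LoRA trains 5 values instead of the 6 in W; at LLM scale, with r much smaller than the layer width, the saving is far larger, which is what makes the final mixed-data stage affordable.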

Experimental Results and Key Insights

Uni-MoE's effectiveness was put to the test across various multimodal datasets, encompassing tasks related to images, videos, audio, and long-form speech understanding. Here are some headline results:

- Speech-Image Understanding: Uni-MoE achieved notable improvements over existing models like Macaw-LLM and X-InstructBLIP, especially in long speech understanding tasks, highlighting its robustness.

- Audio-Text Tasks: It surpassed state-of-the-art models by a substantial margin in tasks like ClothoAQA, demonstrating its capability to handle audio data.

- Image-Text Understanding: While dense models often perform well on image-text tasks, Uni-MoE still holds its own, delivering competitive performance.

Implications

The potential implications of Uni-MoE are noteworthy:

- Efficiency: Because sparse routing activates only a few experts per token, the compute spent on each token grows much more slowly than the total parameter count, making it more feasible to scale up multimodal models without a proportional increase in training and inference cost.

- Versatility: The ability to handle a range of modalities efficiently positions Uni-MoE as a flexible foundation for future multimodal models.

- Stability and Generalization: The progressive training strategy helps Uni-MoE reduce performance bias across different tasks and mixed multimodal data, improving overall stability and robustness.
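The efficiency argument comes down to simple arithmetic: every expert counts toward the total parameters, but each token only uses the shared parameters plus its top-k experts. The helper below computes both numbers; the sizes are hypothetical, chosen only to illustrate the ratio, and are not Uni-MoE's actual configuration. Per-token compute roughly tracks the active count, not the total.

```python
def moe_param_counts(shared, per_expert, num_experts, top_k):
    """Return (total, active-per-token) parameter counts for a sparse MoE model.

    shared: parameters every token uses (attention, embeddings, ...).
    per_expert: parameters in one expert, summed over all MoE layers.
    """
    total = shared + num_experts * per_expert
    active = shared + top_k * per_expert
    return total, active


# Hypothetical sizes for illustration only.
total, active = moe_param_counts(shared=2_000_000_000, per_expert=1_000_000_000,
                                 num_experts=8, top_k=2)
print(f"total={total:,} active={active:,} ratio={active / total:.2f}")
# prints: total=10,000,000,000 active=4,000,000,000 ratio=0.40
```

Adding experts raises `total` (capacity) while leaving `active` (per-token cost) unchanged, which is why sparse MoE is attractive for scaling.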

Future Directions

The introduction of Uni-MoE opens up several avenues for future research:

- Scaling Up: Exploring the addition of more experts and further optimizing the routing mechanisms could push the performance boundaries even higher.

- Extended Modalities: Incorporating more varied data types, including potentially more complex auditory and visual data, could further broaden the scope of Uni-MoE.

- Real-world Applications: With its efficient training and inference, Uni-MoE could prove valuable in real-world applications such as voice-activated assistants and automated video analysis.

In essence, Uni-MoE represents a commendable step forward in the field of multimodal LLMs. By addressing the intrinsic challenges of scaling and efficiency, it paves the way for future innovations in combining diverse data into cohesive and powerful AI models. The code is publicly available for anyone who wants to explore Uni-MoE's architecture in more detail.