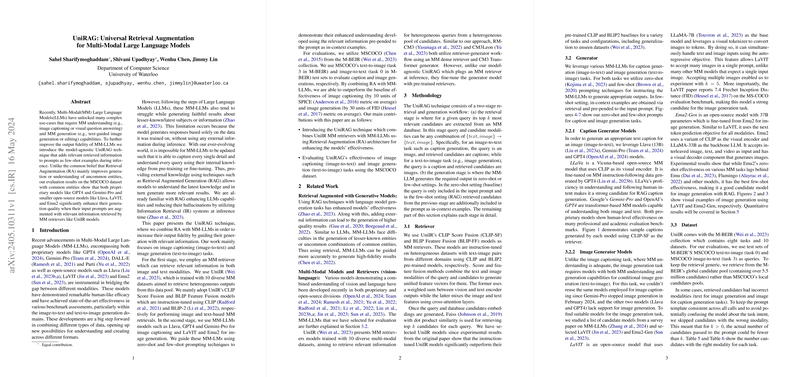

The paper proposes a model-agnostic retrieval augmentation architecture for multi-modal LLMs (MM-LLMs). The approach, termed UniRAG, integrates a two-stage workflow where an external multi-modal retriever first identifies contextually relevant candidates and then appends these as in-context examples to the prompt provided to the generator. This modular design can be seamlessly applied to different downstream tasks, including image captioning (image-to-text) and text-to-image generation.

The methodology is structured around two primary components:

- Retriever Stage:

The system employs UniIR models configured with CLIP Score Fusion (CLIP-SF) and BLIP Feature Fusion (BLIP-FF), which are instruction-tuned on diverse multi-modal datasets. Specifically, these retrievers combine text and image representations either by a weighted sum of encoder outputs (CLIP-SF) or by utilizing cross-attention layers to fuse features (BLIP-FF). For a query in one modality, the retrievers extract the top candidates from a global multi-modal database using Faiss for efficient dot product similarity computation. In image captioning, for example, an input image retrieves candidate captions; for image generation, an input text query retrieves candidate images.

- Generator Stage:

  - For caption generation, models such as LLaVA (13B), GPT-4, and Gemini-Pro are employed.

  - For text-to-image generation, LaVIT (LLaMA-7B backbone) and Emu2-Gen (LLaMA-33B backbone) are used.
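The two stages can be illustrated with a minimal sketch for the captioning direction. It is not the paper's implementation: the function names, the fusion weights, the toy three-dimensional embeddings, and the prompt wording are all assumptions made for illustration, and the brute-force search stands in for the Faiss index mentioned above.

```python
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot-product similarity between two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def fuse(image_emb: Sequence[float], text_emb: Sequence[float],
         w_image: float = 0.5, w_text: float = 0.5) -> List[float]:
    """Weighted sum of two encoder outputs (the weights are assumed values)."""
    return [w_image * i + w_text * t for i, t in zip(image_emb, text_emb)]


def retrieve_top_k(query: Sequence[float],
                   candidates: List[Tuple[str, Sequence[float]]],
                   k: int = 1) -> List[Tuple[str, float]]:
    """Brute-force top-k by dot product; a stand-in for an index such as Faiss."""
    scored = [(payload, dot(query, emb)) for payload, emb in candidates]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


def build_caption_prompt(retrieved_captions: List[str]) -> str:
    """Hypothetical template that lists retrieved captions as in-context examples."""
    lines = ["Here are captions of similar images:"]
    lines += [f"- {caption}" for caption in retrieved_captions]
    lines.append("Write a caption for the given image.")
    return "\n".join(lines)


# Toy candidate pool: (caption, made-up embedding).
pool = [
    ("A dog runs on the beach.", [0.9, 0.1, 0.0]),
    ("A plate of pasta on a table.", [0.0, 0.2, 0.9]),
    ("Two dogs play in the sand.", [0.8, 0.3, 0.1]),
]

# Made-up embedding of the query image.
query_embedding = [1.0, 0.0, 0.0]

# Stage 1: retrieve candidate captions. Stage 2: put them into the prompt.
top = retrieve_top_k(query_embedding, pool, k=2)
prompt = build_caption_prompt([caption for caption, _ in top])
print(prompt)
```

For text-to-image generation the roles swap: the query is a text embedding and the retrieved payloads are images supplied to the generator as in-context examples.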

The experimental results systematically compare the baseline performance (zero-shot) with retrieval-augmented (few-shot) performance. For instance, adding just one retrieved caption to the prompt yields an improvement of approximately 10 SPICE units on the MSCOCO image captioning task relative to the zero-shot baseline. In the text-to-image generation task, augmentation leads to a decrease of roughly 30 FID units (lower FID is better).

Several insights are reported regarding in-context example selection:

- Sensitivity to the Number of Examples:

While a single retrieved example consistently boosts generation quality, increasing beyond one can lead to performance degradation in certain configurations, particularly for smaller models. This suggests that the relevance of retrieved candidates is crucial; introducing too many less-relevant examples can confuse the generative process.

- Retriever Model Comparison:

Although the baseline performance of the CLIP-SF retriever is generally superior to that of BLIP-FF, the effectiveness gap narrows when both are applied within the retrieval augmentation framework. This observation implies that even retrievers with modest baseline performance can contribute positively when their outputs are integrated as in-context examples.

- Model-Specific Findings:

The experiments further reveal that while models like LLaVA perform relatively well in zero-shot settings, the proprietary models GPT-4 and Gemini-Pro are able to leverage additional retrieved examples and continue to improve output fidelity as more are added. In text-to-image generation, the results also show that Emu2-Gen, with its larger LLaMA-33B backbone, outperforms the smaller LaVIT model. Both benefit from a single retrieved example, although excessive augmentation can again be detrimental.

Performance metrics across tasks include several n-gram overlap measures (BLEU-1 to BLEU-4, CIDEr, ROUGE) and semantic alignment metrics (SPICE for caption generation; FID, CLIP Score, and Inception Score for image generation). For example, quantitative evaluation on the MSCOCO dataset reveals that the retrieval-augmented prompt setup significantly improves the quality of outputs over the zero-shot baseline across these metrics. Notably, the paper emphasizes that retrieval augmentation not only refines the handling of uncommon entities but also improves the generation quality for common entities, which challenges the conventional expectation that retrieval techniques mainly aid in processing rare content.
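To make "n-gram overlap" concrete, the sketch below computes clipped n-gram precision, the building block of BLEU. It is a simplified illustration rather than the evaluation code used in the paper: full BLEU additionally combines several n-gram orders and applies a brevity penalty, and the whitespace tokenization and example sentences here are assumptions.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: str, reference: str, n: int) -> float:
    """Fraction of candidate n-grams that also occur in the reference.

    Each n-gram's count is clipped to its count in the reference, so
    repeating a matching word does not inflate the score.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


generated = "a dog runs on the beach"
reference = "a dog is running on the beach"

p1 = clipped_precision(generated, reference, 1)  # 5 of 6 unigrams match
p2 = clipped_precision(generated, reference, 2)  # 3 of 5 bigrams match
print(p1, p2)
```

Metrics of this kind reward surface overlap with the reference caption, which is why they are reported alongside SPICE rather than on their own.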

The paper concludes with discussions on future work, including:

- Extending the evaluation to out-of-domain retrieval settings to assess the robustness of the approach when the candidate pool is not from the same distribution as the target task.

- Investigating alternative prompt templates and relevance-based candidate selection mechanisms to optimize the number and quality of in-context examples dynamically.
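One simple form that relevance-based candidate selection could take is a score threshold combined with a cap on the number of examples. The sketch below is purely hypothetical: the paper lists this as future work and does not specify a mechanism, so `select_examples`, `min_score`, `max_examples`, and the scores are illustrative assumptions.

```python
from typing import List, Tuple


def select_examples(scored: List[Tuple[str, float]],
                    min_score: float,
                    max_examples: int) -> List[str]:
    """Keep candidates scoring at least min_score, best first, capped at max_examples."""
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    kept = [text for text, score in ranked if score >= min_score]
    return kept[:max_examples]


# Made-up retrieval scores for three candidate captions.
candidates = [("caption A", 0.91), ("caption B", 0.42), ("caption C", 0.88)]

# Only the two candidates above the threshold become in-context examples.
chosen = select_examples(candidates, min_score=0.8, max_examples=5)
print(chosen)
```

With a rule like this the number of in-context examples varies per query, and a query with no sufficiently relevant candidate falls back to the zero-shot prompt.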

Overall, the work presents a comprehensive evaluation of combining retrieval augmentation with MM-LLMs, yielding consistent improvements in multi-modal generation tasks and providing valuable insights into the interaction between retrieval quality and generative performance.