Exploring the AgentClinic Benchmark: Advancing AI in Simulated Clinical Environments

Introduction to AgentClinic

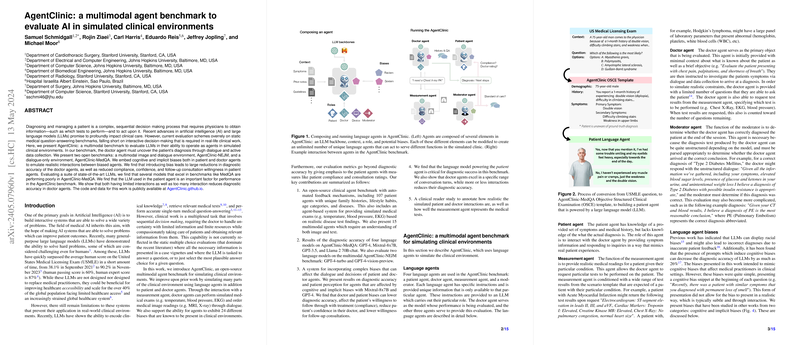

AgentClinic is an open-source multimodal agent benchmark that uses language agents to simulate real-world clinical environments. It combines multimodal interactions, deliberately injected biases, and several interacting agent roles to create a challenging environment for evaluating large language models (LLMs) in a medical context.

Insights into Language Agents

The Roles of Different Agents

AgentClinic utilizes four types of language agents to drive its simulated medical platform:

- Patient Agent: This agent mimics a real patient by presenting symptoms and medical history without knowing the actual diagnosis.

- Doctor Agent: Acts as the primary evaluation target, diagnosing the patient based on the provided symptoms, history, and results from diagnostic tests requested from the Measurement Agent.

- Measurement Agent: Delivers test results like blood pressure and EKG readings upon the Doctor Agent’s request, simulating real-life medical test scenarios.

- Moderator Agent: This agent judges whether the Doctor Agent's diagnosis is correct, which requires interpreting the Doctor Agent's often unstructured, free-text responses.

This diversity of agent roles lets AgentClinic follow the flow of real medical consultations more closely than previous benchmarks, which mainly relied on static question-and-answer formats.
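The consultation flow described above can be pictured as a simple turn loop in which the Doctor Agent either questions the patient, requests a test, or commits to a diagnosis. This is a minimal sketch, not AgentClinic's actual implementation: the trigger phrases `REQUEST TEST:` and `DIAGNOSIS READY:`, the `Case` record, and every function name are hypothetical, and each agent is a deterministic stub standing in for an LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical trigger phrases; the benchmark's real prompts may word these differently.
TEST_PREFIX = "REQUEST TEST:"
DIAGNOSIS_PREFIX = "DIAGNOSIS READY:"

Transcript = List[Tuple[str, str]]  # (speaker, utterance) pairs


@dataclass
class Case:
    symptoms: str                 # what the Patient Agent can describe
    test_results: Dict[str, str]  # what the Measurement Agent can report
    diagnosis: str                # ground truth, used only by the Moderator Agent


def patient_reply(case: Case, question: str) -> str:
    # Stub Patient Agent: describes symptoms and never sees the diagnosis.
    return case.symptoms


def measurement_reply(case: Case, request: str) -> str:
    # Stub Measurement Agent: returns a stored result, or "normal" for unlisted tests.
    test = request[len(TEST_PREFIX):].strip()
    return case.test_results.get(test, f"{test}: within normal limits")


def moderator_judge(case: Case, answer: str) -> bool:
    # Stub Moderator Agent: case-insensitive exact match. A real moderator would use
    # an LLM to decide whether a free-text answer names the same condition.
    return answer.strip().lower() == case.diagnosis.strip().lower()


def run_consultation(case: Case, doctor: Callable[[Transcript], str], max_turns: int = 20) -> bool:
    """Return True if the doctor reaches a correct diagnosis within max_turns."""
    transcript: Transcript = []
    for _ in range(max_turns):
        utterance = doctor(transcript)
        transcript.append(("doctor", utterance))
        if utterance.startswith(DIAGNOSIS_PREFIX):
            return moderator_judge(case, utterance[len(DIAGNOSIS_PREFIX):])
        if utterance.startswith(TEST_PREFIX):
            transcript.append(("measurement", measurement_reply(case, utterance)))
        else:
            transcript.append(("patient", patient_reply(case, utterance)))
    return False  # turn budget exhausted without a diagnosis


case = Case(
    symptoms="Crushing chest pain spreading to my left arm.",
    test_results={"ECG": "ST elevation in leads II, III and aVF"},
    diagnosis="Myocardial infarction",
)


def scripted_doctor(transcript: Transcript) -> str:
    # Stand-in for a Doctor Agent LLM: follows a fixed three-step script.
    script = ["What brings you in today?", "REQUEST TEST: ECG", "DIAGNOSIS READY: myocardial infarction"]
    turn = sum(1 for speaker, _ in transcript if speaker == "doctor")
    return script[min(turn, len(script) - 1)]


print(run_consultation(case, scripted_doctor))               # True
print(run_consultation(case, scripted_doctor, max_turns=2))  # False: budget runs out first
```

Separating the Moderator from the Doctor keeps grading independent of the model under test; swapping the stubs for LLM calls would leave the loop itself unchanged.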

Implementing and Assessing Biases

A critical part of the AgentClinic benchmark is its focus on biases known to affect medical diagnosis, such as cognitive and implicit biases. These biases are intentionally introduced into both the Doctor and Patient Agents to study their influence on diagnostic accuracy and patient trust. Key findings include:

- Influence on Diagnostic Accuracy: The effect of bias on accuracy varied, with cognitive biases causing a noticeable reduction, especially when applied to the Doctor Agent.

- Patient Perceptions: Even where biases had only a small effect on diagnostic accuracy, biased interactions markedly changed the Patient Agent's compliance, its confidence in the doctor, and its willingness to return for follow-up consultations.
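One way to picture how a bias is "introduced" is as an extra instruction appended to an agent's system prompt, with accuracy then compared against an unbiased run. The sketch below assumes that mechanism; the prompt wording, the bias names, and the `build_system_prompt` and `bias_effect` helpers are hypothetical illustrations, not the benchmark's actual prompts or code.

```python
from typing import List, Optional

# Base system prompts (hypothetical wording).
BASE_PROMPTS = {
    "doctor": "You are a doctor. Interview the patient, request tests, then state a diagnosis.",
    "patient": "You are a patient. Describe your symptoms; you do not know your diagnosis.",
}

# Hypothetical bias snippets keyed by (role, bias name); illustrative only.
BIAS_PROMPTS = {
    ("doctor", "recency"): "You recently saw several patients with similar symptoms who all had a viral infection.",
    ("doctor", "confirmation"): "You trust your first impression and look mainly for evidence that supports it.",
    ("patient", "recency"): "A friend recently had similar symptoms and was diagnosed with cancer, so you fear the same.",
}


def build_system_prompt(role: str, bias: Optional[str] = None) -> str:
    """Append an optional bias instruction to the role's base prompt."""
    prompt = BASE_PROMPTS[role]
    if bias is not None:
        # Raises KeyError if this bias is not defined for this role.
        prompt += " " + BIAS_PROMPTS[(role, bias)]
    return prompt


def accuracy(verdicts: List[bool]) -> float:
    # Fraction of cases the Moderator Agent marked as correctly diagnosed.
    return sum(verdicts) / len(verdicts)


def bias_effect(baseline: List[bool], biased: List[bool]) -> float:
    """Change in diagnostic accuracy, in percentage points, after adding a bias."""
    return 100 * (accuracy(biased) - accuracy(baseline))


print(build_system_prompt("doctor", "recency"))
print(bias_effect([True, True, False, True], [True, False, False, True]))  # -25.0
```

Keeping the bias as a separate, keyed snippet makes it easy to run the same cases with and without each bias, which is the comparison the findings above rest on.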

Testing and Results

Diagnostic Accuracy Across Models

AgentClinic was used to evaluate several LLMs, including GPT-4, Mixtral-8x7B, and Llama 2 70B-chat. Diagnostic accuracy varied considerably across these models, indicating that their capabilities differ substantially in a complex simulated clinical environment.
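Comparing models comes down to tallying the Moderator Agent's verdicts per model. Because accuracy measured on a finite set of cases is noisy, this sketch also attaches a Wilson score confidence interval; that interval is an illustrative addition, not something the benchmark description specifies. The model names and verdicts are placeholders, not reported results.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_model_accuracy(verdicts: Iterable[Tuple[str, bool]]) -> Dict[str, Tuple[float, int]]:
    """Map each model name to (accuracy, number of cases) from Moderator verdicts."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for model, is_correct in verdicts:
        total[model] += 1
        correct[model] += int(is_correct)
    return {m: (correct[m] / total[m], total[m]) for m in total}


def wilson_interval(p: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion p observed over n cases."""
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


# Placeholder verdicts for two unnamed models; these are not benchmark results.
verdicts = [("model-A", True)] * 5 + [("model-A", False)] * 5 + [("model-B", True)] * 2 + [("model-B", False)] * 8
for model, (acc, n) in per_model_accuracy(verdicts).items():
    lo, hi = wilson_interval(acc, n)
    print(f"{model}: {acc:.0%} (95% CI {lo:.0%} to {hi:.0%}, n={n})")
```

With small case counts the intervals can be wide enough to overlap, which is worth checking before reading a gap between two models as a real difference.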

Dynamic Interactions and Their Impact

The benchmark goes beyond static evaluations to explore the effect of dynamic factors like the number of allowable interaction turns and the choice of patient LLM on diagnostic outcomes. Findings revealed that:

- Interaction Turns: Both too few and too many interaction turns reduced diagnostic accuracy, suggesting that sound clinical decision-making depends on an appropriate amount of information exchange rather than simply more of it.

- Patient LLM: Using the same model for both Doctor and Patient Agents generally resulted in higher diagnostic accuracy, suggesting model-specific communication advantages.
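Both findings come from sweeping experimental settings and re-measuring accuracy. A minimal sketch of such a sweep might look like the following, assuming a hypothetical `run_case(case, max_turns=..., patient_model=...)` function that plays one consultation and returns the Moderator Agent's verdict. The toy stand-in exists only to make the example runnable and does not reproduce the benchmark's behavior.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical signature: run_case(case, max_turns=..., patient_model=...) -> bool
RunCase = Callable[..., bool]


def sweep(run_case: RunCase, cases: Sequence[dict], turn_budgets: Sequence[int],
          patient_models: Sequence[str]) -> Dict[Tuple[int, str], float]:
    """Diagnostic accuracy for every (turn budget, patient model) combination."""
    results: Dict[Tuple[int, str], float] = {}
    for max_turns, patient_model in product(turn_budgets, patient_models):
        verdicts: List[bool] = [
            run_case(case, max_turns=max_turns, patient_model=patient_model) for case in cases
        ]
        results[(max_turns, patient_model)] = sum(verdicts) / len(verdicts)
    return results


def toy_run_case(case: dict, max_turns: int, patient_model: str) -> bool:
    # Toy stand-in: a case is solved only if the budget covers the turns it needs.
    # It ignores the patient model and does not model any harm from very long dialogues.
    return max_turns >= case["turns_needed"]


cases = [{"turns_needed": 3}, {"turns_needed": 8}, {"turns_needed": 15}]
for (turns, patient), acc in sweep(toy_run_case, cases, [5, 10, 20], ["patient-llm-X"]).items():
    print(turns, patient, round(acc, 2))
```

Holding the case set fixed across every cell of the grid means any change in accuracy can be attributed to the turn budget or the patient model rather than to different cases.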

The Multimodal Challenge

AgentClinic adds multimodal capabilities by incorporating diagnostic images into the simulations. Two multimodal LLMs were tested on their ability to integrate visual data with the textual patient dialogue when making a diagnosis. The experiments shed light on how these models use complex multimodal information, and they also highlighted the difficulties these models face in clinical scenarios that require interpreting combined data.
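Integrating an image with the dialogue means each request to the doctor model carries both text and image data. The sketch below assumes a simple on-request design in which the image is attached only after the Doctor Agent asks for it; the `REQUEST IMAGES` phrase, the part types, and `build_doctor_input` are hypothetical, and a real multimodal API would define its own message format.

```python
import base64
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union

IMAGE_REQUEST = "REQUEST IMAGES"  # hypothetical trigger phrase


@dataclass
class TextPart:
    text: str


@dataclass
class ImagePart:
    mime_type: str
    data_b64: str  # image bytes, base64-encoded for transport to a multimodal model


Part = Union[TextPart, ImagePart]


def build_doctor_input(transcript: List[Tuple[str, str]], image_bytes: Optional[bytes] = None,
                       mime_type: str = "image/png") -> List[Part]:
    """Combine the dialogue so far with the case image, once the doctor has asked for it."""
    parts: List[Part] = [TextPart("\n".join(f"{who}: {text}" for who, text in transcript))]
    requested = any(who == "doctor" and IMAGE_REQUEST in text for who, text in transcript)
    if image_bytes is not None and requested:
        parts.append(ImagePart(mime_type, base64.b64encode(image_bytes).decode("ascii")))
    return parts


transcript = [("doctor", "Where is the rash?"), ("patient", "On my left forearm.")]
print(len(build_doctor_input(transcript, b"<png bytes>")))  # 1: image not yet requested
transcript.append(("doctor", "REQUEST IMAGES"))
print(len(build_doctor_input(transcript, b"<png bytes>")))  # 2: text plus image
```

Gating the image on an explicit request also lets an evaluation check whether the doctor model thinks to ask for imaging at all, separately from whether it reads the image correctly.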

Implications and Future Directions

The development of AgentClinic represents a substantial step forward in creating more realistic and dynamic AI testing environments for medical applications. The introduction of realistic biases, multimodal interactions, and varied agent dynamics promotes a deeper understanding of AI capabilities and limitations in healthcare.

Looking ahead, the framework could benefit from including additional clinical roles and a broader range of medical conditions. Making interactions more realistic and complex in future iterations could help close the gap between AI capabilities and practical medical needs.

By continuing to evolve and expand benchmarks like AgentClinic, the field can help ensure that AI tools are rigorously tested and refined, supporting their role as valuable aids in clinical settings rather than as replacements for human expertise.