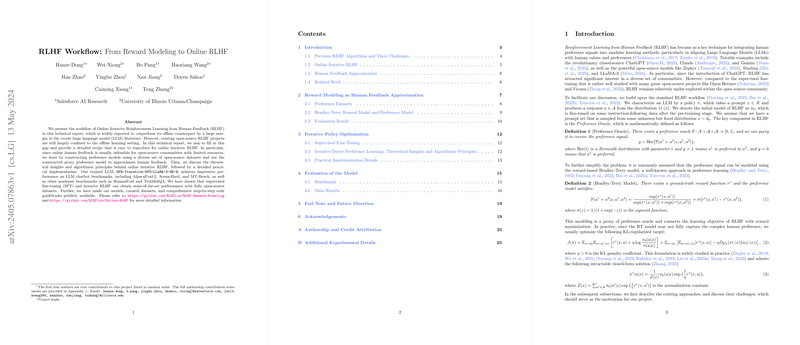

Exploring Online Iterative Reinforcement Learning from Human Feedback (RLHF) for LLMs

Introduction to Online Iterative RLHF

Reinforcement Learning from Human Feedback (RLHF) has drawn significant attention as a way to integrate human preferences into machine learning, particularly for improving LLMs. Existing work has predominantly focused on offline RLHF. This work instead examines online iterative RLHF, aiming to close the performance gap reported between offline and online approaches. Collecting human feedback is hard under resource constraints, especially in an online setting. The work approximates it by building preference models from a variety of open-source datasets, and these models serve as proxies for human feedback during the iterative learning process.

Understanding the Process and Setup

The core of the online iterative RLHF process involves these key components:

- Initial Setup:

  - Training starts from a model fine-tuned on known instruction-following datasets, which then receives prompts sampled from a fixed distribution.

  - The model's responses to these prompts are generated by a policy that aims to maximize a reward function defined by the preference oracle.

- Preference Oracle and Reward Function:

  - A hypothetical oracle decides which of two responses is preferred, and these comparisons set the direction of model training.

  - The reward function is rooted in the Bradley-Terry model, a simplifying assumption under which the probability that one response is preferred over another is a logistic function of the difference between their rewards.

- Practical Implementation:

  - Through iterative updates and feedback simulated in real time by proxy preference models, the LLM adapts its responses to align better with the outcomes those proxies favor.
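The Bradley-Terry assumption described in the list above can be made concrete in a few lines of Python. This is a minimal sketch rather than the work's own implementation: the function names `bt_preference_prob` and `bt_pair_loss` are hypothetical, and the rewards are plain floats standing in for the scores a trained reward model would produce.

```python
import math


def bt_preference_prob(reward_a: float, reward_b: float) -> float:
    """Probability that response a is preferred over b: sigmoid(r_a - r_b)."""
    diff = reward_a - reward_b
    # Branch on the sign so math.exp never receives a large positive argument.
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    z = math.exp(diff)
    return z / (1.0 + z)


def bt_pair_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood of one labeled pair under Bradley-Terry.

    Equal to -log(sigmoid(r_chosen - r_rejected)), written as a stable softplus.
    """
    diff = reward_chosen - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))
```

Two responses with equal rewards are preferred with probability 0.5, and the loss shrinks as the chosen response's reward pulls ahead of the rejected one. Minimizing this loss over labeled pairs is one common way to fit a reward model to preference data.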

Algorithmic Insights and Implementation

The workflow moves from theory to practice through three elements:

- Preference Model Training: Before any RLHF takes place, preference models are trained on diverse datasets. A robust preference model helps the policy pick up on nuanced feedback and stay close to human judgments.

- Policy Optimization: The response policy is updated in cycles, each using both newly generated and historical data, so that every iteration brings the model closer to human preferences.

- Online Versus Offline: Online data collection lets the model be updated continuously on responses drawn from its current policy, whereas offline data is static. This difference can lead to more adaptive models that generalize better.
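The cycle described above (generate responses with the current policy, label them with a proxy preference model, then update on new plus historical data) can be sketched as a toy loop. Everything here is an illustrative assumption: the three candidate responses, their proxy rewards, and all function names are hypothetical, and the update rule is a simplified pairwise logistic step with no reference model or regularization, used only as a stand-in for whatever policy optimizer the work actually employs.

```python
import math
import random

# Hypothetical candidate responses to one prompt, with made-up proxy rewards.
PROXY_REWARD = {"terse": 0.0, "helpful": 2.0, "rambling": -1.0}
RESPONSES = list(PROXY_REWARD)


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_pair(logits, rng):
    """Draw two distinct response indices from the current policy."""
    probs = softmax(logits)
    indices = list(range(len(logits)))
    first = rng.choices(indices, weights=probs)[0]
    rest = [i for i in indices if i != first]
    second = rng.choices(rest, weights=[probs[i] for i in rest])[0]
    return first, second


def label_pair(i, j):
    """Proxy preference model: return (winner, loser) by higher proxy reward."""
    if PROXY_REWARD[RESPONSES[i]] >= PROXY_REWARD[RESPONSES[j]]:
        return i, j
    return j, i


def update_policy(logits, dataset, lr=0.5, steps=10):
    """Gradient ascent on the mean log-sigmoid margin over all stored pairs."""
    logits = list(logits)
    for _ in range(steps):
        grad = [0.0] * len(logits)
        for winner, loser in dataset:
            # d/d(margin) of log sigmoid(margin) = sigmoid(-margin)
            g = 1.0 / (1.0 + math.exp(logits[winner] - logits[loser]))
            grad[winner] += g / len(dataset)
            grad[loser] -= g / len(dataset)
        logits = [x + lr * d for x, d in zip(logits, grad)]
    return logits


def run_online_iterative(iterations=5, pairs_per_iter=8, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(RESPONSES)  # initial policy: uniform over responses
    history = []                     # all preference pairs collected so far
    for _ in range(iterations):
        # Online step: new pairs come from the *current* policy.
        new_pairs = [label_pair(*sample_pair(logits, rng))
                     for _ in range(pairs_per_iter)]
        history.extend(new_pairs)
        # Update on newly generated and historical data together.
        logits = update_policy(logits, history)
    return dict(zip(RESPONSES, softmax(logits))), history
```

Calling `run_online_iterative()` returns the final response probabilities and the accumulated preference pairs. The key contrast with an offline setup is inside the loop: an offline variant would build `history` once from a fixed dataset and never resample from the updated policy.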

Results and Implications

The resulting model performed strongly on both chatbot evaluations and academic benchmarks. Key takeaways include:

- Performance Metrics: The model is reported to reach state-of-the-art results on chatbot benchmarks such as AlpacaEval-2 and MT-Bench, showcasing its practical effectiveness.

- Extended Accessibility: By making models and training guides publicly available, the work invites further exploration and adaptation by the broader community, fostering open-source collaboration.

- Future Potential: Ongoing developments could see enhancements in proxy preference modeling, more efficient data utilization, and broader applications across different LLM tasks.

Conclusion and Future Directions

This look at online iterative RLHF opens several avenues for both theoretical study and practical application. Future work includes addressing reward model biases, exploring different model architectures, and expanding the training datasets to cover a broader range of human preferences. As open-source tools and methodologies continue to improve, the field can expect more refined, human-aligned LLMs.