Making LLMs Faster and Lighter with Sparsity

Introduction to Sparse LLMs

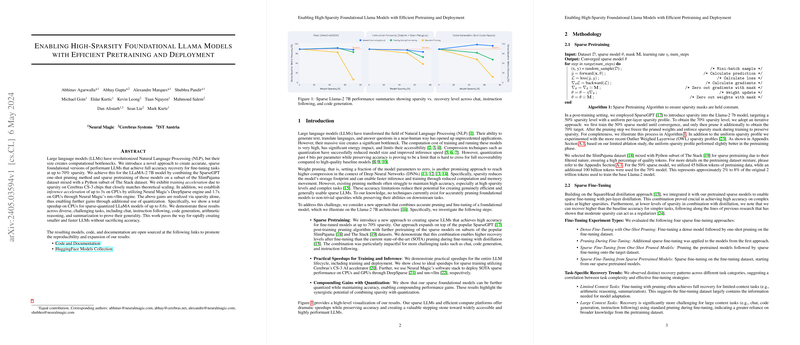

Large language models (LLMs) have significantly advanced the field of NLP, enabling applications like chatbots, translation, and code generation. However, their massive size brings considerable challenges, including high computational costs and energy consumption. The authors of the paper under review tackle this by developing sparse versions of LLMs that retain most of the dense model's accuracy while being cheaper to run. Specifically, they demonstrate a method for making the LLaMA-2 7B model up to 70% sparse, achieving substantial speedups with only a small loss in accuracy.

Methodology

Sparse Pretraining

One of the key steps introduced in this paper is sparse pretraining. Let's break this down:

- Sparse Pretraining Process: The process begins with the SparseGPT algorithm, which prunes the trained LLaMA-2 7B model in one shot to 50% sparsity. The pruned model is then pretrained further on 45 billion tokens from the SlimPajama and The Stack datasets. To reach higher sparsity (70%), pruning and training are repeated iteratively, using an additional 100 billion tokens.

- Why This Matters: Traditional pruning methods stop at the sparsity level where accuracy can still be retained without further training. Sparse pretraining pushes past that limit: after pruning, the remaining weights are refined through additional training, so the model stays robust even with a large share of its parameters set to zero.
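The prune-then-train loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the authors' implementation: simple magnitude pruning stands in for SparseGPT, a squared-error loss on a 20-element weight vector stands in for language-model pretraining, and the names `magnitude_mask`, `train_sparse`, and `sparsity_of` are hypothetical.

```python
import random


def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that drops the smallest-magnitude weights.

    Assumption: magnitude pruning is used here only as a simple stand-in
    for SparseGPT, which selects weights differently.
    """
    n_prune = round(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1.0] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0.0
    return mask


def train_sparse(weights, target, mask, steps=50, lr=0.1):
    """Run masked gradient descent on a toy squared-error loss."""
    weights = [w * m for w, m in zip(weights, mask)]
    for _ in range(steps):
        grads = [2.0 * (w - t) for w, t in zip(weights, target)]
        # Re-apply the mask after each update so pruned weights stay at zero.
        weights = [(w - lr * g) * m for w, g, m in zip(weights, grads, mask)]
    return weights


def sparsity_of(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


random.seed(0)
target = [random.gauss(0.0, 1.0) for _ in range(20)]      # toy "ideal" weights
weights = [t + random.gauss(0.0, 0.5) for t in target]    # toy "dense" model

# Stage 1: one-shot prune to 50%, then train with the mask held fixed.
mask_50 = magnitude_mask(weights, 0.5)
weights_50 = train_sparse(weights, target, mask_50)

# Stage 2: prune the already-sparse model to 70% and train again.
# Weights pruned in stage 1 are zero, so they are selected again first.
mask_70 = magnitude_mask(weights_50, 0.7)
weights_70 = train_sparse(weights_50, target, mask_70)

print(sparsity_of(weights_50), sparsity_of(weights_70))
```

The point of the sketch is the ordering: the mask is computed first and then enforced on every update, so the surviving weights can adapt to the missing ones before the next, more aggressive pruning step.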

Practical Speedups for Training and Inference

The research demonstrates how these sparse models lead to significant speed improvements:

- Training Speedups: On Cerebras CS-3 systems, the sparse models trained at speeds close to the theoretical ideal for their sparsity level.

- Inference Speedups: On CPUs, Neural Magic's DeepSparse engine achieved a 3x speedup, and on GPUs, the nm-vLLM engine delivered a 1.7x speedup.

What's more, combining sparsity with quantization achieved even more dramatic performance gains, particularly on CPUs, where total speedup reached up to 8.6x.
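To put "theoretical scaling" in concrete terms, here is a back-of-the-envelope sketch. It assumes compute cost scales linearly with the number of nonzero weights, which is a simplification; the function name `ideal_speedup` is hypothetical and does not come from the paper.

```python
def ideal_speedup(sparsity):
    """Upper-bound speedup if cost is proportional to nonzero weights.

    Assumption: skipping a zero weight saves its full share of compute,
    with no overhead for indexing or memory access.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    return 1.0 / (1.0 - sparsity)


for s in (0.5, 0.7):
    print(f"{s:.0%} sparse -> {ideal_speedup(s):.2f}x ideal speedup")
```

Under this assumption, 50% sparsity allows at most about 2x and 70% sparsity at most about 3.3x from sparsity alone. Gains beyond that bound, such as the 8.6x figure, rely on the additional savings from quantization.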

Sparse Fine-Tuning

The paper explores several fine-tuning methods to maintain high accuracy across different task complexities:

- Dense Fine-Tuning with One-Shot Pruning

- Pruning During Fine-Tuning

- Sparse Fine-Tuning from One-Shot Pruned Models

- Sparse Fine-Tuning from Sparse Pretrained Models

Experimental Validation

The authors conducted extensive experiments to validate their approach. Here are some highlights:

Sparse Pretraining Results

- 50% Sparsity: The sparse model recovered 96.1% of the dense baseline's score on the Llama evaluation metrics.

- 70% Sparsity: The sparse model recovered 91.8%, showing that performance holds up well even at high sparsity.
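As a reading aid, the recovery figures above can be understood as the sparse model's score expressed as a percentage of the dense baseline's score. The sketch below assumes that definition and averages it over tasks; the task scores are made-up placeholders, not results from the paper, and `recovery` and `mean_recovery` are hypothetical helper names.

```python
def recovery(sparse_score, dense_score):
    """Sparse score as a percentage of the dense baseline score."""
    if dense_score <= 0:
        raise ValueError("dense_score must be positive")
    return 100.0 * sparse_score / dense_score


def mean_recovery(sparse_scores, dense_scores):
    """Average per-task recovery over tasks present in both dicts."""
    tasks = sorted(set(sparse_scores) & set(dense_scores))
    if not tasks:
        raise ValueError("no shared tasks")
    return sum(recovery(sparse_scores[t], dense_scores[t]) for t in tasks) / len(tasks)


# Placeholder accuracies for two hypothetical tasks (illustration only).
dense = {"task_a": 50.0, "task_b": 80.0}
sparse = {"task_a": 48.0, "task_b": 76.0}

print(f"{mean_recovery(sparse, dense):.1f}% recovery")
```

A recovery of 100% would mean the sparse model matches the dense one on average, so 96.1% and 91.8% correspond to average shortfalls of roughly 4 and 8 percentage points of the baseline score.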

Limited Context Tasks

Sparse models performed well on arithmetic reasoning and summarization, indicating that tasks with limited context can be handled effectively even at high sparsity levels.

Large Context Tasks

For more complex tasks like chat, instruction following, and code generation, sparse fine-tuning from sparse pretrained models showed the best recovery, even at 70% sparsity. This suggests that the robustness of these sparse models extends to tasks requiring broader contextual understanding.

Sparse Quantized Inference Performance

By integrating quantization with sparsity, the authors achieved negligible accuracy degradation while significantly improving inference performance:

- Prefill performance increased by 3.86x.

- Decode performance increased by 8.6x.

This combination of sparsity and quantization leads to significant reductions in time-to-first-token and time-per-output-token, especially in CPU-based deployments.
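To see how the two speedups combine for a whole request, consider a simple latency model: the first token costs the time-to-first-token (TTFT), and each later token costs the time-per-output-token (TPOT). The sketch below assumes the prefill speedup applies to TTFT and the decode speedup to TPOT. The dense baseline latencies and output length are hypothetical values chosen for illustration, as is the function name `request_latency`.

```python
def request_latency(ttft_s, tpot_s, n_output_tokens):
    """Total latency: the first token costs TTFT, each later token costs TPOT."""
    if n_output_tokens < 1:
        raise ValueError("need at least one output token")
    return ttft_s + tpot_s * (n_output_tokens - 1)


# Speedups quoted in the text above.
PREFILL_SPEEDUP = 3.86
DECODE_SPEEDUP = 8.6

# Hypothetical dense baseline (not from the paper).
dense_ttft_s = 2.0
dense_tpot_s = 0.2
n_tokens = 128

dense_total = request_latency(dense_ttft_s, dense_tpot_s, n_tokens)
sparse_total = request_latency(
    dense_ttft_s / PREFILL_SPEEDUP,  # assumption: prefill speedup scales TTFT
    dense_tpot_s / DECODE_SPEEDUP,   # assumption: decode speedup scales TPOT
    n_tokens,
)
end_to_end_speedup = dense_total / sparse_total

print(f"dense: {dense_total:.2f}s, sparse-quantized: {sparse_total:.2f}s, "
      f"speedup: {end_to_end_speedup:.2f}x")
```

Under this model the end-to-end speedup always lies between the prefill and decode figures, and it moves toward the decode figure as responses get longer, because decode time then dominates the request.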

Implications for AI and Future Work

This research provides a significant advancement in making LLMs more accessible and efficient. Some practical implications include:

- Reduced Computational Costs: Smaller, faster models lower the barrier to entry for deploying sophisticated NLP applications, making these technologies more accessible.

- Energy Efficiency: Reduced energy consumption aligns with global sustainability efforts in technology.

- Scalability: These methodologies can potentially be applied to larger models and adapted to emerging LLM architectures, paving the way for future breakthroughs in model efficiency.

Conclusion

The authors' approach to creating sparse, efficient LLMs marks an important step forward. By combining sparse pretraining, hardware-level speedups, and quantization, they show that the computational footprint of LLMs can be reduced dramatically with little loss in accuracy. This research opens new avenues for making advanced NLP technologies more scalable, cost-effective, and environmentally friendly.