The paper "Analyzing Narrative Processing in LLMs: Using GPT4 to test BERT" investigates how large language models (LLMs) process language, focusing on the distinct roles played by the different layers of BERT. It uses two LLMs, ChatGPT and BERT, to draw parallels with, and generate hypotheses about, the brain functions that underlie human language processing.

Objectives and Methodology

The central objective of the paper was twofold:

- To use LLMs as a model to elucidate fundamental mechanisms of language processing in artificial neural networks.

- To make informed predictions and generate hypotheses about how similar processes might occur in the human brain.

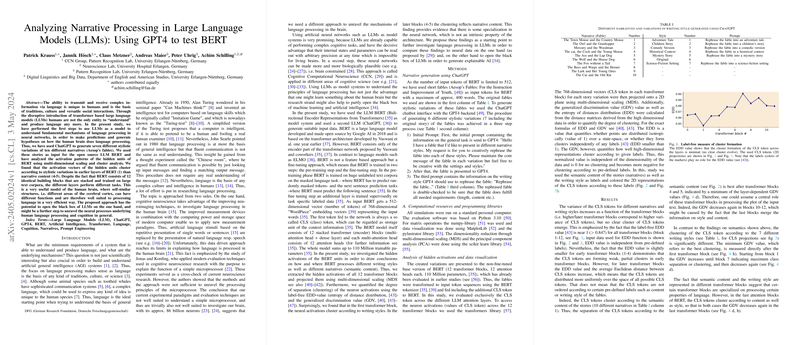

To achieve these goals, the authors used ChatGPT to generate seven stylistically different versions of each of ten narratives taken from Aesop's fables. The resulting stories were fed into BERT, an open-source LLM, and the activation patterns of its hidden units were recorded. These activation vectors were then analyzed with multidimensional scaling (MDS) and cluster analysis.
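The MDS step can be sketched in a few lines of NumPy. This is a minimal sketch, not the authors' code. It assumes each story version has already been reduced to one vector per layer (for example, by averaging token activations obtained from Hugging Face `transformers` with `output_hidden_states=True`; the pooling method is an assumption, since the summary does not specify one). The helper name `classical_mds` is hypothetical, and it implements classical (Torgerson) MDS, which may differ from the MDS variant used in the paper.

```python
import numpy as np

def classical_mds(X: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Classical (Torgerson) MDS: place each row of X in n_components
    dimensions so that pairwise Euclidean distances are preserved as
    closely as possible."""
    # Squared Euclidean distances between all pairs of rows.
    sq_norms = np.sum(X ** 2, axis=1)
    D2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    D2 = np.maximum(D2, 0.0)  # clip tiny negatives caused by round-off
    # Double-center the squared distances: B = -1/2 * J D2 J.
    n = X.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ D2 @ J
    # Leading eigenvectors of B, scaled by sqrt(eigenvalue), are the coordinates.
    eigvals, eigvecs = np.linalg.eigh(B)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.maximum(eigvals[order], 0.0))
    return eigvecs[:, order] * scale

# Toy check with made-up data: points that lie in a 2-D plane (padded with
# zeros to 5-D) are recovered up to rotation, reflection, and translation.
rng = np.random.default_rng(0)
plane = rng.normal(size=(6, 2))
X = np.hstack([plane, np.zeros((6, 3))])
coords = classical_mds(X, n_components=2)
print(coords.shape)  # (6, 2)
```

Applied to one layer's matrix of story vectors (one row per story version, for instance 70 rows if all ten fables appear in all seven styles), the 2-D coordinates can be plotted and colored by style or by fable. For the cluster-analysis step, hierarchical clustering with `scipy.cluster.hierarchy.linkage` followed by `fcluster` is one standard option.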

Key Findings

The paper reports two main findings:

- Layer-wise Functional Differentiation: The activation vectors clustered by stylistic variation in an early layer (Layer 1) and by narrative content in the middle layers (Layers 4-5). This suggests that BERT's layers specialize in different aspects of language: early layers are sensitive to style, and intermediate layers to content.

- Functional Analogies to the Human Brain: Although BERT is built from 12 architecturally identical layers, training on large text corpora leads its layers to specialize in different functions. This parallels the human cerebral cortex, where regions with broadly similar structure perform different functions. Such specialization might offer insight into the efficiency of the brain's language processing mechanisms.
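To make the "clusters by style versus clusters by content" comparison concrete, one could score a layer's vectors under both labelings. The sketch below is an illustrative assumption, not the paper's analysis: the helper `separation_ratio` (a hypothetical name) divides the mean distance between differently labeled vectors by the mean distance between identically labeled ones, and the data are random toy vectors, not BERT activations. A layer behaving like Layer 1 in the paper would score higher under style labels; one behaving like Layers 4-5 would score higher under fable labels.

```python
import numpy as np

def separation_ratio(X: np.ndarray, labels: np.ndarray) -> float:
    """Mean between-group distance divided by mean within-group distance.
    Values well above 1 mean the rows of X group together by `labels`."""
    # Pairwise Euclidean distances between all rows (story versions).
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(labels), dtype=bool)
    within = dists[same & off_diag].mean()  # same label, different row
    between = dists[~same].mean()           # different labels
    return float(between / within)

# Hypothetical toy design mirroring the study: 10 fables x 7 styles.
n_fables, n_styles, dim = 10, 7, 16
rng = np.random.default_rng(0)
fable_ids = np.repeat(np.arange(n_fables), n_styles)  # 0,0,...,1,1,...
style_ids = np.tile(np.arange(n_styles), n_fables)    # 0,1,...,6,0,1,...
fable_vecs = rng.normal(size=(n_fables, dim))
style_vecs = rng.normal(size=(n_styles, dim))

# Made-up "layers": one dominated by style, one dominated by content.
style_layer = 3.0 * style_vecs[style_ids] + 0.3 * fable_vecs[fable_ids]
content_layer = 0.3 * style_vecs[style_ids] + 3.0 * fable_vecs[fable_ids]

for name, X in [("style-dominated", style_layer), ("content-dominated", content_layer)]:
    print(name,
          "style ratio:", round(separation_ratio(X, style_ids), 2),
          "fable ratio:", round(separation_ratio(X, fable_ids), 2))
```

A standard alternative to this ad hoc ratio is the silhouette coefficient, available as `sklearn.metrics.silhouette_score(X, labels)`.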

Implications and Future Directions

The paper presents evidence that the layers of LLMs such as BERT can serve as models for understanding how the human brain processes language. Its methodology, which probes BERT's layers with stories that vary independently in style and in narrative content, offers a new way to look inside the "black box" of LLMs.

The findings could serve as a first step toward a deeper understanding of language processing in both artificial neural networks and the human brain, and the same approach could guide future research into the neural processes underlying human language cognition.