Overview of Ethical Considerations for LLMs in High-Stakes Sectors

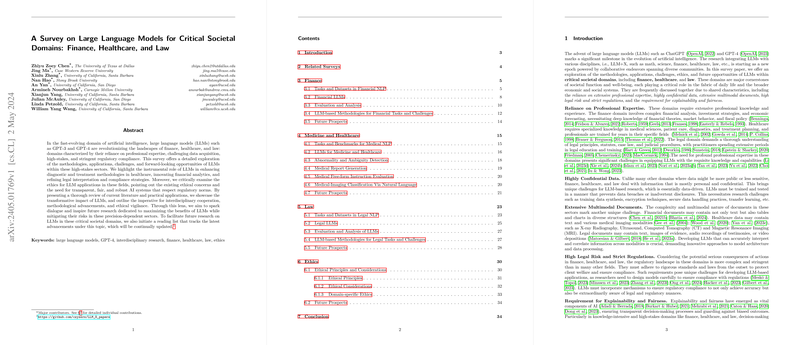

Introduction to LLMs in Critical Sectors

Large language models (LLMs) such as GPT-4 are increasingly used in high-stakes sectors, including finance, healthcare, and law. Because decisions in these sectors substantially affect individual and societal well-being, deploying LLMs there is both promising and challenging.

Given the complexity and high stakes of tasks in these domains, applying LLMs ethically is essential. This overview covers the primary ethical concerns in deploying LLMs across these sectors, along with future directions for addressing them.

Key Ethical Challenges Across Sectors

Using LLMs in finance, healthcare, and law raises several ethical challenges:

- Data Sensitivity and Confidentiality: These domains often involve handling sensitive and confidential information, raising significant concerns about privacy and data protection.

- Need for Explainability: Decisions in these fields can have life-altering consequences. Therefore, the ability of LLMs to provide explainable and interpretable outputs is crucial.

- Bias and Fairness: LLMs must not perpetuate or amplify existing biases, especially in decision-making processes that affect human rights and access to resources.

- Compliance and Regulation: Each sector faces strict regulatory requirements that LLMs must adhere to, complicating their deployment.
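
As a concrete illustration of the data-sensitivity concern, one common precaution is to mask obvious identifiers before text is sent to a model. The sketch below is a minimal example under stated assumptions: the `redact` function and both regular expressions are hypothetical, and they cover only email addresses and US-style Social Security numbers. Real anonymization needs far broader coverage and human review.

```python
import re

# Hypothetical patterns for illustration only; they are not exhaustive.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed category label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com, SSN 123-45-6789.")` returns `"Contact [EMAIL], SSN [SSN]."`. Pattern-based masking of this kind misses names, addresses, and free-form identifiers, so it is best treated as one layer among several.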

Specialized Domain Challenges

Each high-stakes domain presents unique challenges:

- Finance: LLMs are used for tasks like risk assessment and fraud detection, where accuracy and reliability are crucial to avoid financial losses and maintain trust.

- Healthcare: LLMs assist with diagnosis and treatment recommendations. Errors can directly endanger lives, so these systems must be highly reliable and precise.

- Law: Legal applications involve analyzing legal texts and supporting case outcome prediction. Ethical concerns here include fairness, unbiased support, and keeping pace with current laws and regulations.

Addressing Ethical Concerns

The development and deployment of LLMs in these sectors require a strategy that addresses each of these concerns:

- Enhancing Data Privacy: Implementing advanced encryption and anonymization techniques to protect sensitive information processed by LLMs.

- Improving Explainability: Developing methods to make LLM decisions more transparent and understandable to users, enabling them to trust and verify the outputs provided by these models.

- Mitigating Bias: Employing techniques like dataset balancing and bias audit trails to ensure LLMs operate fairly across all demographics.

- Ensuring Compliance: Integrating regulatory compliance checks into the LLM training and deployment processes to align with sector-specific legal standards.
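
One simple form a bias audit can take is comparing how often a system produces a favorable decision for each demographic group. The sketch below is a minimal illustration, not a complete fairness methodology: the function names `selection_rates` and `parity_gap` and the `(group, decision)` record layout are assumptions made for this example, and the gap it reports is only one of several fairness measures.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs, where
    `decision` is truthy for a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += int(bool(decision))
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    if not rates:
        return 0.0  # nothing to compare
    return max(rates.values()) - min(rates.values())
```

With two favorable decisions out of four for group "A" and one out of four for group "B", `parity_gap` returns 0.25. What counts as an acceptable gap is a policy decision for the sector in question and is not something the code can settle.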

Future Directions

Looking forward, several key areas could further enhance the ethical deployment of LLMs in high-stakes domains:

- Robust Testing Frameworks: Developing comprehensive testing and validation frameworks to evaluate the ethical implications of LLMs before full-scale deployment.

- Cross-disciplinary Collaboration: Fostering cooperation between AI developers, domain experts, and ethicists to ensure that LLMs are developed with a thorough understanding of domain-specific needs and ethical requirements.

- Continuous Monitoring and Updating: Establishing systems for the ongoing monitoring of deployed LLMs to quickly identify and rectify any emerging ethical issues or non-compliance.
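
Continuous monitoring can be sketched as a rolling check on how often deployed outputs are flagged as problematic. The example below is a minimal sketch under stated assumptions: the `FlagRateMonitor` class, its window size, and its threshold are hypothetical, and it presumes that some separate review process (human or automated) already decides whether each output is flagged.

```python
from collections import deque


class FlagRateMonitor:
    """Track the share of flagged outputs over the most recent `window` outputs."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        # deque with maxlen drops the oldest observation automatically.
        self.flags = deque(maxlen=window)
        self.threshold = threshold

    def rate(self) -> float:
        """Current flagged share within the window (0.0 when empty)."""
        return sum(self.flags) / len(self.flags) if self.flags else 0.0

    def record(self, flagged: bool) -> bool:
        """Store one observation; return True if the rolling rate exceeds the threshold."""
        self.flags.append(bool(flagged))
        return self.rate() > self.threshold
```

A caller would invoke `record` once per reviewed output and escalate when it returns `True`. With a small window the rate is noisy, so a production system would likely also require a minimum number of observations before alerting.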

Conclusion

The integration of LLMs into finance, healthcare, and law holds remarkable potential but comes with significant ethical responsibilities. By addressing these ethical concerns proactively and comprehensively, we can harness the power of LLMs to benefit these critical sectors while safeguarding the interests and rights of individuals and society as a whole.