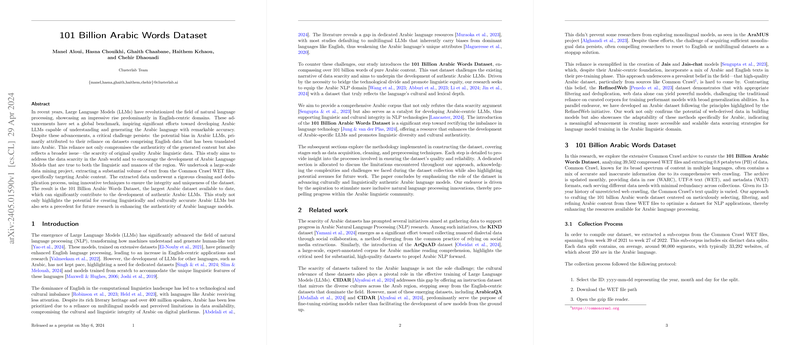

Exploring the 101 Billion Arabic Words Dataset for NLP

The Significance of Arabic in NLP

While the proliferation of large language models (LLMs) has significantly advanced natural language processing (NLP), much of this progress has centered on English. Arabic, spoken by over 400 million people and rich in cultural history, has not seen comparable advances, partly because large, high-quality Arabic datasets are scarce. This gap in resources has slowed the development of robust Arabic-centric models and limited how well the language is represented and processed in digital contexts.

The Introduction of a Vast Arabic Dataset

Responding to these challenges, researchers developed the 101 Billion Arabic Words Dataset, a large consolidated corpus of Arabic text intended to accelerate the development of NLP models that can handle the nuances of the language. With over 101 billion words of pure Arabic content, the dataset aims to bridge the gap in language resources and enable models that are not only performant but also culturally and linguistically authentic.

Methodology: Building a Reliable Dataset

Data Acquisition and Initial Processing

The dataset was assembled from the Common Crawl web archive by filtering and processing web pages to extract their Arabic content. The extraction ran over several months and sifted through 0.8 petabytes of data, which gives a sense of the scale of the effort.
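This article does not describe how Arabic pages were identified. As a minimal sketch, assuming a simple script-based heuristic (a swapped-in technique, not necessarily the researchers' method), a page's extracted text could be kept when most of its letters fall in the main Arabic Unicode block. The function names and the 0.5 threshold are hypothetical.

```python
# Main Arabic Unicode block (U+0600-U+06FF). Supplementary blocks such as
# Arabic Supplement (U+0750-U+077F) are ignored in this simplified sketch.
ARABIC_START, ARABIC_END = 0x0600, 0x06FF


def arabic_ratio(text: str) -> float:
    """Return the fraction of alphabetic characters that fall in the Arabic block."""
    letters = [ch for ch in text if ch.isalpha()]  # digits, punctuation, marks are skipped
    if not letters:
        return 0.0
    arabic = sum(1 for ch in letters if ARABIC_START <= ord(ch) <= ARABIC_END)
    return arabic / len(letters)


def is_mostly_arabic(text: str, threshold: float = 0.5) -> bool:
    """Hypothetical page-level filter: keep text whose letters are mostly Arabic."""
    return arabic_ratio(text) >= threshold


if __name__ == "__main__":
    for page in ["مرحبا بالعالم", "Hello world", "NLP باللغة العربية"]:
        print(round(arabic_ratio(page), 2), is_mostly_arabic(page))
```

A ratio over letters (rather than all characters) keeps numbers, URLs, and punctuation from diluting the score; a real pipeline would more likely use a trained language-identification model.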

Cleaning and Preprocessing

Before a dataset can be used to train models, it must be cleaned and preprocessed to remove noise and ensure consistency. The researchers took the following steps to improve the quality of the Arabic data:

- URL Filtering and Deduplication: The first steps filtered out undesirable URLs and removed duplicate documents so that the dataset retains only unique, relevant content.

- Textual Cleaning Procedures: This included removing HTML tags, fixing encoding issues, handling special characters, and discarding text segments that were either too short or too long.

- Normalization and Dediacritization: To standardize the text and reduce computational requirements, characters were normalized and diacritical marks were removed.
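The cleaning steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the researchers' pipeline: the word-count limits, the regex tag stripper, the set of diacritics removed, and the choice to map alef variants (أ, إ, آ) to bare alef are all hypothetical, and deduplication here is exact matching on a SHA-256 hash of the normalized text. URL filtering is omitted because the blocklist used is not given.

```python
import hashlib
import re
import unicodedata
from typing import Iterable, List, Optional

# Hypothetical length limits (in whitespace-separated words); the real cut-offs are not stated.
MIN_WORDS, MAX_WORDS = 5, 100_000

# Crude tag stripper for illustration; a production pipeline would use an HTML parser.
TAG_RE = re.compile(r"<[^>]+>")
# Tanwin, harakat, shadda and sukun (U+064B-U+0652) plus the dagger alef (U+0670).
DIACRITICS_RE = re.compile("[\u064B-\u0652\u0670]")
TATWEEL = "\u0640"  # elongation character, carries no meaning
# Assumed normalization: map hamza/madda alef variants to bare alef.
ALEF_MAP = str.maketrans({"\u0623": "\u0627", "\u0625": "\u0627", "\u0622": "\u0627"})


def normalize_arabic(text: str) -> str:
    """Normalize characters and strip diacritics (one possible scheme, not the dataset's)."""
    text = unicodedata.normalize("NFKC", text)  # fold presentation forms to base letters
    text = DIACRITICS_RE.sub("", text)  # dediacritize
    text = text.replace(TATWEEL, "")  # remove elongation
    text = text.translate(ALEF_MAP)  # unify alef variants
    return re.sub(r"\s+", " ", text).strip()  # collapse whitespace


def clean(raw: str) -> Optional[str]:
    """Strip tags, normalize, and drop texts that are too short or too long."""
    text = normalize_arabic(TAG_RE.sub(" ", raw))
    n_words = len(text.split())
    if n_words < MIN_WORDS or n_words > MAX_WORDS:
        return None
    return text


def clean_and_deduplicate(docs: Iterable[str]) -> List[str]:
    """Clean each document and keep only the first copy of each exact duplicate."""
    seen = set()
    kept = []
    for raw in docs:
        text = clean(raw)
        if text is None:
            continue
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(text)
    return kept


if __name__ == "__main__":
    docs = [
        "<p>مُحَمَّدٌ أحمد إبراهيم آدم كتب</p>",
        "محمد احمد ابراهيم ادم كتب",  # duplicate once normalized
        "قصير",  # too short
    ]
    print(clean_and_deduplicate(docs))
```

Hashing after normalization means two pages that differ only in diacritics, tatweel, or alef spelling collapse to one entry; catching near-duplicates would need a fuzzier technique such as MinHash.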

Advanced Text Cleaning Techniques

Yamane's formula was used to determine the size of a representative sample of documents, which was then examined in depth for issues such as inappropriate content or formatting errors. Tools including Python, Rust, and AWS services were used to process the large volume of data efficiently.
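Yamane's formula gives the sample size n needed for a population of N items at margin of error e: n = N / (1 + N·e²). A minimal sketch of how it might be used to size a manual-inspection sample follows; the 5% default margin, the simple random sampling, and the helper names are assumptions, since the actual parameters are not stated here.

```python
import math
import random
from typing import List, Sequence


def yamane_sample_size(population: int, margin_of_error: float = 0.05) -> int:
    """Sample size n = N / (1 + N * e^2) from Yamane's formula, rounded up to a whole item."""
    if population <= 0:
        raise ValueError("population must be positive")
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be between 0 and 1")
    n = population / (1 + population * margin_of_error ** 2)
    return math.ceil(n)


def draw_inspection_sample(doc_ids: Sequence[int], margin_of_error: float = 0.05,
                           seed: int = 0) -> List[int]:
    """Hypothetical helper: pick a random subset of documents for manual review."""
    k = yamane_sample_size(len(doc_ids), margin_of_error)
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return rng.sample(list(doc_ids), k)


if __name__ == "__main__":
    for n_docs in (10_000, 1_000_000, 1_000_000_000):
        print(n_docs, yamane_sample_size(n_docs))
```

Because n approaches 1/e² as N grows (400 documents at e = 0.05), the sample stays small even for billions of documents, which is what makes manual inspection of such a large corpus practical at all.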

Challenges and Limitations

The scale of this dataset and the complexity of Arabic script presented unique challenges:

- Ensuring Quality at Scale: Because of the dataset's size, manual inspection could cover only a sample of documents, so text quality cannot be fully guaranteed.

- Ethical Concerns: Filtering out inappropriate and biased content was critical yet difficult, and the researchers acknowledged that URL-based filtering cannot catch all sensitive content.

Implications and Future Directions

The creation and refinement of the 101 Billion Arabic Words Dataset mark a significant step toward closing the gap in language resources available for Arabic. The dataset provides a solid foundation for developing Arabic LLMs that respect the language's linguistic and cultural nuances.

Furthermore, this extensive dataset not only has the potential to drive advancements in Arabic NLP but also sets a precedent for similar endeavors in other underrepresented languages. As more data becomes available and computational resources grow, we can expect to see the rise of more linguistically diverse and culturally aware AI systems.

Conclusion

The development of the 101 Billion Arabic Words Dataset is a substantial step toward more equitable progress in NLP. While it opens up numerous possibilities for research and applications in Arabic, it also highlights the ongoing need for resources that cover a broader range of languages, promising a more inclusive digital future.