Progressive Feedforward Collapse of ResNet Training

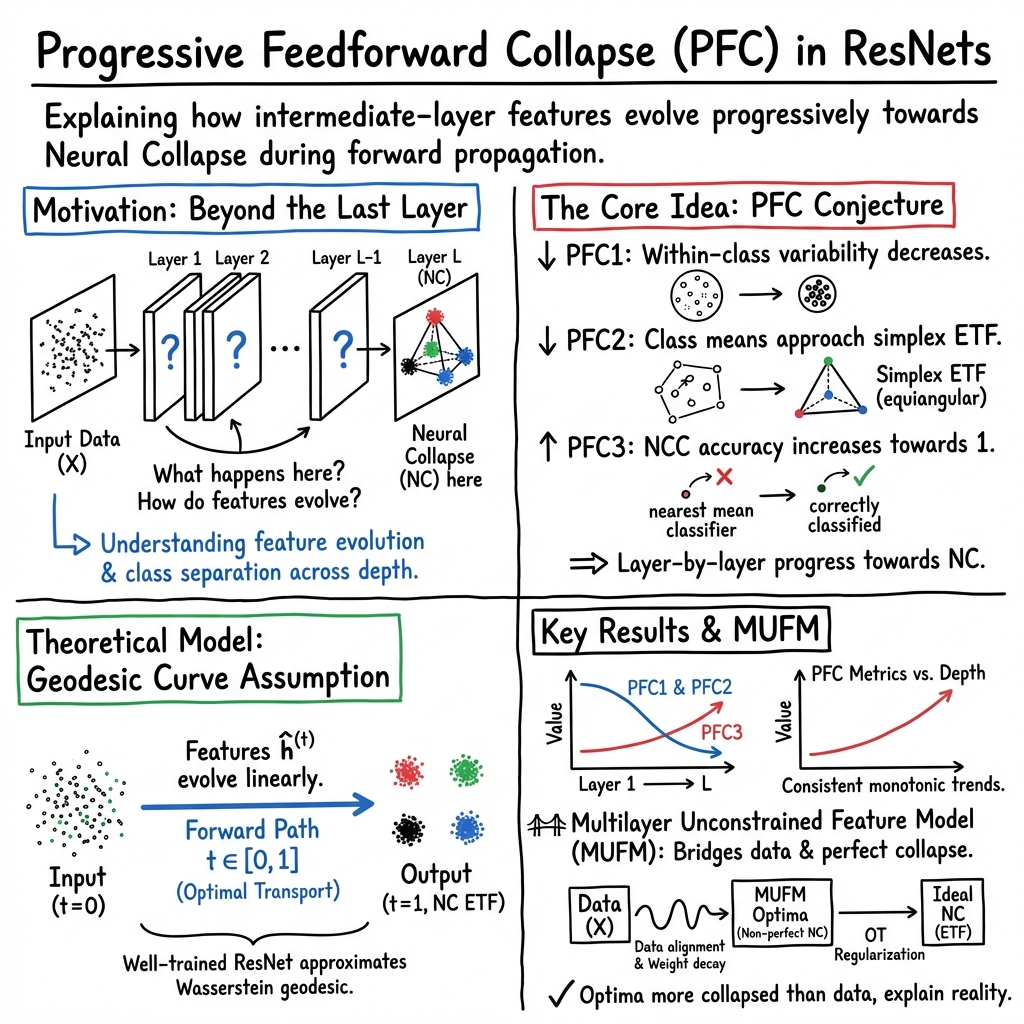

Abstract: Neural collapse (NC) is a simple and symmetric phenomenon observed in deep neural networks (DNNs) at the terminal phase of training: the last-layer features collapse to their class means and form a simplex equiangular tight frame aligned with the classifier vectors. However, how the last-layer features relate to the data and to the intermediate layers during training remains unexplored. To this end, we characterize the geometry of the intermediate layers of ResNet and propose a novel conjecture, progressive feedforward collapse (PFC), which claims that the degree of collapse increases during the forward propagation of DNNs. We derive a transparent model for the well-trained ResNet, building on the result that ResNet with weight decay approximates the geodesic curve in Wasserstein space at the terminal phase. The PFC metrics indeed decrease monotonically with depth on various datasets. We propose a new surrogate model, the multilayer unconstrained feature model (MUFM), which connects intermediate layers through an optimal transport regularizer. The optimal solution of MUFM is inconsistent with NC but is more concentrated relative to the input data. Overall, this study extends NC to PFC to model the collapse phenomenon of intermediate layers and its dependence on the input data, shedding light on the theoretical understanding of ResNet in classification problems.
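To make the collapse geometry above concrete, here is a minimal NumPy sketch under our own assumptions. `simplex_etf` builds the simplex equiangular tight frame that NC predicts, and `nc1_metric` computes the standard within-class variability measure tr(Σ_W Σ_B^+)/K of Papyan et al., which shrinks as features concentrate on their class means. Evaluating such a measure layer by layer is one way to track collapse across depth, but the exact PFC metrics used in this paper may differ. All function names, the `tol` cutoff, and the toy data in `toy_features` are hypothetical and are not taken from the paper.

```python
import numpy as np


def simplex_etf(num_classes: int) -> np.ndarray:
    """Columns form a simplex equiangular tight frame (ETF) in R^K.

    Each column has unit norm and every pair has cosine -1/(K-1), the geometry
    NC predicts for centered class means and classifier vectors
    (up to rotation and scaling).
    """
    if num_classes < 2:
        raise ValueError("a simplex ETF needs at least two classes")
    k = num_classes
    centering = np.eye(k) - np.ones((k, k)) / k  # I - (1/K) 11^T
    return np.sqrt(k / (k - 1)) * centering


def nc1_metric(features: np.ndarray, labels: np.ndarray, tol: float = 1e-10) -> float:
    """Within-class variability tr(Sigma_W Sigma_B^+) / K for one layer.

    features: (N, d) array of features taken from a single layer.
    labels:   (N,) integer class labels.
    Smaller values mean the features sit closer to their class means.
    """
    classes = np.unique(labels)
    global_mean = features.mean(axis=0)
    dim = features.shape[1]
    sigma_w = np.zeros((dim, dim))
    sigma_b = np.zeros((dim, dim))
    for c in classes:
        fc = features[labels == c]
        mu_c = fc.mean(axis=0)
        centered = fc - mu_c
        sigma_w += centered.T @ centered      # within-class scatter
        diff = (mu_c - global_mean)[:, None]
        sigma_b += diff @ diff.T              # between-class scatter
    sigma_w /= features.shape[0]
    sigma_b /= len(classes)
    # Pseudo-inverse of the (rank <= K-1) between-class covariance,
    # dropping eigenvalues that are zero up to round-off.
    eigvals, eigvecs = np.linalg.eigh(sigma_b)
    keep = eigvals > tol * eigvals.max()
    sigma_b_pinv = (eigvecs[:, keep] / eigvals[keep]) @ eigvecs[:, keep].T
    return float(np.trace(sigma_w @ sigma_b_pinv) / len(classes))


def toy_features(scale, num_classes=4, n_per_class=50, dim=8, seed=0):
    """Hypothetical toy data: fixed class means plus within-class noise times `scale`."""
    rng = np.random.default_rng(seed)
    means = 3.0 * rng.normal(size=(num_classes, dim))
    labels = np.repeat(np.arange(num_classes), n_per_class)
    noise = rng.normal(size=(labels.size, dim))
    for c in range(num_classes):
        # Center the noise within each class so the class means stay equal to `means`.
        noise[labels == c] -= noise[labels == c].mean(axis=0)
    return means[labels] + scale * noise, labels


if __name__ == "__main__":
    etf = simplex_etf(4)
    print(np.round(etf.T @ etf, 3))  # 1 on the diagonal, -1/3 elsewhere
    # A smaller `scale` stands in for a deeper, more collapsed layer.
    for layer, scale in enumerate([1.0, 0.5, 0.25, 0.1]):
        feats, labels = toy_features(scale)
        print(f"layer {layer}: NC1 = {nc1_metric(feats, labels):.4f}")
```

The toy run is not ResNet data: because the within-class noise is centered, the class means (and hence Σ_B) stay fixed, so shrinking the noise by a factor s scales the metric by exactly s². This mimics the monotone decrease across depth that PFC describes, without claiming anything about real trained networks.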
