LLMs and User Trust: Does Expressing Uncertainty Help?

Introduction to the Study

Large language models (LLMs) are being integrated into a growing range of applications that shape everyday decisions. However, these models can produce convincing but incorrect answers, which can lead users to rely on them too heavily. Addressing this overreliance matters most in high-stakes settings such as searching for medical information. One proposed mitigation is for LLMs to express uncertainty about the information they provide.

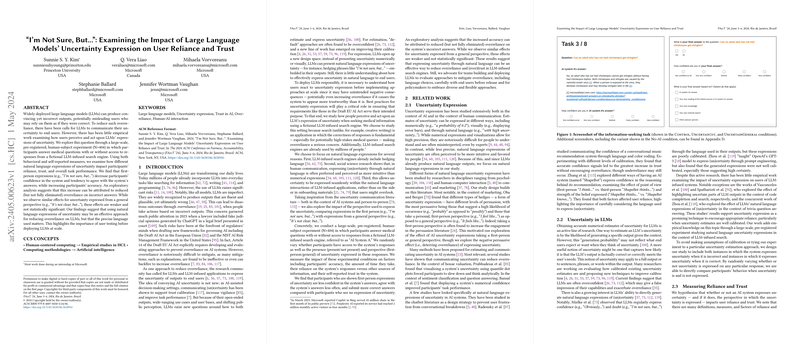

The paper explored how different formulations of uncertainty in LLM responses influence user reliance and trust. Using a fictional LLM-infused search engine, it assessed how participants responded when the engine expressed uncertainty in the first person ("I'm not sure, but...") versus from a general perspective ("It's not clear, but...").

Key Findings

Expressing uncertainty in LLM outputs changed user behavior in several ways:

- Trust and Reliance: When the LLM expressed uncertainty, particularly in the first person, users relied less on the provided answers. They were less likely to agree with the system’s answers when uncertainty was indicated.

- Confidence Levels: Responses that included uncertainty expressions led to lower user confidence in the LLM’s outputs. This effect was somewhat stronger when the uncertainty was expressed in the first person.

- Accuracy and Decision Making: Expressing uncertainty also increased the accuracy of user responses. This suggests that uncertainty cues may prompt users to process information more cautiously and to verify it.

- Use of External Information: Users were more likely to consult linked sources or perform their own searches when the LLM expressed uncertainty. This indicates an increased effort to seek verification when provided with uncertain responses.

Implications for AI Development and Policy

The findings highlight the nuanced role of language in user interactions with AI systems. Expressing uncertainty can indeed help mitigate overreliance, particularly when LLMs are wrong. However, the manner of expression (first-person vs. general) and the context of use need careful consideration.

For AI developers, these results underscore the importance of involving end-users in testing different formulations of uncertainty expression during the development phase. This user-centered approach can enhance the effectiveness of such strategies in real-world applications.
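As a rough illustration of what such testing could involve, the sketch below renders one answer under different uncertainty formulations and summarizes logged trials per condition. It is a minimal sketch, not the paper's method: the condition names, the `Trial` fields, and the `overreliance_rate` definition (the share of wrong-answer trials in which the user still agreed with the system) are assumptions made for illustration. Only the two prefix phrasings come from the examples quoted above.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical condition names. The two phrasings are the examples quoted
# in this article; "control" (no uncertainty expression) is an assumption.
PREFIXES = {
    "control": "",
    "first_person": "I'm not sure, but ",
    "general": "It's not clear, but ",
}


def render_answer(answer: str, condition: str) -> str:
    """Prepend the uncertainty expression for the given condition."""
    return PREFIXES[condition] + answer


@dataclass
class Trial:
    """One logged participant response (hypothetical schema)."""
    condition: str        # key into PREFIXES
    system_correct: bool  # was the system's answer correct?
    user_agreed: bool     # did the user's final answer match the system's?
    user_correct: bool    # was the user's final answer correct?


def summarize(trials):
    """Return agreement, accuracy, and overreliance rates per condition."""
    by_condition = defaultdict(list)
    for trial in trials:
        by_condition[trial.condition].append(trial)

    summary = {}
    for condition, group in by_condition.items():
        # Trials where the system was wrong: agreeing here is overreliance.
        wrong = [t for t in group if not t.system_correct]
        summary[condition] = {
            "agreement_rate": sum(t.user_agreed for t in group) / len(group),
            "accuracy": sum(t.user_correct for t in group) / len(group),
            "overreliance_rate": (
                sum(t.user_agreed for t in wrong) / len(wrong) if wrong else None
            ),
        }
    return summary
```

Comparing the `summarize` output across conditions for a pilot group of users would give a first indication of whether a given phrasing shifts agreement and accuracy in the intended direction. A real evaluation would also need an appropriate statistical analysis.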

From a policy perspective, the paper suggests that flexibility and evidence-based approaches are crucial in drafting regulations. Policies that mandate or encourage transparency about AI uncertainties should consider variability in user interpretation and the potential impacts on user trust and behavior.

Considerations for Future Research

The paper has limitations. Its controlled experiment may not capture the full complexity of real-life interactions with LLMs. Future research could explore other contexts, the long-term effects of repeated interactions with such systems, and cultural variation in how uncertainty cues are interpreted. Further work is also needed on the balance between reducing overreliance and avoiding underreliance, where users dismiss helpful AI contributions because of expressed uncertainty.

Conclusion

Carefully integrating uncertainty expressions into LLM outputs is a promising way to reduce overreliance and improve user interactions. However, the wording of these expressions should be tailored to user needs and tested thoroughly in diverse real-world scenarios before their benefits and limitations can be fully understood.