Exploring the Depths of In-Context Learning with Long Context Models

Introduction to In-Context Learning with Long Contexts

Advances in large language models (LLMs), particularly their longer context windows, are changing how in-context learning (ICL) can be used. ICL has traditionally been studied with only a handful of demonstrations in the prompt. Long-context models push it toward a new regime in which the context can hold hundreds or thousands of examples, in some cases an entire training dataset. The paper examines ICL at this scale across multiple datasets and models, and compares it with two alternatives: retrieving relevant examples for each input, and finetuning the model on the data.

Key Findings: Performance in Long-context ICL

The researchers found that ICL performance keeps improving as the number of demonstrations grows into the hundreds and thousands. This indicates that LLMs can continue to benefit from additional in-context data well beyond the few-shot regime. The main points of their analysis are:

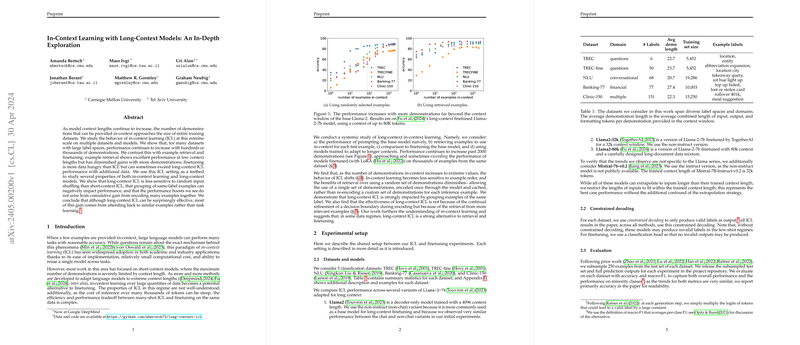

- Performance Scaling: As the number of in-context examples grows very large, ICL behaves differently than in the few-shot setting. Accuracy continues to improve, and performance becomes less sensitive to the order in which the demonstrations are shuffled. The experiments extend to as many as 2,000 demonstrations.

- Comparing Retrieval and Random Sampling: With few demonstrations, retrieving examples relevant to each input significantly outperformed using a random subset. As the number of demonstrations increased, however, this advantage shrank, suggesting that careful retrieval matters less when many demonstrations are available.

- Inferiority of Label Grouping: Ordering the demonstrations so that examples with the same label appear together hurt performance compared with randomly mixing the labels. Interleaved examples appear to help the model learn the task more effectively.
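
The demonstration strategies compared above (random sampling, per-input retrieval, and label-grouped versus randomly mixed ordering) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's code. Every function name is hypothetical, the word-overlap (Jaccard) score stands in for a real retriever, and the `Input:`/`Label:` prompt template is invented for the example.

```python
import random

def tokenize(text):
    # Lowercased bag of words; deliberately simple for illustration.
    return set(text.lower().split())

def random_demos(pool, k, seed=0):
    # Random subset of k demonstrations, independent of the test input.
    rng = random.Random(seed)
    return rng.sample(pool, k)

def retrieved_demos(pool, query, k):
    # Rank the pool by Jaccard word overlap with the query and keep the top k.
    # Hypothetical stand-in for a real retriever (e.g. BM25 or dense embeddings).
    q = tokenize(query)

    def score(example):
        t = tokenize(example["text"])
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(pool, key=score, reverse=True)[:k]

def shuffle_demos(demos, seed=0):
    # Random order: labels end up interleaved.
    rng = random.Random(seed)
    shuffled = list(demos)
    rng.shuffle(shuffled)
    return shuffled

def group_by_label(demos):
    # Label-grouped order: all examples with the same label are contiguous.
    # sorted() is stable, so the original order is kept within each label.
    return sorted(demos, key=lambda example: example["label"])

def build_prompt(demos, query):
    # Hypothetical "Input:/Label:" template; the test input goes last.
    blocks = [f"Input: {ex['text']}\nLabel: {ex['label']}" for ex in demos]
    blocks.append(f"Input: {query}\nLabel:")
    return "\n\n".join(blocks)

# Toy data, invented for illustration.
pool = [
    {"text": "the movie was great", "label": "positive"},
    {"text": "terrible plot and acting", "label": "negative"},
    {"text": "great acting overall", "label": "positive"},
    {"text": "boring and slow", "label": "negative"},
]
query = "great movie"
print(build_prompt(retrieved_demos(pool, query, k=2), query))
```

Note the structural difference: `retrieved_demos` depends on the query, so each test input gets a different prompt, whereas `random_demos` with a fixed seed returns the same demonstrations for every input.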

These insights point toward cheaper yet still effective ways to use long-context ICL. Because example selection matters less at scale, a single large set of demonstrations can be encoded once, cached, and reused across many different test inputs instead of building a new prompt for each one.
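
The cost argument can be made concrete with simple token arithmetic. The sketch below is a back-of-the-envelope model, not a measurement. It assumes that a fixed demonstration prefix can be encoded once and its cached state reused for each test input, while per-input retrieval forces the whole prompt to be re-encoded every time. The function name and the token counts in the example are hypothetical.

```python
def tokens_encoded(prefix_tokens, query_tokens, num_queries, shared_prefix):
    """Total tokens the model must encode to answer num_queries test inputs."""
    if shared_prefix:
        # Fixed demonstrations: encode the prefix once, then only each query.
        return prefix_tokens + num_queries * query_tokens
    # Per-query demonstrations (e.g. retrieval): the full prompt is new each time.
    return num_queries * (prefix_tokens + query_tokens)

# Hypothetical sizes: a 100,000-token demonstration prefix and 50-token inputs.
cached = tokens_encoded(100_000, 50, 1_000, shared_prefix=True)
fresh = tokens_encoded(100_000, 50, 1_000, shared_prefix=False)
print(cached, fresh)  # 150000 100050000
```

This counts only the tokens that must be encoded. Each query's tokens still attend over the full cached prefix, so per-query attention cost continues to grow with the number of demonstrations.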

Comparison with Finetuning

On the question of finetuning versus ICL, the paper presents a nuanced comparison:

- Data Hunger: Finetuning was more data-hungry than ICL and generally needed more examples to reach comparable performance, although with sufficient data it sometimes surpassed ICL.

- Performance Gains: For datasets with larger label spaces, finetuning did not consistently outperform ICL, signaling a nuanced interaction between task complexity, label diversity, and the chosen approach (ICL or finetuning).

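These findings suggest that finetuning is not always as clearly superior as often assumed. As the number of available examples grows, long-context ICL can use them directly and often remains competitive, even though finetuning can pull ahead once enough data is available.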

Future Implications and Theoretical Insights

The paper points to several future directions and theoretical implications for AI and machine learning:

- Efficiency vs. Effectiveness: Adding more examples keeps improving ICL, but every extra demonstration lengthens the prompt and raises inference cost. The trade-off between computational efficiency and learning effectiveness will therefore become a central consideration in system design.

- The Role of Memory and Recall in LLMs: Careful example selection matters less as the context grows, which hints that LLMs can draw effectively on a broad pool of in-context examples without meticulous curation.

- Potential for Less Supervised Learning: The ability of LLMs to learn from large context windows filled with less curated examples suggests a future in which less supervised, yet more robust, models become commonplace.

Speculating on What Lies Ahead

Looking forward, in-context learning with long-context models is likely to become more closely tied to the development of new model architectures and, possibly, to new machine-learning paradigms that rely on less supervision and make fuller use of available data. Beyond deepening our understanding of current model capabilities, this research hints at a future in which models become more autonomous, learning from vast, unstructured datasets with less human oversight and without costly retraining cycles.