Understanding Adapt-LLM: A New Approach to Teaching LLMs When to Retrieve Information

Introduction to Adaptive Retrieval in LLMs

When building a system that answers questions with LLMs, a key design decision is whether to pull in external data to inform the answer. Traditionally, there are two main strategies:

- Closed Book: This method relies solely on what the LLM has learned during training (its 'parametric memory'), which may become outdated or limited.

- Open Book: This approach teams the LLM with an Information Retrieval (IR) system to fetch relevant external information, hence potentially offering more accurate and up-to-date answers.

However, each method has its drawbacks. A Closed Book model cannot cover every knowledge area comprehensively, while Open Book retrieval can be overkill for simpler queries that the LLM can handle on its own. This is where adaptive retrieval comes into play: the model retrieves only when it needs to.

The Innovation of Adapt-LLM

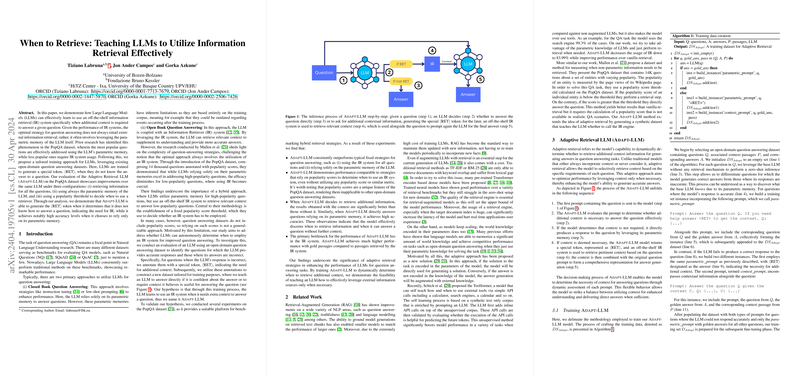

Adapt-LLM is a new flavor of LLM that determines on-the-fly whether it needs external information to answer a question effectively. It does this by generating a special token, <RET>, whenever it encounters a question it cannot answer with high confidence based on its internal knowledge alone. Here’s how Adapt-LLM handles the question-answering process:

- Initial Question Handling: Adapt-LLM first tries to answer the question using its existing knowledge.

- Decision Point: If unsure, it outputs <RET>, indicating the need for external data.

- Fetching Data: It then uses an IR system to fetch the necessary information.

- Re-evaluation: With new data, Adapt-LLM attempts to answer the question again.

This process allows Adapt-LLM to leverage its trained ability to discern when external help is needed, optimizing both its accuracy and efficiency.
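The four steps above can be sketched as a two-pass loop. This is a minimal illustration, not the authors' implementation: `generate` and `retrieve` are hypothetical callables standing in for the fine-tuned model and the IR system, and the prompt templates are assumptions.

```python
from typing import Callable, Dict, List, Union

RET_TOKEN = "<RET>"


def answer_adaptively(
    question: str,
    generate: Callable[[str], str],        # hypothetical: prompt -> model output
    retrieve: Callable[[str], List[str]],  # hypothetical: question -> passages
) -> Dict[str, Union[str, bool]]:
    """Two-pass question answering: retrieve only when the model asks for it."""
    # First pass: the model sees only the question.
    first = generate(f"Question: {question}\nAnswer:").strip()
    if RET_TOKEN not in first:
        return {"answer": first, "retrieved": False}

    # The model emitted <RET>, so fetch context and ask again.
    context = "\n".join(retrieve(question))
    second = generate(
        f"Context: {context}\nQuestion: {question}\nAnswer:"
    ).strip()
    return {"answer": second, "retrieved": True}


# Toy stand-ins for the model and the IR system (illustration only).
def toy_generate(prompt: str) -> str:
    if prompt.startswith("Context:"):
        return "Canberra"
    if "capital of France" in prompt:
        return "Paris"
    return RET_TOKEN


def toy_retrieve(question: str) -> List[str]:
    return ["Canberra is the capital city of Australia."]


direct = answer_adaptively("What is the capital of France?", toy_generate, toy_retrieve)
assisted = answer_adaptively("What is the capital of Australia?", toy_generate, toy_retrieve)
```

With these toy stand-ins, the first question is answered directly and the second triggers retrieval. The IR call happens only on the second path, which is where the efficiency gain over an always-retrieve setup comes from.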

Training Adapt-LLM: A Closer Look

The training of Adapt-LLM is interesting because it involves creating a scenario where the model can learn to judge the necessity of external data. Here’s a simplified breakdown:

- Direct Answers: Questions the model answers correctly in a zero-shot setting become Q&A pairs that reinforce its confidence in its internal knowledge.

- Indicating Uncertainty: For questions answered incorrectly, the model is trained to output <RET>, prompting it to seek external information in future similar scenarios.

- Incorporating External Data: For these uncertain cases, Q&A pairs are also created using external data to teach the model how to integrate new information effectively.
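The three cases above amount to a rule for building the fine-tuning set. The sketch below is one way to express it, under stated assumptions: `zero_shot_answer` and `is_correct` are hypothetical helpers, each Q&A pair is assumed to carry a `context` field with the external passage, and the prompt templates are illustrative rather than the ones used by the authors.

```python
from typing import Callable, Dict, List

RET_TOKEN = "<RET>"


def build_training_examples(
    qa_pairs: List[Dict[str, str]],
    zero_shot_answer: Callable[[str], str],  # hypothetical: base model, no context
    is_correct: Callable[[str, str], bool],  # hypothetical: prediction vs. gold
) -> List[Dict[str, str]]:
    """Turn Q&A pairs into prompt/target examples for adaptive retrieval."""
    examples = []
    for pair in qa_pairs:
        question, gold = pair["question"], pair["answer"]
        closed_prompt = f"Question: {question}\nAnswer:"
        if is_correct(zero_shot_answer(question), gold):
            # Direct answer: the model already knows this one.
            examples.append({"prompt": closed_prompt, "target": gold})
        else:
            # Uncertainty: teach the model to ask for retrieval...
            examples.append({"prompt": closed_prompt, "target": RET_TOKEN})
            # ...and to answer once context is supplied.
            ctx_prompt = (
                f"Context: {pair['context']}\nQuestion: {question}\nAnswer:"
            )
            examples.append({"prompt": ctx_prompt, "target": gold})
    return examples


# Toy data and helpers (illustration only).
toy_pairs = [
    {"question": "What is 2 + 2?", "answer": "4", "context": "2 + 2 equals 4."},
    {"question": "What is 3 + 3?", "answer": "6", "context": "3 + 3 equals 6."},
]
toy_examples = build_training_examples(
    toy_pairs,
    zero_shot_answer=lambda q: "4",  # a "model" that always says 4
    is_correct=lambda pred, gold: pred.strip().lower() == gold.strip().lower(),
)
```

Each incorrectly answered question yields two examples, so the model learns both when to request retrieval and how to use the retrieved context.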

Experiments and Results

Testing showed that Adapt-LLM performs better than both always-retrieve and never-retrieve baselines. Specifically, it excels at identifying when external data is necessary, and its answers are more accurate when it chooses to retrieve data. For instance, the analysis on the PopQA dataset showed:

- Adapt-LLM's Smart Decisions: It effectively determined when to depend on its own knowledge and when to fetch external data.

- Comparative Effectiveness: Adapt-LLM often outperforms fixed strategy models, demonstrating both a practical understanding of its own knowledge limits and an effective use of IR systems when needed.

Implications and Future Directions

The development of Adapt-LLM is a significant step towards more intelligent AI question-answering systems, adept not just at retrieving information but at knowing when retrieval is actually necessary. It is a move towards more autonomous, efficient systems, which bodes well for future applications across domains where information needs are continuously changing.

Looking ahead, exploring techniques that refine the IR system's efficiency or the model’s decision strategies could further enhance performance, making adaptive retrieval models more resilient and capable across an even broader array of questions and topics.

Conclusion

Adapt-LLM represents an exciting advancement in the pursuit of more intelligent, context-aware AI systems. By balancing its internal knowledge with the ability to seek external help autonomously, it paves the way for more robust, knowledgeable LLMs that can adapt their strategies to the demands of the query. This not only broadens the potential applications of LLMs but also improves their operational efficiency, laying promising groundwork for future research and development.