Exploring Reinforced Token Optimization for Reinforcement Learning from Human Feedback

Introduction to Reinforced Token Optimization (RTO)

In AI and machine learning, aligning large language models (LLMs) with human feedback is crucial for making them useful and acceptable in practice. Reinforcement Learning from Human Feedback (RLHF) has been the prevalent approach to training LLMs to behave in line with human values and intentions. This blog post explores a newer approach called Reinforced Token Optimization (RTO), which has shown promising results by training LLMs with token-level rewards derived from human preferences.

Issues with Classical RLHF Approaches

Classical methods such as Proximal Policy Optimization (PPO) have been used successfully to train models on sparse, sentence-level rewards, but they come with challenges such as training instability and sample inefficiency, which are especially pronounced in open-source implementations. In practice, these approaches often struggle to keep response length stable and to avoid sudden drops in reward during training, which significantly limits their effectiveness.
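For context, here is a minimal sketch of how many open-source PPO pipelines turn a sentence-level reward into per-token rewards. This InstructGPT-style convention is an assumption here rather than something this post specifies, and the function name and `kl_coef` default are hypothetical. Every token receives only a KL penalty toward the reference model, while the reward model's score lands on the last token alone, which is what makes the signal sparse.

```python
from typing import List


def sentence_level_rewards(
    rm_score: float,
    logp_policy: List[float],
    logp_ref: List[float],
    kl_coef: float = 0.1,
) -> List[float]:
    """Per-token PPO rewards when the reward model scores only whole responses.

    Each token gets a KL penalty, -kl_coef * (log pi(a|s) - log pi_ref(a|s)),
    computed from the sampled token's log-probability under the current policy
    and the frozen reference policy. The reward model's scalar score is added
    to the final token only, so the preference signal is sparse.
    """
    if not logp_policy or len(logp_policy) != len(logp_ref):
        raise ValueError("need matching, non-empty per-token log-probabilities")
    rewards = [-kl_coef * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    rewards[-1] += rm_score  # the only token that sees the human-preference signal
    return rewards


if __name__ == "__main__":
    # Policy equals reference here, so every KL penalty is zero.
    print(sentence_level_rewards(1.5, [-1.0, -2.0, -0.5], [-1.0, -2.0, -0.5]))
```

With the policy equal to the reference, the first two tokens receive a reward of zero and the last receives 1.5: nothing tells the learner which earlier tokens contributed to the score.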

How RTO Enhances RLHF

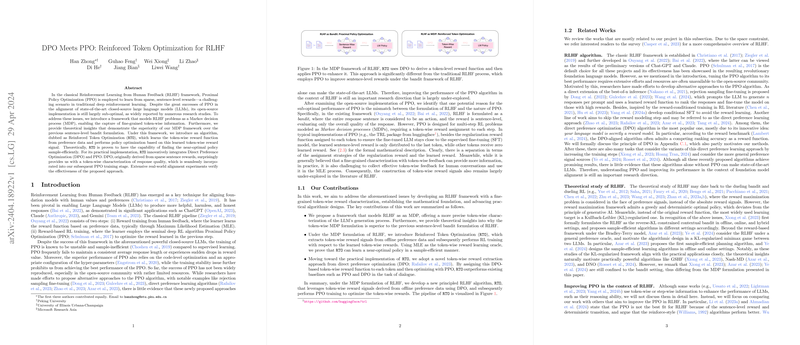

The RTO approach addresses these issues by formulating RLHF as a Markov decision process (MDP) instead of the traditional bandit formulation, in which the whole response receives a single sentence-level reward. In the MDP view, each generated token is an action taken from a state consisting of the prompt and the tokens generated so far, so rewards can be assigned token by token, matching the sequential way LLMs generate text. Here's a breakdown of how RTO improves the RLHF process:
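A minimal sketch of the token-level MDP view, assuming string tokens and no discounting (both simplifications; the helper names are hypothetical). It splits a response into (state, action) transitions and compares reward-to-go under a sparse, bandit-style reward with a dense token-wise reward that has the same total.

```python
from typing import List, Tuple

State = Tuple[str, ...]  # prompt tokens followed by the response tokens generated so far


def to_transitions(prompt: List[str], response: List[str]) -> List[Tuple[State, str]]:
    """Split one response into token-level MDP transitions (state, action).

    The state at step h is the prompt plus the first h-1 response tokens and the
    action is the h-th response token; the next state is simply the current
    state with the action appended, so transitions are deterministic.
    """
    transitions: List[Tuple[State, str]] = []
    state: State = tuple(prompt)
    for token in response:
        transitions.append((state, token))
        state = state + (token,)
    return transitions


def rewards_to_go(rewards: List[float]) -> List[float]:
    """Undiscounted return from each token position to the end of the response."""
    returns: List[float] = []
    running = 0.0
    for r in reversed(rewards):
        running += r
        returns.append(running)
    return returns[::-1]


if __name__ == "__main__":
    for state, action in to_transitions(["Q:", "2+2?"], ["A:", "4"]):
        print(state, "->", action)
    # Bandit-style: one scalar for the whole response, placed on the last token.
    print(rewards_to_go([0.0, 0.0, 1.0]))     # [1.0, 1.0, 1.0]
    # Token-wise: same total (1.0), but each token gets its own credit.
    print(rewards_to_go([0.5, -0.25, 0.75]))  # [1.0, 0.5, 0.75]
```

Under the sparse reward every token sees the same return of 1.0, so the learner cannot tell which tokens helped; the dense rewards give each position its own credit while summing to the same total.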

- Token-wise Reward Characterization: RTO assigns rewards at the token level, which provides a denser feedback signal than a single sentence-level reward. This lets the model attribute credit to individual tokens during generation and adjust its generation policy more precisely and effectively.

- Integration of Direct Preference Optimization: RTO incorporates Direct Preference Optimization (DPO) into the PPO training stage. This is surprising yet intuitive: although DPO is derived from sparse, sentence-level preference rewards, the policy it learns turns out to characterize token-wise response quality well, and RTO uses that signal as a dense reward during PPO training.

- Improved Sample Efficiency: In theory, RTO can find a near-optimal policy far more sample-efficiently, a substantial improvement over traditional methods that may require much larger datasets to achieve similar results.
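Why can a DPO policy supply token-level rewards? DPO's implicit reward for a response y to prompt x is β log π_dpo(y|x)/π_ref(y|x), up to a prompt-dependent constant, and because a sequence log-probability is a sum of per-token log-probabilities, that reward splits into one term per token. The sketch below assumes an RTO-style reward of this per-token DPO term minus a KL penalty that keeps the PPO policy near the reference. The function name, coefficient names, default values and the optional sentence-level bonus on the last token are all illustrative assumptions, not a specification of the method.

```python
import math
from typing import List, Optional


def dpo_token_rewards(
    logp_dpo: List[float],
    logp_ref: List[float],
    logp_policy: List[float],
    beta_dpo: float = 0.1,
    beta_kl: float = 0.05,
    sentence_reward: Optional[float] = None,
    beta_sentence: float = 1.0,
) -> List[float]:
    """Hypothetical RTO-style token rewards from a DPO-trained policy.

    Inputs are log-probabilities of the sampled tokens under the DPO policy,
    the reference (SFT) policy and the current PPO policy. Token h receives
        beta_dpo * (log pi_dpo - log pi_ref)    # DPO-derived token quality
      - beta_kl  * (log pi     - log pi_ref)    # keeps the PPO policy near pi_ref
    and, if given, beta_sentence * sentence_reward is added to the last token
    (this optional bonus is an assumption of the sketch).
    """
    n = len(logp_dpo)
    if n == 0 or len(logp_ref) != n or len(logp_policy) != n:
        raise ValueError("need matching, non-empty per-token log-probabilities")
    rewards = [
        beta_dpo * (ld - lr) - beta_kl * (lp - lr)
        for ld, lr, lp in zip(logp_dpo, logp_ref, logp_policy)
    ]
    if sentence_reward is not None:
        rewards[-1] += beta_sentence * sentence_reward
    return rewards


if __name__ == "__main__":
    logp_dpo = [-0.5, -1.0, -0.25]
    logp_ref = [-1.0, -0.5, -0.75]
    # Policy == reference, so the KL term is zero and only the DPO term remains.
    token_rewards = dpo_token_rewards(logp_dpo, logp_ref, logp_ref, beta_dpo=0.5)
    print(token_rewards)  # [0.25, -0.25, 0.25]
    # The token terms sum to DPO's sentence-level implicit reward
    # beta * (log pi_dpo(y|x) - log pi_ref(y|x)).
    implicit = 0.5 * (sum(logp_dpo) - sum(logp_ref))
    assert math.isclose(sum(token_rewards), implicit)
```

The example shows the key property: the per-token rewards add up to DPO's sentence-level implicit reward, yet the second token is penalized while the first and third are rewarded, which is exactly the token-wise distinction a single sentence-level score cannot express.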

Practical Implications & Theoretical Insights

RTO carries several practical and theoretical implications for the future of AI training:

- Efficiency in Open-Source Applications: Because RTO works effectively even with limited resources, it is particularly well suited to open-source projects, which often have fewer resources than closed-source efforts.

- Enhanced Alignment with Human Preferences: By capturing finer-grained human feedback at the token level, RTO aims to produce models that are better aligned with human intentions, potentially improving the usability and safety of AI systems.

- Future Research Directions: The innovative integration of token-wise rewards and the demonstrated efficiency of RTO opens up new avenues for research, especially in exploring other aspects of LLM training that could benefit from similar approaches.

Conclusion

Reinforced Token Optimization (RTO) represents a significant step forward in training LLMs with human feedback. By addressing the limitations of sentence-level approaches and exploiting the finer granularity of token-wise rewards, RTO aims both to make training more stable and efficient and to produce models that are better aligned with human values and preferences. As we continue to explore and refine such methods, the future of AI and machine learning looks both exciting and promising, with models that can better understand and interact with their human users.