Evaluating Distributional Assumptions in Benchmark Evaluations of LLMs

Introduction

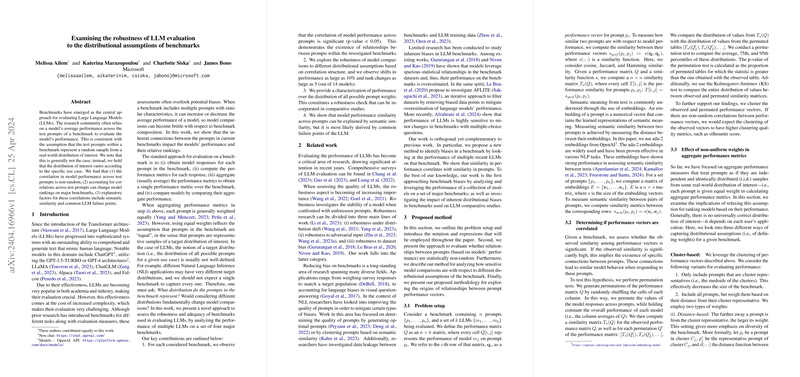

The accuracy and effectiveness of LLMs are typically evaluated on benchmark datasets. Previous approaches have generally treated benchmark prompts as independent samples drawn from the same distribution. However, new research suggests that model performance is correlated across prompts within these benchmarks, and that these correlations influence overall model evaluations and rankings. The investigation shows that distributional assumptions about benchmark composition can fundamentally affect how LLMs are appraised.

Key Contributions and Findings

The paper makes several significant observations:

- Performance Correlation: There is a notable non-random correlation in model performance across benchmark test prompts. Such a correlation suggests hidden relationships among prompts that influence model performance predictably across similar prompt types.

- Impact on Model Rankings: Weighting test prompts differently according to their distribution leads to notable changes in model rankings. Performance metrics shifted by up to 10%, and model rankings moved by up to 5 places.

- Distributional Assumptions: Assuming that every prompt deserves equal weight is misleading because it neglects the inherent biases and relationships among the prompts. The paper grouped prompts by similarity and recomputed model rankings based on these clusters.

Methodological Approach

Correlation Analysis

The paper used permutation tests to assess whether the correlations observed in model responses across prompts could have arisen by chance. By reshuffling responses and comparing the resulting aggregate metrics against the observed values, the researchers confirmed the presence of statistically significant, non-random performance similarities.
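The idea of such a permutation test can be sketched as follows. This is a minimal illustration, not the paper's procedure: the summary does not specify the test statistic or the shuffling scheme, so the statistic here (mean pairwise agreement between prompts) and the null model (shuffling each prompt's outcomes independently across models) are assumptions, as are the function names and the toy data.

```python
import numpy as np

def mean_pairwise_agreement(responses):
    """Average fraction of models on which two prompts share the same outcome.

    responses: binary array of shape (n_models, n_prompts), 1 = correct.
    """
    r = np.asarray(responses, dtype=float)
    n_models, n_prompts = r.shape
    # Entry (i, j) counts models that got prompts i and j both right or both wrong.
    agree = (r.T @ r + (1.0 - r).T @ (1.0 - r)) / n_models
    upper = np.triu_indices(n_prompts, k=1)
    return float(agree[upper].mean())

def permutation_test(responses, n_permutations=1000, seed=0):
    """One-sided permutation test (assumed null: prompts are unrelated)."""
    rng = np.random.default_rng(seed)
    observed = mean_pairwise_agreement(responses)
    null_stats = np.empty(n_permutations)
    for i in range(n_permutations):
        # Shuffle each prompt's column independently across models. This keeps
        # every prompt's pass rate but breaks any link between prompts.
        shuffled = rng.permuted(responses, axis=0)
        null_stats[i] = mean_pairwise_agreement(shuffled)
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (1 + np.sum(null_stats >= observed)) / (1 + n_permutations)
    return observed, float(p_value)

# Hypothetical toy data: 20 models, 6 prompts forming two groups of identical prompts.
col_a = np.tile([0, 1], 10)
col_b = np.repeat([1, 0], 10)
toy = np.column_stack([col_a, col_a, col_a, col_b, col_b, col_b])

observed, p_value = permutation_test(toy)
print(f"observed agreement = {observed:.3f}, p = {p_value:.4f}")
```

Note that this null model also removes differences in overall model skill, which on their own can make prompts look correlated. A stricter null would preserve each model's overall accuracy as well.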

Weighted Performance Metrics

To account for prompt distribution, the paper analyzed two approaches: cluster-based representative sampling and distance-weighted performance evaluation. Each method affected model rankings differently, confirming that weighting every prompt equally can skew benchmark outcomes.
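A small sketch shows how a change in prompt weights alone can reorder models. It illustrates only a simple cluster-balanced weighting, in which every cluster receives the same total weight. The paper's exact sampling and distance-weighting schemes are not described in this summary, so the functions, cluster labels, and response matrix below are hypothetical.

```python
import numpy as np

def weighted_accuracy(responses, weights):
    """Per-model accuracy under the given prompt weights (normalized to sum to 1)."""
    w = np.asarray(weights, dtype=float)
    return np.asarray(responses) @ (w / w.sum())

def cluster_balanced_weights(labels):
    """Give each cluster equal total weight, split evenly among its prompts."""
    labels = np.asarray(labels)
    _, inverse, counts = np.unique(labels, return_inverse=True, return_counts=True)
    return 1.0 / (len(counts) * counts[inverse])

# Hypothetical data: rows are models, columns are prompts (1 = correct).
responses = np.array([
    [1, 1, 1, 1, 0],  # model 0: strong on the large cluster only
    [1, 1, 0, 0, 1],  # model 1: weaker there, but solves the rare prompt
])
labels = [0, 0, 0, 0, 1]  # four near-duplicate prompts plus one distinct prompt

uniform_scores = weighted_accuracy(responses, np.ones(responses.shape[1]))
balanced_scores = weighted_accuracy(responses, cluster_balanced_weights(labels))

print("uniform :", uniform_scores, "ranking:", np.argsort(-uniform_scores))
print("balanced:", balanced_scores, "ranking:", np.argsort(-balanced_scores))
```

Under uniform weights model 0 ranks first, while under cluster-balanced weights model 1 does, because the four near-duplicate prompts no longer dominate the score.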

Semantic Analysis

To understand the sources of prompt correlation, the paper compared the performance vectors of prompts with their semantic embeddings. In several cases the two were correlated, which the paper attributed either to semantic similarity between prompts or to shared failure points in how models process particular prompt types.
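One way to make this comparison concrete is to compute two prompt-by-prompt similarity matrices, one from performance vectors and one from embeddings, and correlate their off-diagonal entries. The sketch below assumes agreement rate as the performance similarity and cosine similarity for embeddings; the summary does not state which measures or which embedding model the paper used, and the toy arrays are hypothetical.

```python
import numpy as np

def pairwise_agreement(responses):
    """Prompt-by-prompt fraction of models with the same outcome on both prompts."""
    r = np.asarray(responses, dtype=float)
    return (r.T @ r + (1.0 - r).T @ (1.0 - r)) / r.shape[0]

def cosine_similarity_matrix(embeddings):
    """Prompt-by-prompt cosine similarity of embedding vectors (one row per prompt)."""
    e = np.asarray(embeddings, dtype=float)
    unit = e / np.linalg.norm(e, axis=1, keepdims=True)
    return unit @ unit.T

def similarity_correlation(responses, embeddings):
    """Pearson correlation between performance and semantic similarity over prompt pairs."""
    perf = pairwise_agreement(responses)
    sem = cosine_similarity_matrix(embeddings)
    upper = np.triu_indices(perf.shape[0], k=1)  # each unordered pair once
    return float(np.corrcoef(perf[upper], sem[upper])[0, 1])

# Hypothetical data: 6 models, 4 prompts. Prompts 0-1 and prompts 2-3 are
# semantically close and also elicit identical outcomes across models.
responses = np.array([
    [1, 1, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
])
embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])

r_value = similarity_correlation(responses, embeddings)
print(f"correlation between performance and semantic similarity: {r_value:.3f}")
```

A high value indicates that semantically close prompts tend to be solved or failed by the same models. A low value with significant performance correlation would point to other causes, such as shared failure modes.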

Implications and Future Directions

The implications of these findings are critical for both theoretical and practical aspects of AI research. They challenge the conventional methods of evaluating LLMs using benchmarks and suggest the necessity for more nuanced approaches that consider the relationships and distributional biases within prompt sets.

Theoretical Implications

The paper enriches our understanding of the interactions within benchmark datasets and their impact on model evaluation metrics. This prompts a theoretical shift towards considering benchmarks as complex systems with internal dependencies rather than independent prompt samples.

Practical Implications

For AI practitioners, the paper underscores the need for robust benchmarking strategies that account for inherent prompt correlations. It suggests adapting benchmark weighting schemes based on prompt distribution and interrelations to better reflect real-world model performance and utility.

Future Research

Future work should focus on developing methodologies to further dissect the sources of prompt correlation, extending beyond semantic similarity, perhaps to syntactic or contextual dimensions. There is also potential in exploring automated systems that dynamically adjust prompt weights in benchmarks based on observed performance correlations, offering a real-time calibration of benchmark difficulty and representativeness.

Conclusion

The research provides compelling evidence that standard evaluation benchmarks may not adequately reflect the true capabilities of LLMs because they do not account for prompt interdependencies. The paper calls for a reevaluation of how benchmarks are constructed and used, proposing a more granular and dynamic methodology for LLM evaluation.