Performance Evaluation of Low-Bit Quantization on Meta's LLaMA3 Models

Introduction

Meta's LLaMA3 models, released in April 2024, represent a significant advance in large language models (LLMs). They come in configurations of up to 70 billion parameters and were pre-trained on over 15 trillion tokens. Despite their strong performance across benchmarks, deploying LLaMA3 in practice is often constrained by memory and compute, which makes low-bit quantization an attractive way to compress them. This paper evaluates how well a range of low-bit quantization techniques, applied both post-training and during fine-tuning, preserve LLaMA3's performance under these resource constraints.
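To make the resource argument concrete, the sketch below computes the storage needed for model weights alone at several bit-widths. It is a back-of-the-envelope calculation that assumes nominal sizes of 8B and 70B parameters (the two sizes in the April 2024 release) and ignores quantization metadata such as scales, activations, and the KV cache. The function name is hypothetical.

```python
def weight_storage_gb(num_params: float, bits: int) -> float:
    """Storage for `num_params` weights at `bits` bits each, in GB (1e9 bytes).

    Counts weights only: quantization scales/zero-points, activations,
    and the KV cache are ignored.
    """
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    # Nominal sizes, not exact parameter counts.
    for name, params in [("8B", 8e9), ("70B", 70e9)]:
        for bits in (16, 8, 4, 2, 1):
            print(f"LLaMA3-{name} @ {bits:>2}-bit: {weight_storage_gb(params, bits):7.1f} GB")
```

At 16 bits the 70B model's weights alone occupy about 140 GB, versus about 35 GB at 4 bits, which is why aggressive low-bit settings are attractive despite their accuracy risks.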

Quantization Techniques Evaluated

The paper categorizes the quantization methods into two main tracks:

- Post-Training Quantization (PTQ)

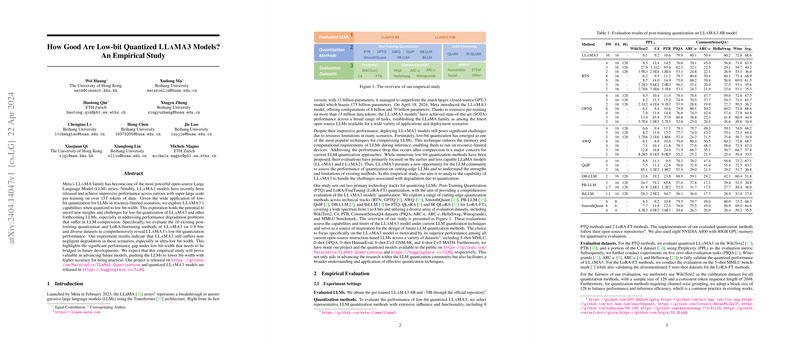

- Techniques such as RTN, GPTQ, AWQ, and SmoothQuant were tested across a bit-width spectrum from 1 to 8 bits.

- Notable methods like PB-LLM and DB-LLM employ strategies for effective compression at ultra-low bit-widths, revealing promising capabilities in maintaining performance integrity.

- LoRA-Finetuning (LoRA-FT) Quantization

- Focused on the newer methods such as QLoRA and IR-QLoRA, the paper explores adaptations of the model parameters during fine-tuning to achieve better quantization outcomes.

- These methods were primarily evaluated on the MMLU benchmark and additional CommonSenseQA tasks to assess their capacity to handle lower-bit operational demands.
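RTN, the simplest PTQ baseline listed above, maps each weight to the nearest point on a uniform grid. The sketch below is a minimal illustration, not the implementation of any evaluated method: it applies per-group min-max RTN to synthetic Gaussian weights and compares the result with a simplified partial binarization in the spirit of PB-LLM. The group size of 128, the 10% salient fraction, magnitude-based salient selection, and keeping salient weights at full precision are assumptions chosen for illustration; PB-LLM's actual selection and storage scheme differ. The function names are hypothetical.

```python
import random


def rtn_quantize(weights, bits, group_size=128):
    """Round-to-nearest (RTN) min-max quantization, applied per group.

    Each group gets its own scale and a floating-point offset (the group
    minimum). Returns the dequantized values.
    """
    levels = 2 ** bits - 1
    out = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels if hi > lo else 1.0
        for w in group:
            q = round((w - lo) / scale)   # nearest integer level
            q = max(0, min(levels, q))    # clamp to [0, 2^bits - 1]
            out.append(lo + q * scale)    # dequantize
    return out


def partial_binarize(weights, salient_frac=0.1):
    """Simplified partial binarization (hypothetical helper): keep the
    largest-magnitude weights unchanged, replace the rest with alpha * sign(w)."""
    k = int(len(weights) * salient_frac)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    salient = set(order[:k])
    rest = [abs(weights[i]) for i in range(len(weights)) if i not in salient]
    alpha = sum(rest) / len(rest)  # mean |w| minimizes squared error for alpha * sign(w)
    return [w if i in salient else (alpha if w >= 0 else -alpha)
            for i, w in enumerate(weights)]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
weights = [rng.gauss(0.0, 1.0) for _ in range(4096)]

rtn_error = {b: mse(weights, rtn_quantize(weights, b)) for b in (8, 4, 3, 2, 1)}
pb_error = mse(weights, partial_binarize(weights))

for b, err in rtn_error.items():
    print(f"RTN {b}-bit MSE: {err:.5f}")
print(f"Partial binarization (10% salient kept) MSE: {pb_error:.5f}")
```

The reconstruction error grows as the bit-width falls, with a sharp jump at 1 bit. Keeping a small set of salient weights out of binarization costs extra storage (here 10% of weights stay at full precision) but avoids the worst of that collapse.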

Experimental Results

- PTQ Evaluation: A comprehensive assessment across multiple benchmarks showed that while methods such as GPTQ and AWQ can maintain reasonable performance down to 3-bit quantization, nearly all techniques degrade severely at ultra-low bit-widths (1-2 bits). Specialized methods such as PB-LLM, which use mixed-precision strategies, partially mitigated these drops.

- LoRA-FT Evaluation: The findings indicate that LoRA-FT methods did not substantially improve the performance of quantized LLaMA3 models relative to their counterparts quantized without LoRA fine-tuning. In some cases they performed worse, underscoring the difficulty of applying low-rank adjustments to highly optimized models.
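The LoRA-FT track rests on one structural idea: the base weights are stored in low-bit form and frozen, and only a small low-rank adapter is trained on top of them. The sketch below illustrates that structure for a single linear layer. It is a toy under stated assumptions, not QLoRA or IR-QLoRA: uniform symmetric 4-bit RTN stands in for QLoRA's NF4 data type, IR-QLoRA's additional mechanisms are not modeled, the training loop is omitted, and the class and helper names are hypothetical. Following the usual LoRA convention, B starts at zero and the adapter output is scaled by lora_alpha / rank.

```python
import random


def quantize_rows(W, bits=4):
    """Symmetric per-row RTN: integer codes plus one scale per row."""
    qmax = 2 ** (bits - 1) - 1
    codes, scales = [], []
    for row in W:
        amax = max(abs(v) for v in row)
        s = amax / qmax if amax > 0 else 1.0
        codes.append([max(-qmax - 1, min(qmax, round(v / s))) for v in row])
        scales.append(s)
    return codes, scales


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class QuantizedLoRALinear:
    """Hypothetical layer: frozen low-bit base weight plus trainable B @ A."""

    def __init__(self, W, rank=2, lora_alpha=4, bits=4, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.codes, self.scales = quantize_rows(W, bits)  # frozen base
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]     # zero init
        self.scaling = lora_alpha / rank

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def forward(self, x):
        # Dequantize the frozen base, then add the scaled low-rank update.
        W_deq = [[c * s for c in row] for row, s in zip(self.codes, self.scales)]
        base = matvec(W_deq, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * d for b, d in zip(base, delta)]


rng = random.Random(1)
d_out, d_in = 16, 32
W = [[rng.gauss(0.0, 1.0) for _ in range(d_in)] for _ in range(d_out)]
layer = QuantizedLoRALinear(W, rank=2)
x = [rng.gauss(0.0, 1.0) for _ in range(d_in)]
y = layer.forward(x)
print(f"trainable adapter params: {layer.trainable_params()}, frozen base params: {d_in * d_out}")
```

Because B starts at zero, the layer initially reproduces the quantized base exactly. Fine-tuning can then add only a rank-limited correction, here 96 trainable values against 512 frozen ones, which offers one intuition for why low-rank adjustments may struggle to undo quantization error spread across the whole weight matrix.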

Implications and Future Directions

The observed performance degradation in low-bit settings highlights a critical challenge for deploying LLaMA3 in resource-limited environments and motivates further research into more robust quantization techniques that can close the performance gap identified in this paper. Future advancements might focus on:

- Improving PTQ methods so that they support lower bit-widths without substantial accuracy loss.

- Developing LoRA-FT approaches that exploit the intrinsic capacity of LLaMA3 models more effectively, perhaps through more sophisticated parameter optimization or adjustment techniques.

Ultimately, more effective quantization methods would allow LLMs like LLaMA3 to be deployed more widely, extending their utility to applications where computational resources are limited.