Holistic Evaluation of Large Vision-Language Models: Introducing VALOR-Eval and VALOR-Bench for Assessing Hallucination, Coverage, and Faithfulness

Introduction to the Paper's Contributions

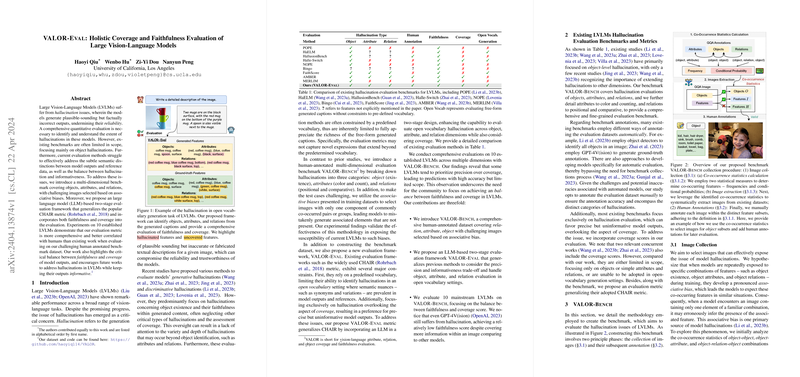

The paper presents an evaluation framework, VALOR-Eval, and a benchmark, VALOR-Bench, aimed at the prevalent problem of hallucination in Large Vision-Language Models (LVLMs). A hallucination here is an output in which the model describes objects, attributes, or relationships that are not actually present in the image. The paper makes two main contributions:

- VALOR-Bench: A new benchmark dataset composed of human-annotated images. The images are selected to exploit associative biases, so that models are challenged to describe objects, attributes, and relationships accurately rather than falling back on what typically co-occurs.

- VALOR-Eval: An evaluation framework that uses a two-stage, LLM-based approach to assess hallucinations in an open-vocabulary setting, measuring both the faithfulness and the coverage of model outputs.
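The two metrics can be made concrete with a small sketch. It assumes the first stage has already extracted a list of items from the model's description. That stage is not shown; in VALOR-Eval it is performed by an LLM. The second stage, deciding whether a predicted item and an annotated item refer to the same thing, is replaced here by a hypothetical `exact_match` function. In this sketch, faithfulness is the share of predicted items grounded in the annotation, and coverage is the share of annotated items that the output mentions. The names `score`, `Scores`, and `exact_match` are illustrative and do not come from the paper. Scoring an empty output as perfectly faithful is also an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical matcher type: in VALOR-Eval this judgment is made by an LLM;
# here it is any function that decides whether two phrases refer to the same thing.
Matcher = Callable[[str, str], bool]


def exact_match(pred: str, gold: str) -> bool:
    """Toy stand-in for LLM-based matching: case-insensitive string equality."""
    return pred.strip().lower() == gold.strip().lower()


@dataclass
class Scores:
    faithfulness: float  # share of predicted items grounded in the annotation
    coverage: float      # share of annotated items mentioned by the output


def score(predicted: Iterable[str], annotated: Iterable[str],
          match: Matcher = exact_match) -> Scores:
    preds = list(dict.fromkeys(predicted))  # deduplicate while keeping order
    golds = list(dict.fromkeys(annotated))
    # A predicted item is faithful if it matches at least one annotated item.
    faithful = sum(any(match(p, g) for g in golds) for p in preds)
    # An annotated item is covered if at least one predicted item matches it.
    covered = sum(any(match(p, g) for p in preds) for g in golds)
    return Scores(
        # Assumption: an empty output makes no false claims, so it scores 1.0.
        faithfulness=faithful / len(preds) if preds else 1.0,
        coverage=covered / len(golds) if golds else 1.0,
    )


annotated = ["dog", "frisbee", "grass", "tree"]
conservative = score(["dog"], annotated)
verbose = score(["dog", "frisbee", "grass", "bench"], annotated)
print(conservative)  # Scores(faithfulness=1.0, coverage=0.25)
print(verbose)       # Scores(faithfulness=0.75, coverage=0.75)
```

The toy captions show why the two metrics are reported together. The terse output never hallucinates but misses most of the scene. The longer output covers more of the scene but names an object ("bench") that is not annotated.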

Key Findings from the Evaluation

The authors applied VALOR-Eval to 10 LVLMs. The evaluation revealed several consistent patterns in current models' behavior:

- Across multiple models, the paper finds a consistent trade-off between precision (faithfulness) and output scope (coverage). Some models were highly accurate but covered little of the image, which suggests they are biased toward conservative outputs as a way to avoid errors.

- Despite advancements in model capabilities, the presence of hallucinations remains a critical issue. This problem underscores the need for more refined approaches in training and evaluating LVLMs.

Comparative Analysis with Existing Frameworks

The paper analyzes previous hallucination evaluation methods in detail. It points out that existing approaches either focus narrowly on specific types of hallucination or omit crucial metrics such as coverage. VALOR-Eval addresses both gaps. It covers objects, attributes, and relationships, and it scales to open-vocabulary outputs. Conventional methods check model output against a fixed vocabulary list, so any term outside that list goes unchecked. VALOR-Eval instead uses an LLM to identify and match content, which lets it judge terms the list would never contain.
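The limitation of a fixed vocabulary is easiest to see side by side. The following is a minimal sketch, not the method of any specific published metric. `FIXED_VOCAB` is a hypothetical word-to-class table. `toy_same_thing` is a hypothetical synonym check standing in for the LLM judgment that an open-vocabulary evaluator would make.

```python
# Hypothetical fixed vocabulary: surface words mapped to canonical object classes.
FIXED_VOCAB = {"dog": "dog", "puppy": "dog", "cat": "cat", "frisbee": "frisbee"}

# Hypothetical synonym pairs standing in for an LLM's same-object judgment.
SYNONYMS = {frozenset({"puppy", "dog"})}


def toy_same_thing(a: str, b: str) -> bool:
    return a == b or frozenset({a, b}) in SYNONYMS


def fixed_vocab_hallucinations(mentions, annotated_classes):
    """Flag hallucinations only among words the fixed vocabulary knows about."""
    hallucinated = []
    for word in mentions:
        cls = FIXED_VOCAB.get(word)
        if cls is None:
            continue  # out-of-vocabulary words are silently skipped
        if cls not in annotated_classes:
            hallucinated.append(word)
    return hallucinated


def open_vocab_hallucinations(mentions, annotated, same_thing):
    """Check every mention against the annotations with a pluggable judge."""
    return [m for m in mentions if not any(same_thing(m, a) for a in annotated)]


mentions = ["puppy", "frisbee", "skateboard"]
print(fixed_vocab_hallucinations(mentions, {"dog", "frisbee"}))  # []
print(open_vocab_hallucinations(mentions, ["dog", "frisbee"], toy_same_thing))  # ['skateboard']
```

Both checkers accept "puppy" as a match for the annotated "dog". Only the open-vocabulary checker catches the hallucinated "skateboard", because that word never appears in the fixed list.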

Implications and Future Directions

This research has clear implications for developing and refining LVLMs. VALOR-Bench gives future studies a tool for evaluating models under challenging conditions designed to mimic real-world complexity.

The observed trade-off between precision and coverage also invites further work on model architectures and training processes that balance the two more effectively. Another direction is to integrate these evaluation techniques into the LVLM training loop, so that hallucination is addressed during model development rather than only measured afterward.

Concluding Thoughts

The VALOR-Eval framework and VALOR-Bench dataset raise the bar for evaluating vision-language models. They emphasize that controlling hallucination must be balanced against keeping outputs informative. The paper clarifies the limitations of current LVLMs and points toward ways to improve their accuracy and reliability. As LVLMs spread across technological and creative sectors, improving their ability to interpret and describe visual content accurately remains a central goal.