Token-level Direct Preference Optimization: Enhancing LLM Alignment with Human Preferences

Introduction

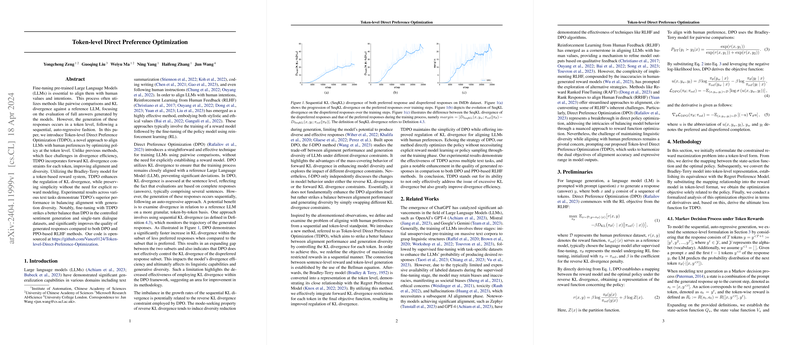

LLMs have become central to contemporary AI research because of their ability to generalize across a wide range of text tasks. Existing alignment methods such as Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO) have made substantial progress in steering these models toward responses that humans prefer. However, these approaches typically measure divergence from the reference model at the sentence (whole-response) level, which gives little direct control over generation diversity at the level of individual tokens. The paper discussed here introduces Token-level Direct Preference Optimization (TDPO), which optimizes the policy token by token to improve alignment and manage generative diversity more effectively.
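For reference, the standard KL-regularized RLHF objective and the DPO loss derived from it are both written over complete responses y. Here β sets the strength of the KL penalty, π_ref is the reference model (typically the supervised fine-tuned model), and y_w, y_l are the preferred and dispreferred responses to prompt x:

```latex
\max_{\pi_\theta}\ \mathbb{E}_{x\sim\mathcal{D},\, y\sim\pi_\theta(\cdot\mid x)}\big[r(x,y)\big]
  - \beta\, D_{\mathrm{KL}}\big(\pi_\theta(\cdot\mid x)\,\|\,\pi_{\mathrm{ref}}(\cdot\mid x)\big)

\mathcal{L}_{\mathrm{DPO}} = -\mathbb{E}_{(x,y_w,y_l)\sim\mathcal{D}}\left[\log\sigma\left(
  \beta\log\frac{\pi_\theta(y_w\mid x)}{\pi_{\mathrm{ref}}(y_w\mid x)}
  - \beta\log\frac{\pi_\theta(y_l\mid x)}{\pi_{\mathrm{ref}}(y_l\mid x)}\right)\right],
\qquad
\log\pi(y\mid x) = \sum_{t=1}^{|y|}\log\pi\big(y^{t}\mid x, y^{<t}\big)
```

The log-ratios in the DPO loss depend only on the probabilities of the tokens that actually appear in y_w and y_l, so, as we read the motivation, the loss does not directly constrain the rest of the next-token distribution at each position. That is the gap a token-level KL constraint is meant to address.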

Advances in Direct Preference Optimization

TDPO departs from standard DPO by moving the unit of optimization from the whole response to the individual token. It adds a forward KL divergence constraint at each token position, which gives finer control over the model's output, keeps it more closely aligned with human preferences, and helps preserve generative diversity. The method builds on the Bradley-Terry preference model with a token-level reward formulation and, like DPO, requires neither an explicitly trained reward model nor sampling from the policy during training.
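The per-pair loss can be sketched in plain Python. This follows our reading of the TDPO paper's formulation rather than the authors' code: the loss is -log σ(u - δ), where u is the DPO margin β(log-ratio of y_w minus log-ratio of y_l) and δ = β(D_SeqKL(y_l) - D_SeqKL(y_w)), with D_SeqKL the sum over positions of the forward KL D_KL(π_ref || π_θ). The "TDPO2" variant is assumed to scale δ by a weight α and stop gradients through the chosen-response KL. All function names, the toy distributions, and the defaults β = 0.1 and α = 0.5 are hypothetical choices for illustration.

```python
import math
from typing import List, Sequence

# Hypothetical helper names; an illustrative sketch, not the authors' implementation.
Dist = Sequence[float]  # a next-token distribution over a toy vocabulary


def kl(p: Dist, q: Dist) -> float:
    """Forward KL D_KL(p || q); terms with p == 0 vanish, q must be > 0 where p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def sequential_kl(ref_dists: List[Dist], policy_dists: List[Dist]) -> float:
    """D_SeqKL: sum over positions t of D_KL(pi_ref(.|x, y<t) || pi_theta(.|x, y<t))."""
    return sum(kl(r, p) for r, p in zip(ref_dists, policy_dists))


def sequence_logratio(ref_dists: List[Dist], policy_dists: List[Dist],
                      tokens: List[int]) -> float:
    """log pi_theta(y|x) - log pi_ref(y|x), summed over the tokens actually in y."""
    return sum(math.log(p[tok]) - math.log(r[tok])
               for r, p, tok in zip(ref_dists, policy_dists, tokens))


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def tdpo_loss(chosen_logratio: float, rejected_logratio: float,
              chosen_seq_kl: float, rejected_seq_kl: float,
              beta: float = 0.1, alpha: float = 0.5, tdpo2: bool = True) -> float:
    """Per-pair TDPO loss -log sigmoid(u - delta) under the assumptions stated above."""
    # u: DPO implicit-reward margin between the chosen (y_w) and rejected (y_l) responses.
    u = beta * (chosen_logratio - rejected_logratio)
    # delta: token-level KL correction (rejected KL minus chosen KL).
    kl_gap = rejected_seq_kl - chosen_seq_kl
    if tdpo2:
        # TDPO2 weights the gap by alpha. In an autodiff framework the chosen KL
        # would also be detached; plain floats carry no gradients, so only the
        # alpha scaling is visible here.
        delta = alpha * beta * kl_gap
    else:
        delta = beta * kl_gap
    return -log_sigmoid(u - delta)


# Toy usage: 3-token vocabulary, two-token responses. For simplicity the reference
# model is assumed to give the same distributions along both responses.
ref = [[0.5, 0.3, 0.2], [0.4, 0.4, 0.2]]
pol_w = [[0.6, 0.25, 0.15], [0.5, 0.3, 0.2]]   # policy along y_w
pol_l = [[0.55, 0.3, 0.15], [0.45, 0.4, 0.15]]  # policy along y_l
y_w, y_l = [0, 0], [2, 2]

loss = tdpo_loss(sequence_logratio(ref, pol_w, y_w), sequence_logratio(ref, pol_l, y_l),
                 sequential_kl(ref, pol_w), sequential_kl(ref, pol_l))
print(f"TDPO2 loss: {loss:.4f}")
```

When the two responses have equal sequential KL, δ is zero and the loss reduces to the ordinary DPO loss; setting α = 1 makes the TDPO2 value equal to TDPO1 (the two differ only in their gradients).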

Experimental Framework and Results

The authors ran extensive experiments to evaluate TDPO on several datasets, including IMDb for controlled sentiment generation and Anthropic HH for single-turn dialogue. In their results, TDPO produced higher-quality responses than both DPO and PPO-based RLHF. It also kept KL divergence better balanced and under tighter control, demonstrating superior divergence efficiency.

Key insights include:

- Alignment and Diversity: TDPO significantly improves the balance between model alignment with human preferences and generative diversity compared to existing methods.

- KL Divergence Control: Because it constrains divergence at each token rather than only over whole responses, TDPO controls KL divergence with finer granularity, which the authors associate with more stable and consistent performance across the textual tasks they evaluated.
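A toy example helps show why a per-token constraint is finer-grained than a response-level log-ratio. At a single position, if the policy keeps the sampled token's probability unchanged but shifts mass between the other tokens, the sampled-token log-ratio is exactly zero while the forward KL over the full next-token distribution is positive. This is a minimal sketch with hypothetical names and made-up distributions, meant only to illustrate the intuition, not to reproduce the paper's analysis.

```python
import math
from typing import Sequence


def forward_kl(p_ref: Sequence[float], p_policy: Sequence[float]) -> float:
    """D_KL(p_ref || p_policy) at one position (p_policy must be > 0 where p_ref is)."""
    return sum(p * math.log(p / q) for p, q in zip(p_ref, p_policy) if p > 0.0)


def sampled_logratio(p_ref: Sequence[float], p_policy: Sequence[float], token: int) -> float:
    """log pi_theta(token) - log pi_ref(token): all a response-level log-ratio sees here."""
    return math.log(p_policy[token]) - math.log(p_ref[token])


# Hypothetical next-token distributions over a 3-token vocabulary at one position.
p_ref = [0.5, 0.3, 0.2]
p_policy = [0.5, 0.1, 0.4]  # token 0 unchanged; mass moved from token 1 to token 2
sampled_token = 0

print(sampled_logratio(p_ref, p_policy, sampled_token))  # 0.0: no signal from the sampled token
print(forward_kl(p_ref, p_policy))  # positive: the per-position KL registers the shift
```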

Implications and Future Directions

TDPO is a notable development in the training of LLMs. It opens new avenues for research into fine-tuning LLMs at a more granular level. Future work may explore token-level optimization for other goals in language modeling, such as reducing toxicity or bias in generated text. Further research could also extend the approach to other generative domains, such as audio or video models, where fine-grained control over the generation process is crucial.

Conclusion

Token-level Direct Preference Optimization is a significant refinement of the sentence-level optimization techniques used to align LLMs. By improving divergence efficiency and keeping a better balance between alignment and diversity, TDPO offers a strong reference point for developing human-aligned AI systems. Its ability to fine-tune generative behavior at the token level is likely to influence future LLM research and applications.