Overview of "AesExpert: Towards Multi-Modality Foundation Model for Image Aesthetics Perception"

The paper presents "AesExpert," a novel approach for enhancing the image aesthetics perception capabilities of multimodal large language models (MLLMs). Noting the scarcity of human-annotated multimodal aesthetic data, the authors introduce a new dataset, Aesthetic Multi-Modality Instruction Tuning (AesMMIT), designed to narrow the gap between MLLMs and human aesthetic judgment.

Key Contributions

The authors make several notable contributions:

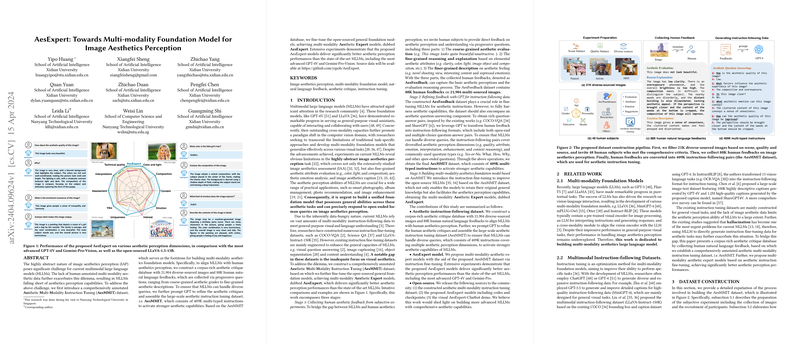

- Aesthetic Instruction-Following Dataset: AesMMIT is built on a corpus of aesthetic critiques collected through subjective experiments. It comprises 409K multi-typed instructions derived from 21,904 diverse images and 88K pieces of human feedback, covering perception dimensions such as quality, attributes, emotion, and contextual reasoning.

- AesExpert Model: The authors fine-tune existing MLLMs on AesMMIT, yielding the AesExpert models. These models outperform contemporary MLLMs, including GPT-4V and Gemini-Pro-Vision, on aesthetics perception.

- Open-source Contribution: The dataset, code, and model checkpoints are publicly released, which could support further work on MLLMs with comprehensive aesthetic capabilities.
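To put the AesMMIT figures in perspective, a quick back-of-the-envelope calculation helps. Because the source reports rounded totals (409K instructions, 88K pieces of feedback), the per-image and per-feedback averages below are approximations, not numbers stated by the authors:

```latex
\frac{409{,}000\ \text{instructions}}{21{,}904\ \text{images}} \approx 18.7,
\qquad
\frac{88{,}000\ \text{feedback entries}}{21{,}904\ \text{images}} \approx 4.0,
\qquad
\frac{409{,}000\ \text{instructions}}{88{,}000\ \text{feedback entries}} \approx 4.6
```

In other words, each image received roughly four human critiques, and each critique was expanded into roughly four to five instructions on average.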

Methodology

The methodology involves three main stages:

- Collecting Human Feedback: 48 subjects provided detailed aesthetic feedback on the images, covering coarse- and fine-grained aesthetic evaluations as well as the feelings the images evoked.

- GPT-Assisted Refinement: The authors used GPT to convert the human feedback into diverse instruction-following formats, broadening the dataset.

- Model Fine-tuning: The researchers fine-tuned pre-existing MLLMs on the resulting dataset to obtain the AesExpert models, aiming to improve their aesthetic perception and interaction.
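The refinement step turns each human critique into several instruction-following samples that can then be used for fine-tuning. The sketch below illustrates that idea under clearly stated assumptions: the `Feedback` fields, the question `TEMPLATES`, and the `to_samples` helper are all hypothetical; fixed templates stand in for the GPT rewriting the authors describe; and the output uses the LLaVA-style `conversations` JSON layout only as an example of a common fine-tuning format, not as AesMMIT's confirmed schema.

```python
import json
from dataclasses import dataclass


@dataclass
class Feedback:
    """One human aesthetic critique of an image (hypothetical schema)."""
    image: str     # image file name
    overall: str   # coarse-grained judgement, e.g. "Good"
    critique: str  # fine-grained free-form comment
    emotion: str   # feeling the image evoked in the annotator


# Hypothetical question templates standing in for GPT-generated instructions.
# Each entry pairs a question with the Feedback field that answers it.
TEMPLATES = [
    ("How would you rate the overall aesthetic quality of this image?", "overall"),
    ("Describe the aesthetic strengths and weaknesses of this image.", "critique"),
    ("What emotion does this image evoke?", "emotion"),
]


def to_samples(fb: Feedback, idx: int) -> list[dict]:
    """Expand one feedback record into one LLaVA-style sample per template."""
    samples = []
    for k, (question, field) in enumerate(TEMPLATES):
        samples.append({
            "id": f"{idx}-{k}",
            "image": fb.image,
            "conversations": [
                # "<image>" marks where the image tokens go in the prompt.
                {"from": "human", "value": "<image>\n" + question},
                {"from": "gpt", "value": getattr(fb, field)},
            ],
        })
    return samples


if __name__ == "__main__":
    fb = Feedback(
        image="img_0001.jpg",
        overall="Good",
        critique="Balanced composition, but the highlights are slightly blown out.",
        emotion="Calm",
    )
    print(json.dumps(to_samples(fb, 0), indent=2))
```

Fixed templates keep the example deterministic; in the pipeline the paper describes, a GPT model would instead paraphrase and diversify both the questions and the answers.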

Results

Extensive evaluations on the AesBench benchmark show substantial improvements on aesthetic perception tasks, with notably stronger capabilities in aesthetic interpretation, perception, and empathy. The gains are especially pronounced when assessing AI-generated images, which highlights the dataset's efficacy.
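Results like these are usually reported per aesthetic dimension. Assuming, for illustration only, that benchmark questions are multiple-choice and tagged with a dimension (AesBench's exact scoring protocol is not described here), per-dimension accuracy could be computed with a hypothetical helper such as `accuracy_by_dimension`:

```python
from collections import defaultdict


def accuracy_by_dimension(records):
    """Return {dimension: accuracy} from (dimension, predicted, correct) triples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for dim, pred, gold in records:
        totals[dim] += 1
        # Normalize answers like " b." to "B" so formatting is not scored as an error.
        if pred.strip().rstrip(".").upper() == gold.strip().upper():
            hits[dim] += 1
    return {dim: hits[dim] / totals[dim] for dim in totals}


if __name__ == "__main__":
    demo = [
        ("perception", "A", "A"),
        ("perception", " b.", "B"),
        ("empathy", "C", "D"),
    ]
    print(accuracy_by_dimension(demo))  # {'perception': 1.0, 'empathy': 0.0}
```

Normalizing the predicted option before comparison matters for MLLMs, whose free-text answers often add spaces or punctuation around the chosen letter.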

Implications and Future Directions

The work suggests significant potential for MLLMs in roles that require nuanced aesthetic understanding, such as smart photography and media curation. Future work may expand the dataset to cover more diverse aesthetic contexts and further improve MLLMs' interpretive precision.

Overall, this research lays the groundwork for models that replicate human-like aesthetic judgments and could accelerate progress toward AI systems that engage more deeply with visual content.