TransformerFAM: Integrating Working Memory into Transformers Through Feedback Attention

Introduction to TransformerFAM

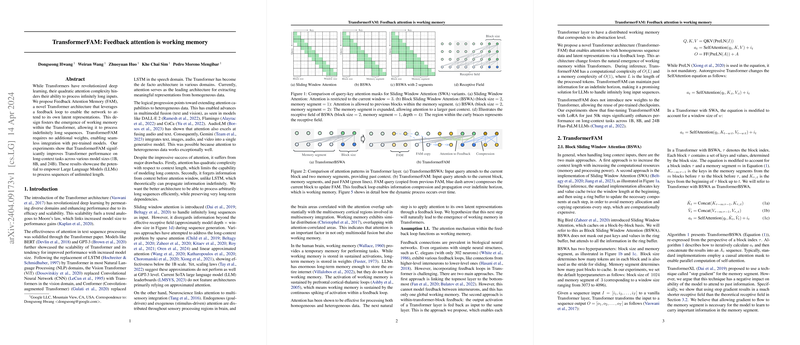

The paper introduces TransformerFAM, a novel architecture that extends the Transformer to process indefinitely long sequences by adding a feedback loop that acts as working memory. It targets one of the major limitations of existing Transformer models: attention cost grows quadratically with sequence length, which keeps them from handling very long inputs efficiently. Unlike conventional approaches that either increase computational resources or implement variations of sliding window attention, TransformerFAM lets the model attend to its own latent representations through a feedback loop, emulating the functionality of working memory in the human brain.
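To make the quadratic-versus-linear contrast concrete, here is a rough back-of-the-envelope sketch that counts how many query-key pairs get scored under each scheme. The layout is an assumption for illustration, not the paper's exact accounting: the function names, the default `block_size`, `memory_segments`, and `fam_len` values are all hypothetical, and the blockwise count is an upper bound because the first blocks have fewer previous blocks to look at.

```python
def full_attention_pairs(seq_len: int) -> int:
    """Query-key pairs scored by causal full attention: 1 + 2 + ... + seq_len."""
    return seq_len * (seq_len + 1) // 2


def blockwise_fam_pairs(seq_len: int, block_size: int = 1024,
                        memory_segments: int = 1, fam_len: int = 64) -> int:
    """Upper bound on query-key pairs when each block sees a bounded context.

    Assumed layout (hypothetical): every input query sees the causal prefix
    of its own block, `memory_segments` previous blocks, and `fam_len`
    memory vectors; every memory query sees the current block and the
    previous memory.
    """
    num_blocks = -(-seq_len // block_size)  # ceiling division
    # Input queries: causal pairs inside the block plus the bounded extra context.
    per_block_input = (block_size * (block_size + 1) // 2
                       + block_size * (memory_segments * block_size + fam_len))
    # Memory queries: current block plus previous memory.
    per_block_memory = fam_len * (block_size + fam_len)
    return num_blocks * (per_block_input + per_block_memory)


if __name__ == "__main__":
    for n in (4096, 8192, 16384):
        print(n, full_attention_pairs(n), blockwise_fam_pairs(n))
```

Under these assumptions, doubling the sequence length roughly quadruples the full-attention count but only doubles the blockwise count, because the work per block is fixed and only the number of blocks grows.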

Core Contributions

- Feedback Attention Memory (FAM): The introduction of FAM enables the Transformer to maintain and update a working memory of past information, allowing for the processing of indefinitely long sequences with linear computational complexity. This novel component does not introduce additional weights, facilitating its integration with pre-trained models.

- Compatibility with Existing Models: TransformerFAM's design lets it build on pre-existing Transformer models without retraining from scratch. This compatibility holds across models of various sizes, which indicates that the approach scales.

- Significant Performance Improvements: The experiments conducted show that TransformerFAM significantly outperforms standard Transformer models on long-context tasks, a result consistently observed across different model sizes.
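The feedback idea in the list above can be sketched as a toy, single-layer loop in plain Python. This is a simplified illustration under loud assumptions, not the paper's implementation: there are no learned projections (queries, keys, and values are the raw vectors), the memory starts at zeros, and `attend`, `run_with_feedback_memory`, `block_size`, and `fam_len` are hypothetical names. What it does show is the key property: the memory stays a fixed size while being rewritten after every block, and no extra weights are involved.

```python
import math


def attend(queries, keys, values, causal_offset=None):
    """Scaled dot-product attention over lists of float vectors.

    If causal_offset is an int, query i may only see keys[: causal_offset + i + 1].
    """
    if not queries:
        return []
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        limit = len(keys) if causal_offset is None else causal_offset + i + 1
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in keys[:limit]]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values[:limit])) / total
            for d in range(dim)
        ])
    return outputs


def run_with_feedback_memory(tokens, block_size, fam_len):
    """Process tokens block by block, carrying a fixed-size memory between blocks."""
    dim = len(tokens[0])
    fam = [[0.0] * dim for _ in range(fam_len)]  # assumption: zero-initialized memory
    outputs = []
    for start in range(0, len(tokens), block_size):
        block = tokens[start:start + block_size]
        context = fam + block
        # Input queries see the whole memory plus the causal prefix of their block.
        outputs.extend(attend(block, context, context, causal_offset=fam_len))
        # Memory queries see the previous memory and the current block: the feedback step.
        fam = attend(fam, context, context)
    return outputs, fam


if __name__ == "__main__":
    toy_tokens = [[float(i), 1.0] for i in range(10)]
    outs, memory = run_with_feedback_memory(toy_tokens, block_size=4, fam_len=2)
    print(len(outs), len(memory))
```

However long `toy_tokens` becomes, `memory` keeps `fam_len` vectors, so the per-block context never grows. A real model would apply this per layer with the pre-trained attention projections.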

Experiments and Results

The experimental results show that TransformerFAM improves performance on tasks requiring long-context processing. For instance, on the PassKey retrieval task, TransformerFAM handled filler contexts of up to 260k tokens, markedly exceeding models that use traditional sliding window attention. This held across model sizes from 1B to 24B, indicating scalability.
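For readers unfamiliar with PassKey retrieval, the task hides a short secret inside a long stretch of irrelevant filler and then asks the model to recall it. The snippet below is a minimal sketch of how such a prompt could be generated. The filler sentence, the prompt wording, and the `make_passkey_prompt` helper are illustrative assumptions, not the exact template used in the paper's experiments.

```python
import random


def make_passkey_prompt(num_filler_sentences: int, seed: int = 0):
    """Build a toy PassKey prompt and return (prompt, expected_answer)."""
    rng = random.Random(seed)  # seeded so the same prompt can be regenerated
    passkey = rng.randint(10000, 99999)
    filler = "The grass is green. The sky is blue. The sun is yellow."  # illustrative filler
    lines = [filler] * num_filler_sentences
    # Hide the pass key at a random position inside the filler.
    position = rng.randint(0, num_filler_sentences)
    lines.insert(position, f"The pass key is {passkey}. Remember it.")
    lines.append("What is the pass key?")
    return "\n".join(lines), str(passkey)


if __name__ == "__main__":
    prompt, answer = make_passkey_prompt(num_filler_sentences=5, seed=1)
    print(prompt)
    print("expected:", answer)
```

Raising `num_filler_sentences` stretches the distance between the pass key and the question, which is what makes the task a probe of long-context memory: a model with only a local attention window loses the key once it falls outside the window.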

Implications and Future Prospects

- Theoretical Implications: TransformerFAM presents a novel approach to integrating working memory into deep learning models, which could stimulate further research into models that more closely mimic human cognitive processes.

- Practical Applications: The ability to process indefinitely long sequences efficiently opens up applications in areas such as document summarization, extended conversation understanding, and any other setting where long-context understanding is crucial.

- Future Development: The architecture invites exploration into models that can handle increasingly heterogeneous data types, perhaps leading toward more integrative and versatile AI systems.

Conclusion

TransformerFAM represents a significant step toward overcoming the limitations imposed by the quadratic attention complexity of traditional Transformers. By introducing a mechanism that emulates working memory, it enhances the model's ability to process long sequences and aligns artificial neural network architectures more closely with the cognitive functions of the human brain. As such, TransformerFAM advances the field of deep learning and opens new pathways for research into AI systems capable of complex, contextually rich information processing.