Overview of GenCHiP: Generating Robot Policy Code for High-Precision and Contact-Rich Manipulation Tasks

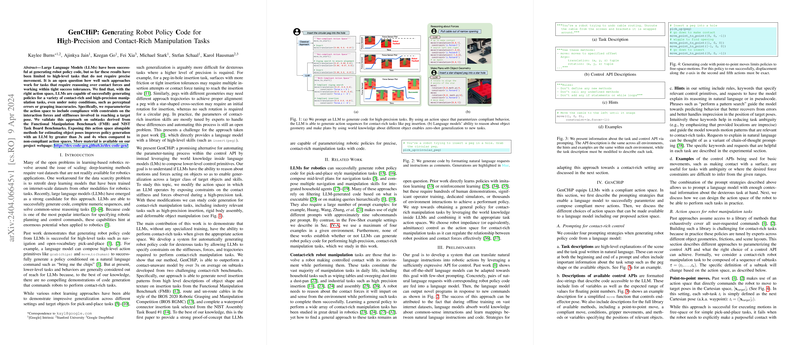

The paper "GenCHiP: Generating Robot Policy Code for High-Precision and Contact-Rich Manipulation Tasks" explores whether LLMs can generate robot policy code for contact-rich, high-precision manipulation tasks. Previous applications of LLMs in robotics have concentrated on high-level tasks that demand less precise movement. The paper reparameterizes the action space to include constraints on interaction forces and stiffness, which improves the models' ability to reason over movements that require finer control. The research is driven by the question of how well LLMs perform in tasks that demand reasoning about contact forces and reacting to noise and uncertainty, such as perceptual errors or grasp inaccuracies.

Research Focus and Methodology

The authors aim to enable LLMs to generate robot code that adapts to precise, contact-intensive tasks. They do so by introducing a compliant action space, which facilitates interaction with environmental constraints, and by exposing it to the LLM alongside methods for object pose estimation. The approach is validated through trials on subtasks from the Functional Manipulation Benchmark (FMB) and the NIST Task Board Benchmarks, where it improves policy generation by more than threefold compared to non-compliant action spaces.
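To make the idea of policy code written against such an interface more concrete, the following is a minimal sketch, not the paper's actual API or generated output. The `FakeRobot` class, its `estimate_hole_pose` and `move_compliant` methods, and all numeric values are hypothetical stand-ins. The spiral search is a common insertion heuristic chosen here for illustration; it is an assumption, not necessarily the pattern the paper's LLM produces.

```python
import math


class FakeRobot:
    """Toy stand-in for a robot interface; every name here is hypothetical."""

    def __init__(self, hole_xy, tolerance=0.001):
        self.hole_xy = hole_xy      # true hole position (m), unknown to the policy
        self.tolerance = tolerance  # how close the peg must be to drop in (m)

    def estimate_hole_pose(self):
        # Mimic a perception error: the estimate is off by 3 mm along x.
        return (self.hole_xy[0] + 0.003, self.hole_xy[1])

    def move_compliant(self, xy, max_force_z):
        # Pretend to press down at xy with a bounded force; report whether
        # the peg is close enough to the hole to slide in.
        return math.dist(xy, self.hole_xy) <= self.tolerance


def insert_with_spiral_search(robot, pitch=0.001, max_radius=0.005,
                              d_theta=0.2, max_force_z=10.0):
    """Probe along an Archimedean spiral around the estimated hole position."""
    cx, cy = robot.estimate_hole_pose()
    theta, radius = 0.0, 0.0
    while radius <= max_radius:
        probe = (cx + radius * math.cos(theta), cy + radius * math.sin(theta))
        if robot.move_compliant(probe, max_force_z):
            return True
        theta += d_theta
        radius = pitch * theta / (2.0 * math.pi)  # radius grows by `pitch` per turn
    return False
```

In this toy setup the first probe at the estimated pose fails because of the 3 mm perception error, and the spiral recovers only if `max_radius` is large enough to reach the true hole. The bounded `max_force_z` argument stands in for the force constraint that a compliant action space would enforce during each probe.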

The research draws on techniques from robotics and deep learning, including variable impedance control, to guide robots through complex manipulation tasks. The compliant action space lets the LLM parameterize low-level control by modulating stiffness and constraining interaction forces. This encourages the LLM to generate the motion patterns needed for task completion, for example in peg insertion or cable routing.
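One way to picture an action parameterized by stiffness and force limits is a per-axis Cartesian impedance law with clipping, F = K (x_d - x) - D v, bounded by a maximum force. The sketch below assumes this standard textbook form; the `CompliantMove` structure, the critical-damping choice, and the example numbers are illustrative assumptions rather than the paper's controller.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class CompliantMove:
    """Hypothetical action: a Cartesian target plus per-axis compliance settings."""
    target: Sequence[float]     # desired position per axis (m)
    stiffness: Sequence[float]  # spring constant per axis (N/m)
    max_force: Sequence[float]  # force limit per axis (N)


def impedance_force(action: CompliantMove,
                    position: Sequence[float],
                    velocity: Sequence[float],
                    damping_ratio: float = 1.0,
                    mass: float = 1.0) -> List[float]:
    """Return the clipped spring-damper force commanded on each axis."""
    forces = []
    for x_d, x, v, k, f_max in zip(action.target, position, velocity,
                                   action.stiffness, action.max_force):
        d = 2.0 * damping_ratio * (k * mass) ** 0.5  # damping from the chosen ratio
        f = k * (x_d - x) - d * v                    # spring-damper force
        forces.append(max(-f_max, min(f_max, f)))    # enforce the force limit
    return forces


# Example: soft in x/y so the peg can slide sideways, stiffer in z to push down,
# with the downward force capped at 10 N.
action = CompliantMove(target=[0.0, 0.0, -0.05],
                       stiffness=[100.0, 100.0, 800.0],
                       max_force=[5.0, 5.0, 10.0])
forces = impedance_force(action, position=[0.002, -0.001, 0.0],
                         velocity=[0.0, 0.0, 0.0])
```

With these values the unclipped z force would be 800 × (-0.05) = -40 N, so the limit reduces it to -10 N, while the lateral axes produce small restoring forces. Lower lateral stiffness lets contact forces from the environment guide the part instead of the controller overriding them.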

Key Insights and Results

The numerical results in the paper show clear performance improvements over approaches that do not use compliant action spaces. The LLM-generated code also substantially outperforms a basic scripted baseline in various settings. For instance, on the Functional Manipulation Benchmark, GenCHiP-generated policies succeeded on a variety of object shapes that demand high precision. Extending the framework to industrial tasks further showed that the approach scales to complex real-world problems.

Implications and Future Directions

This research provides compelling evidence that LLMs can autonomously generate precise control code for robots. It extends the demonstrated adaptability of LLMs to domains traditionally dominated by task-specific control engineering. Practically, the paper suggests a pathway to automating complex calibration tasks in robotics by drawing on the world knowledge that LLMs inherit.

Future work could refine strategies for integrating perception with language-model-generated control, perhaps through multi-modal data fusion and unsupervised learning in control environments. The promising results also suggest continued work on the robustness and generalization of robot policy code, including integration with richer sensory inputs and broader classes of manipulation tasks.

In summary, the paper presents a well-argued examination of using LLMs to generate robot control policies, underlining the versatility of these models in planning and executing intricate tasks that go beyond purely linguistic capabilities. The results reinforce the growing relevance of LLMs in complex task automation, which may eventually transform approaches to robot learning and control.