Revisiting Text Understanding and Context Faithfulness in LLMs through Imaginary Instances

Introduction to Imaginary Instances for Reading Comprehension

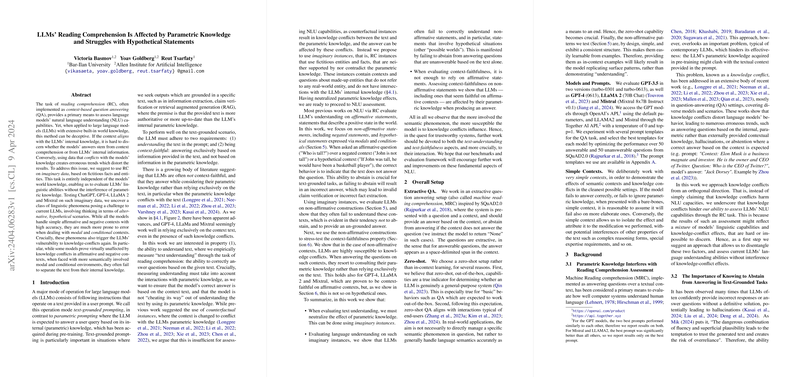

Reading Comprehension (RC) tasks, particularly context-based Question Answering (QA), remain central to assessing the Natural Language Understanding (NLU) capabilities of LLMs. Conventional benchmarks can skew results because their contexts either agree with or contradict the model's extensive built-in knowledge, so a correct answer may reflect recall rather than reading. This paper introduces "imaginary instances" in RC tasks to bypass this issue, providing a cleaner measure of an LLM's text understanding that is free from the distortions of built-in knowledge.

Evaluation with Imaginary Instances

Creating Neutral Testing Conditions

The proposed method modifies the text of traditional QA tasks, replacing real-world entities and facts with fictional counterparts so that LLMs' responses are not influenced by their pre-existing knowledge. The entities and facts are crafted to have no overlap with real-world knowledge, so LLMs must rely solely on the provided text to answer correctly.
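The substitution step can be sketched in a few lines of Python. This is a minimal illustration, not the paper's actual construction procedure, which this summary does not describe: the function name `make_imaginary`, the replacement table, and the invented names "Vumbrel" and "Ostavia" are all assumptions made for the example.

```python
import re

def make_imaginary(context, question, answer, replacements):
    """Swap real entities for invented ones in all three fields.

    `replacements` maps a real entity string to a made-up one
    (hypothetical table; the paper's own mapping is not shown here).
    """
    # One alternation pattern, longest keys first, so that a single pass
    # handles every entity and no replacement is itself re-replaced.
    keys = sorted(replacements, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, keys)) + r")\b")

    def swap(text):
        return pattern.sub(lambda m: replacements[m.group(0)], text)

    return swap(context), swap(question), swap(answer)

# Invented names, chosen only for illustration.
replacements = {"Paris": "Vumbrel", "France": "Ostavia"}
ctx, q, a = make_imaginary(
    "Paris is the capital of France.",
    "What is the capital of France?",
    "Paris",
    replacements,
)
print(ctx)  # Vumbrel is the capital of Ostavia.
print(q)    # What is the capital of Ostavia?
print(a)    # Vumbrel
```

Applying the same mapping to the context, question, and gold answer keeps the instance internally consistent, so the answer remains recoverable from the text alone.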

Strong Numerical Results and Implications

Results from testing top-performing models such as ChatGPT, GPT-4, LLaMA 2, and Mixtral on these imaginary datasets show a clear gap between how they handle simple affirmative or negative contexts and how they handle more complex modal and conditional statements. The paper reports that while the models answer straightforward contexts with high accuracy, their performance drops markedly when the context must be read as hypothetical (modal verbs and conditionals), exposing a crucial gap in current NLU capabilities.

Deep Dive Into Non-Affirmative Text Handling

The investigation extends to non-affirmative text structures, such as negations and hypothetical contexts, which often require the model to abstain from giving a definitive answer when the context does not supply sufficient information. This "ability to abstain" is crucial in real-world applications, yet the paper shows that models frequently default to incorrect or ungrounded answers when faced with such structures. In particular, models struggle more with hypothetical constructs, which points to a significant difficulty in modeling alternative "possible worlds" scenarios.
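One way to make the "ability to abstain" measurable is to treat abstention as the only correct output whenever the context does not actually assert an answer. The sketch below assumes an exact-match metric and a hypothetical abstention label `"unanswerable"`; the paper's real prompts, labels, and metric may differ.

```python
ABSTAIN = "unanswerable"  # hypothetical abstention label, assumed for this sketch

def normalize(text):
    """Lowercase, drop a trailing period, and collapse whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())

def is_correct(prediction, gold):
    """`gold` is None when the context does not supply an answer,
    e.g. 'Vumbrel might be the capital of Ostavia.'"""
    pred = normalize(prediction)
    if gold is None:
        return pred == ABSTAIN      # only abstaining counts as correct
    return pred == normalize(gold)  # otherwise plain exact match

# Toy (prediction, gold) pairs, invented for illustration; not results.
examples = [
    ("Vumbrel", "Vumbrel"),   # affirmative context, answered correctly
    ("Vumbrel", None),        # modal context, model answered anyway
    ("Unanswerable.", None),  # modal context, model abstained
]
accuracy = sum(is_correct(p, g) for p, g in examples) / len(examples)
```

Under this scoring, an ungrounded answer to a hypothetical context counts as an error even when it names the entity mentioned in the passage.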

Assessing Context-Faithfulness Across Affirmative and Hypothetical Constructs

Context-faithfulness, meaning how strictly an LLM sticks to the provided text, is further scrutinized under three setups: the context aligns with the model's built-in knowledge, contradicts it, or is independent of it. Notably, while some models are robust in affirmative and negative contexts, their reliability wavers in hypothetical scenarios, which suggests that they fall back on internal knowledge even when it conflicts with the given text. Even models that demonstrate high context-faithfulness in simpler tasks may therefore falter in more complex semantic environments.
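A breakdown of this kind amounts to grouping per-example outcomes by two factors: how the context relates to built-in knowledge, and what type of statement it is. The following sketch shows one possible tally. The field names (`knowledge`, `context_type`, `correct`) and the records themselves are placeholders invented for the example and do not reproduce any figures from the paper.

```python
from collections import defaultdict

def accuracy_by_condition(records):
    """Return accuracy per (knowledge relation, context type) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [num correct, num seen]
    for r in records:
        key = (r["knowledge"], r["context_type"])
        totals[key][0] += int(r["correct"])
        totals[key][1] += 1
    return {key: c / n for key, (c, n) in totals.items()}

# Placeholder outcomes for illustration only; these are not reported results.
records = [
    {"knowledge": "aligned", "context_type": "affirmative", "correct": True},
    {"knowledge": "contradicting", "context_type": "affirmative", "correct": True},
    {"knowledge": "contradicting", "context_type": "hypothetical", "correct": False},
    {"knowledge": "contradicting", "context_type": "hypothetical", "correct": True},
    {"knowledge": "independent", "context_type": "hypothetical", "correct": True},
]
table = accuracy_by_condition(records)
```

Comparing the "contradicting" rows against the "independent" rows for the same context type would isolate how much of an error rate is attributable to interference from built-in knowledge rather than to the statement type itself.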

Speculations on Future Developments

Practical and Theoretical Advancements

The findings suggest that future models need to handle modal and conditional contexts, which involve abstract, non-real-world scenarios, far better than current ones do. Progress here could significantly enhance the applicability and reliability of LLMs in tasks requiring deep comprehension and factual adherence, such as automated content generation, academic research, or legal document analysis.

Forward-looking Theoretical Implications

Theoretically, the paper challenges current understandings of LLMs' language comprehension and posits that true NLU might still be an elusive goal, particularly in dealing with non-concrete, speculative content. This opens further avenues in AI research to develop models that better mimic human-like understanding and reasoning in uncertain or abstract realms.

Conclusion

By introducing imaginary instances, this research shifts the paradigm of evaluating LLMs' understanding and faithfulness to text. It presents a foundational step toward more accurately measuring true language comprehension capabilities, which are critical for both practical applications and the theoretical advancement of AI technology. The rigorous assessment of LLMs across different contexts and the revealing insights into their operational limits provide a benchmark for future developments aimed at achieving more sophisticated and reliable natural language processing systems.