Exploring Direct Nash Optimization for Self-Improving LLMs

Introduction to Direct Nash Optimization

Aligning large language models (LLMs) with complex human preferences remains a significant challenge in post-training. Traditional approaches such as Reinforcement Learning from Human Feedback (RLHF) maximize a scalar reward. A scalar reward, however, cannot express general preferences, in particular intransitive or cyclic preference relations, where A is preferred to B, B to C, and C to A. The paper on Direct Nash Optimization (DNO) addresses this with a framework that departs from the reward-maximization paradigm and instead optimizes general preferences directly through a scalable, contrastive learning-based algorithm.
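
To make the limitation concrete, the following minimal sketch checks whether any scalar reward could reproduce a set of pairwise preferences. The response labels, the two preference sets, and the helper `has_consistent_ranking` are hypothetical illustrations, not taken from the paper.

```python
from itertools import permutations

# Hypothetical pairwise preferences: (a, b) means "a is preferred to b".
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}
transitive = {("A", "B"), ("B", "C"), ("A", "C")}


def has_consistent_ranking(items, prefers):
    """Return True if some strict ranking agrees with every pairwise preference.

    A scalar reward r with r(a) > r(b) for every preferred pair exists
    exactly when such a ranking exists, so this is a brute-force test of
    whether the preferences can be expressed by a scalar reward.
    """
    for order in permutations(items):
        # Earlier position in the ordering means higher reward.
        position = {item: index for index, item in enumerate(order)}
        if all(position[a] < position[b] for a, b in prefers):
            return True
    return False


print(has_consistent_ranking("ABC", transitive))
print(has_consistent_ranking("ABC", cyclic))
```

The transitive set admits a ranking while the cyclic one does not, which is the case a general preference function is meant to cover.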

Key Contributions of the Study

The paper introduces DNO, an algorithm that combines the theoretical soundness of optimizing general preferences with the practical efficiency and stability of contrastive learning. Its main contributions and findings are:

- Algorithmic Foundation: DNO uses batched on-policy iterations together with a regression-based objective, which makes optimizing general preferences stable and efficient. This design avoids computing an explicit reward function.

- Theoretical Insights: The paper shows, through theoretical analysis, that DNO converges to a Nash equilibrium on average, giving its approach to learning from general preference feedback a mathematical foundation.

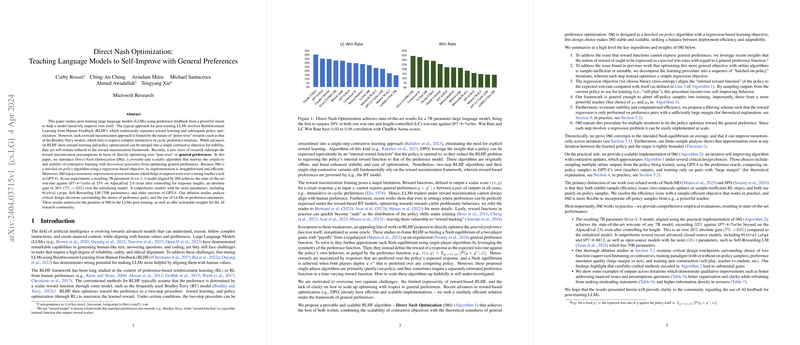

- Practical Efficacy: Empirical evaluations show that DNO, applied to a 7B parameter LLM, outperforms its counterparts and achieves state-of-the-art performance on standard benchmarks such as AlpacaEval 2.0.

- Monotonic Improvement: DNO is proven to improve monotonically across iterations, so each iteration makes consistent progress in aligning the LLM with the targeted preferences.
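
The phrase "converges to a Nash equilibrium on average" can be illustrated on a toy game. The sketch below is not the DNO algorithm: it runs multiplicative-weights self-play over three candidate responses with a cyclic preference table and averages the policies across iterations. The table `P`, the step size `eta`, and all function names are assumptions made for illustration.

```python
import math

# Hypothetical preference table: P[i][j] is the probability that
# response i is preferred to response j (a rock-paper-scissors cycle).
P = [
    [0.5, 0.0, 1.0],
    [1.0, 0.5, 0.0],
    [0.0, 1.0, 0.5],
]


def win_rates(policy):
    """Expected win rate of each response against a draw from `policy`."""
    n = len(policy)
    return [sum(P[i][j] * policy[j] for j in range(n)) for i in range(n)]


def self_play_step(policy, eta):
    """Reweight each response by exp(eta * its win rate against the current policy)."""
    rates = win_rates(policy)
    weights = [p * math.exp(eta * r) for p, r in zip(policy, rates)]
    total = sum(weights)
    return [w / total for w in weights]


def average_policy(start, steps, eta):
    """Average of the policies visited during `steps` rounds of self-play."""
    policy = list(start)
    running_sum = [0.0] * len(start)
    for _ in range(steps):
        running_sum = [s + p for s, p in zip(running_sum, policy)]
        policy = self_play_step(policy, eta)
    return [s / steps for s in running_sum]


def exploitability(policy):
    """Best win rate achievable against `policy`, minus the 0.5 of an even match."""
    return max(win_rates(policy)) - 0.5


start = [0.8, 0.1, 0.1]
avg = average_policy(start, steps=2000, eta=0.1)
print(exploitability(start), exploitability(avg))
```

In this toy game the only equilibrium is the uniform policy, whose exploitability is zero. The individual iterates can keep cycling, which is why the guarantee is stated for the average rather than for the last iterate.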

Theoretical and Practical Implications

DNO contributes to both the theoretical understanding and the practical application of post-training LLMs with human feedback. Specifically, the paper sheds light on the following aspects:

- Expressing Complex Preferences: By moving away from scalar reward functions, DNO can express complex preferences, including intransitive ones, that a scalar reward cannot represent, paving the way for more nuanced LLM tuning.

- Stability and Efficiency: Pairing batched on-policy iterations with a regression-based objective gives DNO both theoretical soundness and practical efficiency when learning from human feedback.

- Benchmark Performance: The state-of-the-art performance of the resulting 7B parameter model indicates that DNO is effective in practice and suggests its potential as a standard approach for post-training LLMs.
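
As a rough picture of what a contrastive objective over preference pairs can look like, the sketch below computes a logistic loss on the log-probability margin between a preferred and a dispreferred response, measured relative to a reference policy. This assumes a DPO-style form; the exact objective in the paper may differ, and the function name, argument names, and `beta` value are hypothetical.

```python
import math


def contrastive_pair_loss(logp_win, logp_lose, ref_logp_win, ref_logp_lose, beta=0.1):
    """Logistic loss on the policy-vs-reference log-probability margin.

    Inputs are sequence log-probabilities of the preferred ("win") and
    dispreferred ("lose") responses under the current and reference policies.
    """
    margin = beta * ((logp_win - ref_logp_win) - (logp_lose - ref_logp_lose))
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); branch for numerical stability.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss falls as the policy shifts probability toward the preferred response.
print(contrastive_pair_loss(-5.0, -5.0, -5.0, -5.0))
print(contrastive_pair_loss(-4.0, -6.0, -5.0, -5.0))
```

Because the loss depends only on log-probabilities of sampled responses, no explicit reward function has to be computed, consistent with the description above.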

Future Directions

While DNO marks a significant advance in aligning LLMs with human preferences, it also opens avenues for further work. Future research could extend the algorithm to applications beyond text generation, integrate DNO with other LLM architectures, and further improve its efficiency and scalability.

Conclusion

The development and study of Direct Nash Optimization represent a noteworthy advance in aligning LLMs with human preferences. By demonstrating the approach's effectiveness both theoretically and empirically, the research sets a precedent for fine-tuning LLMs in a way that more accurately reflects the intricacies of human preferences.