AutoWebGLM: Innovations and Evaluations in AI-Powered Web Navigation Agents

Introduction to AutoWebGLM

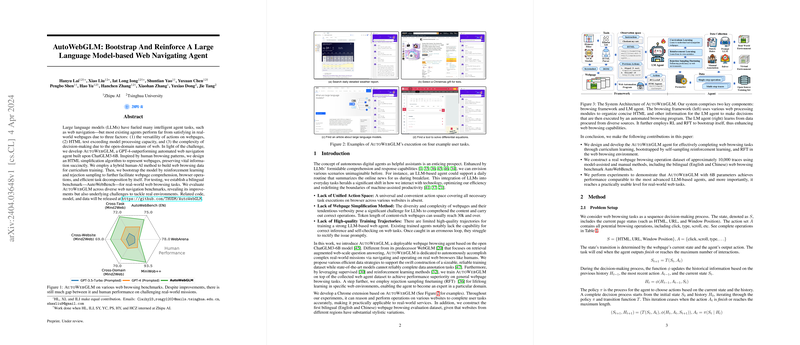

AutoWebGLM is a web navigation agent built on the ChatGLM3-6B language model. On automated web navigation it outperforms earlier baselines, including GPT-4, largely because of how it represents and interacts with webpages. Its main contributions target three sources of difficulty in web navigation: the variety of actions that webpages require, the length of raw HTML (which AutoWebGLM simplifies before processing), and the scarcity of high-quality training trajectories.

Challenges Addressed

AutoWebGLM's design targets three main obstacles to automating web navigation:

- Unified Action Space: It defines a single set of browser operations, such as clicking, typing, and scrolling, that the agent can apply across many different websites.

- HTML Simplification: An algorithm condenses each page's HTML while preserving the information the agent needs, so that page observations fit within the model's token-length limit.

- High-quality Training Trajectories: By combining model-assisted and manual annotation, AutoWebGLM's authors build a dataset for training agents that infer correct actions and recover from their own errors.
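A minimal sketch helps illustrate what HTML simplification under a token budget involves. It uses a pruning policy chosen only for illustration: drop the contents of `script`, `style`, `noscript`, `svg`, and `head`, give each interactive element a numeric id, and keep visible text. It also approximates tokens by counting whitespace-separated words. The paper's actual rules and tokenizer are not reproduced here. The names `Simplifier` and `simplify` are hypothetical, and the code relies only on Python's standard `html.parser` module.

```python
from html.parser import HTMLParser

# Illustrative pruning rules (hypothetical, not AutoWebGLM's actual rules).
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}
SKIP_TAGS = {"script", "style", "noscript", "svg", "head"}


class Simplifier(HTMLParser):
    """Flattens a page into numbered interactive elements plus visible text lines."""

    def __init__(self):
        super().__init__()
        self.lines = []
        self.skip_depth = 0  # > 0 while inside a tag whose content is dropped
        self.next_id = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
            return
        if self.skip_depth or tag not in INTERACTIVE_TAGS:
            return
        attrs = dict(attrs)
        # Keep only an attribute that helps the model identify the element.
        label = attrs.get("aria-label") or attrs.get("placeholder") or attrs.get("name") or ""
        self.lines.append(f"[{self.next_id}] <{tag}> {label}".rstrip())
        self.next_id += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse runs of whitespace
        if text and not self.skip_depth:
            self.lines.append(text)


def simplify(html: str, max_tokens: int = 512) -> str:
    """Simplify HTML and truncate the result to roughly max_tokens words."""
    parser = Simplifier()
    parser.feed(html)
    parser.close()
    out, used = [], 0
    for line in parser.lines:
        cost = len(line.split())  # crude whitespace token count (an assumption)
        if used + cost > max_tokens:
            break
        out.append(line)
        used += cost
    return "\n".join(out)
```

For a fragment such as `<h1>Shop</h1><input name='q' placeholder='Search'><button>Go</button>`, the output has four lines: the text `Shop`, `[0] <input> Search`, `[1] <button>`, and the button label `Go`. The numbered ids give the agent compact handles it can reference when it issues actions.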

Methodological Insights

The foundation of AutoWebGLM lies in its methodological innovations:

- HTML Representation: The system uses an HTML simplification algorithm modeled on how humans browse the web. This substantially reduces the length and complexity of the page text that the model must read.

- Hybrid Human-AI Data Construction: Pairing model-generated annotation with human annotation makes it possible to assemble a rich training dataset quickly. The model learns web operations and decision-making from this data.

- Curriculum Learning and Reinforcement Approaches: Training applies curriculum learning, reinforcement learning (RL), and rejection sampling finetuning (RFT) in sequence. Each stage builds on the previous one to improve the model's performance across the stages of web interaction.
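Two of these training ideas can be sketched generically: curriculum ordering and rejection-sampling data selection. This is not AutoWebGLM's training pipeline. The `Trajectory` record, the `difficulty` function, and the `policy` callable are hypothetical stand-ins, and task success is assumed to be reported by some external checker. `curriculum_stages` sorts tasks from easy to hard and splits them into consecutive training stages. `rejection_sampling_dataset` samples several trajectories per task and keeps only the successful ones as finetuning data. The RL stage is omitted.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Trajectory:
    task: str
    actions: List[str]
    success: bool  # assumed to be judged by an external checker


def curriculum_stages(
    tasks: List[str], difficulty: Callable[[str], float], n_stages: int
) -> List[List[str]]:
    """Sort tasks from easiest to hardest and split them into consecutive stages."""
    if not tasks:
        return []
    ordered = sorted(tasks, key=difficulty)
    size = -(-len(ordered) // n_stages)  # ceiling division so no task is dropped
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def rejection_sampling_dataset(
    tasks: List[str],
    policy: Callable[[str, random.Random], Trajectory],
    k: int,
    seed: int = 0,
) -> List[Trajectory]:
    """Sample k trajectories per task and keep only the successful ones."""
    rng = random.Random(seed)  # seeded so sampling is reproducible
    kept = []
    for task in tasks:
        for _ in range(k):
            trajectory = policy(task, rng)
            if trajectory.success:
                kept.append(trajectory)
    return kept
```

For example, with `difficulty=lambda t: len(t.split())`, the tasks `["a b c", "a", "a b"]` split into two stages as `[["a", "a b"], ["a b c"]]`. Filtering on success means the finetuning set contains only trajectories the model itself produced and that reached the goal.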

Dataset and Benchmark Development

A notable contribution of AutoWebGLM's development is AutoWebBench, a bilingual (English and Chinese) benchmark that addresses the need for comprehensive evaluation tools in web navigation research. It measures how well an agent navigates and interacts with real-world webpages, which indicates how practical AI-powered web agents are outside controlled settings.
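One way to report results on a bilingual benchmark is to score English and Chinese examples separately. The sketch below assumes a hypothetical per-step record (`StepResult`) and counts a step as correct when the predicted action string exactly matches the gold action after whitespace normalization. AutoWebBench's actual data format and metrics are not described here and may differ.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StepResult:
    language: str  # hypothetical field: "en" or "zh"
    gold_action: str
    predicted_action: str


def normalize(action: str) -> str:
    """Collapse whitespace so formatting differences do not count as errors."""
    return " ".join(action.split())


def accuracy_by_language(results: List[StepResult]) -> Dict[str, float]:
    """Exact-match step accuracy, computed separately for each language."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for r in results:
        total[r.language] += 1
        if normalize(r.predicted_action) == normalize(r.gold_action):
            correct[r.language] += 1
    return {lang: correct[lang] / total[lang] for lang in total}
```

Reporting accuracy per language shows whether an agent handles pages in one language noticeably worse than pages in the other.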

Empirical Evaluations and Findings

Extensive testing of AutoWebGLM on multiple benchmarks, including the new AutoWebBench, shows that it outperforms existing LLM-based web navigation agents. The results show clear gains across a range of web navigation tasks, and they also point to areas that need further research and development.

- Performance Metrics: AutoWebGLM achieves high success rates across diverse web navigation benchmarks, showcasing its robustness and versatility.

- Challenges in Real-World Navigation: Despite these gains, AutoWebGLM's results underline how complex real-world web navigation is. Model training and the agent's understanding of its environment both still need improvement.

Concluding Remarks

AutoWebGLM marks a significant advance in AI-powered web navigation. By addressing fundamental challenges and combining several training methods, it sets a new standard for developing intelligent web navigation agents. AutoWebBench adds a much-needed evaluation resource for future work on AI-driven web interaction. As web navigation research evolves, AutoWebGLM is an important step toward LLMs that can navigate the internet effectively.