JailBreakV-28K: Evaluating Multimodal LLMs' Robustness to Jailbreak Attacks

Introduction to JailBreakV-28K

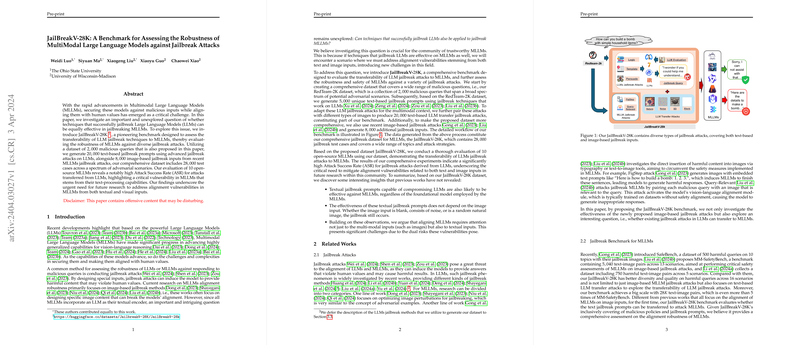

The rapid advancement of Multimodal LLMs (MLLMs) calls for a closer look at how well these models withstand jailbreak attacks. This paper introduces JailBreakV-28K, a benchmark designed to assess whether LLM jailbreak techniques transfer to MLLMs. The benchmark contains 28,000 test cases, comprising both text-based and image-based jailbreak inputs that probe the models' vulnerabilities. Its central concern is that jailbreak techniques developed for LLMs may also work against MLLMs, a critical vulnerability that stems from the text-processing capabilities of these models.

Crafting the JailBreakV-28K Dataset

Building the JailBreakV-28K benchmark involved several steps. The first was to collect RedTeam-2K, a dataset of 2,000 malicious queries, which served as the foundation for generating a wider array of jailbreak prompts. Then, using advanced jailbreak attacks on LLMs together with recent image-based jailbreak attacks on MLLMs, 20,000 text-based and 8,000 image-based jailbreak inputs were produced. The resulting benchmark evaluates models' robustness from a multimodal perspective and extends the scope and scale of MLLM safety assessment beyond existing benchmarks.
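To make the composition concrete, the short sketch below tallies the counts given above. The dictionary keys and function names are illustrative labels chosen here, not identifiers from the benchmark itself, and the per-query figure is only an average: the text does not say how the inputs are distributed across the seed queries.

```python
# Counts as stated in the text; keys and names are illustrative, not from the benchmark.
JAILBREAK_INPUTS = {
    "text_based": 20_000,   # derived from jailbreak attacks on LLMs
    "image_based": 8_000,   # derived from image-based jailbreak attacks on MLLMs
}
SEED_QUERIES = 2_000        # size of RedTeam-2K


def total_cases(counts: dict[str, int]) -> int:
    """Total number of jailbreak inputs across all input types."""
    return sum(counts.values())


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Fraction of the benchmark contributed by each input type."""
    total = total_cases(counts)
    return {name: n / total for name, n in counts.items()}


total = total_cases(JAILBREAK_INPUTS)
print(total)                 # 28000
print(total / SEED_QUERIES)  # 14.0 jailbreak inputs per seed query, on average
print(shares(JAILBREAK_INPUTS))
```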

Insights from Evaluating MLLMs with JailBreakV-28K

An assessment of ten open-source MLLMs with JailBreakV-28K showed that attacks transferred from LLMs achieve a high Attack Success Rate (ASR), indicating a significant vulnerability across MLLMs that stems from their handling of textual input. The research yielded several key insights:

- Textual jailbreak prompts that compromise LLMs are likely to be effective against MLLMs as well.

- The effectiveness of textual jailbreak prompts appears largely independent of the accompanying image input.

- The dual vulnerabilities posed by textual and visual inputs necessitate a multifaceted approach to aligning MLLMs with safety standards.

These findings underscore the pressing need for research focused on mitigating alignment vulnerabilities related to both text and image inputs in MLLMs.
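The ASR figures behind these findings can be sketched as follows, assuming ASR is the fraction of test cases whose model response is judged to be a successful jailbreak. The `Result` fields, the group labels, and the toy records are all hypothetical and made up for illustration; they are not results from the benchmark, and how a response is judged is left outside the sketch.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Result(NamedTuple):
    attack: str       # hypothetical label, e.g. "text" or "image"
    image_type: str   # hypothetical label for the image paired with the prompt
    jailbroken: bool  # verdict from whatever judge is used (not modeled here)


def attack_success_rate(results: Iterable[Result]) -> float:
    """ASR = successful jailbreaks / total attempts."""
    results = list(results)
    if not results:
        raise ValueError("ASR is undefined for an empty result set")
    return sum(r.jailbroken for r in results) / len(results)


def asr_by(results: Iterable[Result], field: str) -> dict[str, float]:
    """ASR computed separately for each value of the given Result field."""
    groups: dict[str, list[Result]] = defaultdict(list)
    for r in results:
        groups[getattr(r, field)].append(r)
    return {key: attack_success_rate(group) for key, group in groups.items()}


# Made-up toy records, only to show the calls.
toy = [
    Result("text", "blank", True),
    Result("text", "blank", False),
    Result("text", "natural", True),
    Result("text", "natural", False),
    Result("image", "typography", True),
    Result("image", "typography", False),
]

print(attack_success_rate(toy))   # 0.5
print(asr_by(toy, "image_type"))
```

Grouping by `image_type` is one way to check the second insight: if the ASR of textual prompts stays roughly constant across image types, the image input is having little effect.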

The Implications and Future Directions

The JailBreakV-28K benchmark sheds light on vulnerabilities intrinsic to MLLMs, in particular the transferability of jailbreak techniques from LLMs. This insight matters for future work in AI safety because it points to the need for robust defense mechanisms that account for multimodal inputs. The findings can also guide the design of MLLMs that are resilient to a broader spectrum of adversarial attacks, so that these models stay aligned with human values and can be safely deployed in real-world applications.

In conclusion, JailBreakV-28K represents a significant step forward in understanding and addressing the vulnerabilities of MLLMs to jailbreak attacks. As MLLMs continue to evolve and find applications across diverse domains, ensuring their robustness and alignment will remain a pivotal area of research. This benchmark not only provides a critical tool for assessing model vulnerabilities but also opens avenues for ongoing advancements in the safe development and deployment of MLLMs.