Survey of Bias in Text-to-Image Generation: Definition, Evaluation, and Mitigation

Introduction

The research under discussion provides a comprehensive overview of bias within Text-to-Image (T2I) generative systems. This field of study has rapidly gained attention with the advancement of models such as OpenAI's DALL-E 3 and Google's Gemini. While these models promise a wide range of applications, they also raise significant concerns about bias that mirror broader societal issues around gender, skintone, and geo-cultural representation. The authors present the survey as the first to extensively collate and analyze existing studies on bias in T2I systems. It sheds light on how bias is defined, evaluated, and mitigated across these dimensions.

Bias Definitions

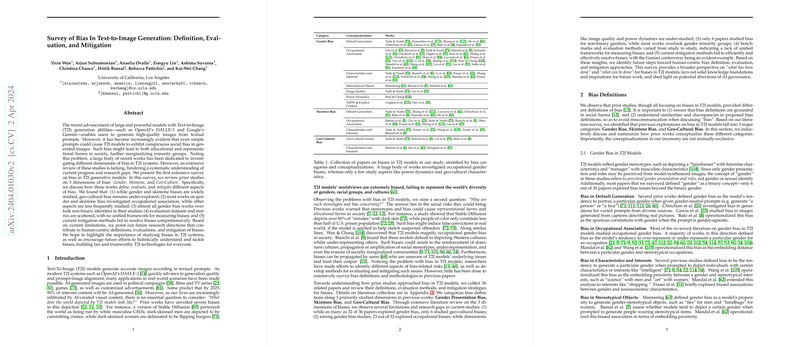

The paper identifies three primary dimensions of bias in T2I models:

- Gender Bias has been studied extensively, and this research reveals a strong inclination toward binary gender representations and stereotypes. Specific areas scrutinized include default generation (which gender appears when none is specified), occupational association, and the portrayal of characteristics, interests, stereotypes, and power dynamics.

- Skintone Bias addresses the model's tendency to favor lighter skin tones when skintone is unspecified. These biases extend to occupational associations and to the portrayal of characteristics and interests.

- Geo-Cultural Bias reflects the under-representation or skewed portrayal of cultures, notably amplifying Western norms and stereotypes at the expense of global diversity.

Bias Evaluation

Evaluation Datasets

Approaches to dataset compilation vary, from manually curated prompts to purpose-built datasets such as CCUB and the Holistic Evaluation of Text-to-Image Models (HEIM) benchmark. Other studies adopt existing datasets such as LAION-5B and MS-COCO. This variety highlights how fragmented current evaluation frameworks are.
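Manually curated prompt sets are often assembled by crossing neutral templates with a list of subjects, so that gender, skintone, and culture are left unspecified and the model's defaults become visible. This pattern is common in the bias literature. The sketch below illustrates it; the templates, the occupation list, and the `build_prompts` helper are hypothetical and are not taken from CCUB, HEIM, or any other dataset the survey mentions.

```python
from itertools import product

# Hypothetical templates and subjects, for illustration only.
# Occupations are chosen to start with a consonant so "a {subject}" stays grammatical.
TEMPLATES = [
    "a photo of a {subject}",
    "a portrait of a {subject} at work",
]
OCCUPATIONS = ["doctor", "nurse", "pilot", "teacher"]


def build_prompts(templates: list[str], subjects: list[str]) -> list[str]:
    """Cross every template with every subject, leaving demographic attributes unspecified."""
    return [t.format(subject=s) for t, s in product(templates, subjects)]


if __name__ == "__main__":
    for prompt in build_prompts(TEMPLATES, OCCUPATIONS):
        print(prompt)
```

Each prompt would then be sent to the T2I model many times, and the resulting images would be scored with an evaluation metric.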

Evaluation Metrics

The paper notes that classification-based metrics are the most prevalent, and that embedding-based metrics complement them with a more nuanced view of bias. Classification methods dominate the evaluation landscape. The paper also addresses concerns about the reliability and ethics of both automated and human annotation processes.
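The summary names these two metric families without fixing a formula, so the sketch below picks one illustrative instance of each. For the classification-based metric, it measures how far the classifier-label distribution over images from one neutral prompt sits from a uniform distribution, using a normalized total variation distance. For the embedding-based metric, it takes the gap in mean cosine similarity between image embeddings and two attribute text embeddings. The labels and embeddings are assumed to come from an external attribute classifier and a CLIP-style image-text encoder, neither of which is shown. All function names are hypothetical. Using uniform as the reference is itself a normative choice and not the only reasonable one.

```python
import math
from collections import Counter


def label_distribution(labels: list[str], categories: list[str]) -> dict[str, float]:
    """Fraction of generated images that an attribute classifier assigned to each category."""
    counts = Counter(labels)
    return {c: counts[c] / len(labels) for c in categories}


def skew_score(dist: dict[str, float]) -> float:
    """Total variation distance from a uniform distribution, normalized to [0, 1].

    0.0 means every category appears equally often; 1.0 means all images
    fall into a single category. Assumes at least two categories.
    """
    k = len(dist)
    tv = 0.5 * sum(abs(p - 1.0 / k) for p in dist.values())
    return tv / ((k - 1) / k)  # (k - 1) / k is the largest possible TV distance


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def association_gap(
    image_embs: list[list[float]], attr_a: list[float], attr_b: list[float]
) -> float:
    """Mean similarity of the images to attribute A minus mean similarity to attribute B.

    Positive values mean the images lie closer to attribute A in the shared
    embedding space; 0.0 means no measured preference.
    """
    gaps = [cosine(e, attr_a) - cosine(e, attr_b) for e in image_embs]
    return sum(gaps) / len(gaps)


# Toy inputs standing in for real classifier outputs and encoder embeddings.
labels = ["male"] * 8 + ["female"] * 2
print(skew_score(label_distribution(labels, ["female", "male"])))  # about 0.6
print(association_gap([[1.0, 0.0]], [1.0, 0.0], [0.0, 1.0]))  # 1.0
```

Both scores inherit the weaknesses of their inputs. A misclassifying attribute classifier or a biased encoder distorts the result, and this ties back to the reliability concerns about automated annotation.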

Bias Mitigation

Mitigation strategies fall into three broad categories: model weight refinement, inference-time methods, and data approaches. Proposed methods range from fine-tuning and model-based editing to prompt engineering and guided generation, yet none offers a comprehensive solution to bias. The paper calls for further research into robust, adaptive, and community-informed mitigation strategies to build fairer T2I systems.
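As an illustration of the inference-time, prompt-engineering family, one naive idea is to rewrite an attribute-neutral prompt by appending an explicit attribute phrase. The phrases cycle through a list so that each attribute is requested equally often across a batch. The following is a minimal sketch under that assumption only. The `diversify_prompts` helper and the attribute phrases are hypothetical and do not represent any specific method from the survey.

```python
from itertools import cycle, islice


def diversify_prompts(prompt: str, attributes: list[str], n: int) -> list[str]:
    """Return n variants of an attribute-neutral prompt, appending attributes round-robin.

    Round-robin (rather than random sampling) keeps the requested attributes
    as evenly balanced as possible across the batch.
    """
    return [f"{prompt} {attr}" for attr in islice(cycle(attributes), n)]


# Hypothetical attribute phrases; a real system would need a carefully vetted list.
SKINTONES = ["with dark skin", "with medium skin", "with light skin"]

for p in diversify_prompts("a photo of a doctor", SKINTONES, 6):
    print(p)
```

Balancing what is requested does not guarantee that the generated images depict it. A practical version would also need to leave prompts that already specify the attribute untouched. These are limitations of this naive sketch and help explain why prompt-level fixes alone fall short of a comprehensive solution.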

Future Directions

The survey emphasizes the necessity for:

- Enhanced definitions that clarify and contextualize biases,

- Improved evaluation methods to measure biases accurately, considering human-centric perspectives,

- Continuous development of mitigation strategies that are effective, diverse, and adaptive to evolving societal norms.

The discussion extends to ethical considerations. It highlights the importance of transparency in how bias is defined and the risk that mitigation strategies could be misused in unjust applications.

Conclusion

This survey articulates the pressing need for an integrated approach to understanding, evaluating, and mitigating bias in T2I generative models. By categorizing existing studies and identifying gaps, it paves the way for future research aimed at developing fair, inclusive, and trustworthy T2I technologies.