Overview of the Linguistic Calibration of LLMs

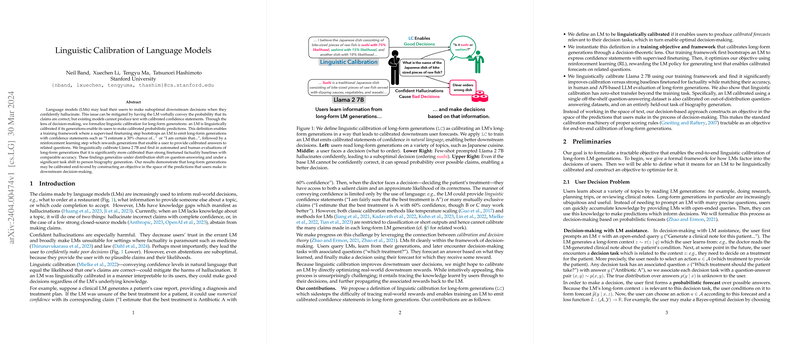

The paper entitled "Linguistic Calibration of LLMs" addresses a key problem: large language models (LMs) can lead users to suboptimal decisions through confident hallucinations, that is, outputs that sound certain of their accuracy but are in fact incorrect. To counter this, the paper introduces "linguistic calibration," which aims to align the confidence an LM expresses in its output with the actual probability that the output is correct, especially in long-form text that informs decisions.

Definition and Framework for Linguistic Calibration

The authors formally define linguistic calibration in terms of the user: an LM is linguistically calibrated if its outputs enable users to make calibrated probabilistic forecasts, i.e. forecasts whose probabilities match how often the relevant claims turn out to be correct. To get there, the LM is trained to convey its confidence in natural language, with statements like "I estimate a 30% chance of..." whose stated probabilities match the actual likelihood of correctness.
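To make the definition concrete, here is one way a reader could turn confidence statements in an LM's output into a probabilistic forecast. It is a minimal sketch under stated assumptions: the sentence template and the function name `extract_forecast` are hypothetical, and a real reader (a human, or a model standing in for one) would interpret far more varied phrasing than a single regular expression can.

```python
import re

# Hypothetical template: "I estimate a 30% chance of Paris." or
# "I estimate an 80% chance that it rains." Real outputs vary far more.
CONFIDENCE_PATTERN = re.compile(
    r"I estimate an? (\d+(?:\.\d+)?)% chance (?:of|that) ([^.]+)\."
)


def extract_forecast(text: str) -> dict[str, float]:
    """Map each claim in `text` to the probability stated for it.

    A crude, rule-based stand-in for a reader who turns an LM's
    confidence statements into a probabilistic forecast.
    """
    forecast: dict[str, float] = {}
    for percent, claim in CONFIDENCE_PATTERN.findall(text):
        # Convert "30" (a percentage) to 0.30 (a probability).
        forecast[claim.strip()] = float(percent) / 100.0
    return forecast


if __name__ == "__main__":
    output = "I estimate a 30% chance of Paris. I estimate a 70% chance of Lyon."
    print(extract_forecast(output))
```

Run as a script, this prints `{'Paris': 0.3, 'Lyon': 0.7}`. Linguistic calibration then asks whether, across many such forecasts, claims assigned 30% are correct roughly 30% of the time.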

The training framework designed to achieve linguistic calibration has two steps:

- Summary Distillation: A supervised finetuning step in which the LM learns to consolidate multiple sampled generations into a single coherent summary, with confidence statements reflecting how consistent those generations are.

- Decision-Based Reinforcement Learning (RL): An RL step that rewards the LM for producing text that lets users make calibrated forecasts in downstream decision tasks. The reward applies a proper scoring rule, a standard tool from decision theory, to those forecasts, so the LM is pushed to state confidence that matches what it actually knows rather than overstating or understating it.
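The two training steps can be sketched in a heavily simplified form. Both functions are hypothetical illustrations, not the paper's code: `summarize_samples` replaces the summarization step with a plain frequency count over short sampled answers (an assumption made for brevity, since the source describes consolidating full generations into a coherent summary), and `log_score_reward` uses the logarithmic scoring rule as one example of a proper scoring rule applied to a user's forecast.

```python
import math
from collections import Counter


def summarize_samples(samples: list[str]) -> str:
    """Hypothetical stand-in for summary distillation.

    Collapses several sampled answers into one text whose stated
    confidences equal the answers' empirical frequencies. Counting
    short answers is a simplifying assumption.
    """
    counts = Counter(samples)
    n = len(samples)
    return " ".join(
        f"There is an estimated {round(100 * c / n)}% chance of {answer}."
        for answer, c in counts.most_common()
    )


def log_score_reward(forecast: dict[str, float], true_answer: str,
                     eps: float = 1e-6) -> float:
    """Logarithmic scoring rule, one example of a proper scoring rule.

    Returns the log of the probability the forecast assigned to the true
    answer. Probabilities below `eps` (including missing answers) are
    floored at `eps` so the reward stays finite.
    """
    return math.log(max(forecast.get(true_answer, 0.0), eps))
```

For example, `summarize_samples(["Paris", "Paris", "Lyon", "Paris"])` returns "There is an estimated 75% chance of Paris. There is an estimated 25% chance of Lyon.", and if Paris is correct, the forecast `{"Paris": 0.75, "Lyon": 0.25}` earns a reward of log 0.75, about -0.29. The log score is proper: if an answer is truly correct with probability p, the expected reward is maximized by forecasting exactly p, so a policy optimized against it gains nothing from overconfidence or underconfidence. The `eps` floor is an added design choice, not something the source specifies.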

Evaluation and Results

The paper's empirical evaluation finetunes the Llama 2 7B language model with this framework and finds significant improvements over baselines finetuned for factuality. Linguistic calibration improved calibration on both automated and human-evaluated metrics without sacrificing accuracy. The model also transferred zero-shot to other settings, performing well on in-domain and out-of-distribution question-answering datasets as well as on a separate biography generation task.

Notably, the model achieved a lower forecast expected calibration error (ECE, a weighted average of the gap between stated confidence and observed accuracy) while keeping prediction accuracy competitive. These results support the framework's usefulness in practical applications where LM outputs inform decisions.
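Expected calibration error can be computed by grouping forecasts into confidence bins and averaging, weighted by bin size, the gap between each bin's mean confidence and its accuracy. This sketch assumes ten equal-width bins and treats each confidence as the probability a forecast assigns to its chosen answer; the paper's exact binning and its definition of forecast confidence may differ.

```python
def expected_calibration_error(confidences: list[float], correct: list[bool],
                               n_bins: int = 10) -> float:
    """Binned ECE: size-weighted mean of |accuracy - mean confidence|.

    `confidences[i]` is the probability forecast i assigned to its chosen
    answer; `correct[i]` says whether that answer was right.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins on [0, 1]; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        mean_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(ok for _, ok in b) / len(b)
        ece += (len(b) / n) * abs(accuracy - mean_conf)
    return ece
```

For example, two forecasts at 90% confidence of which one is correct, plus two at 20% confidence that are both wrong, give an ECE of about 0.3. A lower value means stated confidence tracks accuracy more closely.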

Implications and Future Directions

This research has direct practical implications. When an LM's stated confidence matches its actual probability of being correct, users can judge how far to trust each claim, which can improve trust and reliability in applications ranging from medical and legal decision support systems to everyday queries. Linguistic calibration could therefore be adopted widely to make LMs more interpretable and trustworthy, especially for end users who rely on them for critical information and decisions.

Looking forward, future work could make calibration user-specific, tailoring it to individual user profiles or situational contexts. A better understanding of how people interpret linguistic expressions of confidence could also inform more nuanced calibration and improve LM-user interactions.

In summary, this paper advances the interpretability of LMs through linguistic calibration: by aligning the confidence LMs express with how often they are actually correct, it supports more informed and reliable decision-making.